Hi,

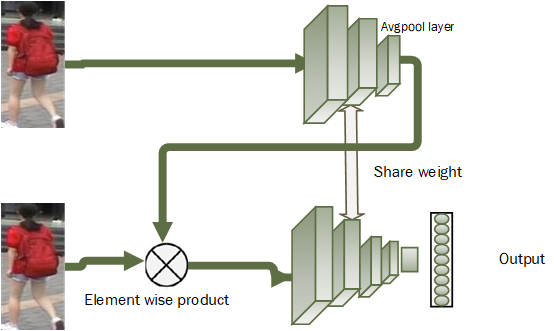

I have a fine-tuned ResNet model, but I have no idea how to share weights for this architecture.

Basically, the idea is to share the weights only for the base_network.

Thank you.

    import torch.nn as nn

    # Note: model_ft, weights_init_kaiming and weights_init_classifier are not
    # defined in this snippet; model_ft is presumably a pretrained ResNet.

    class ResNetModel(nn.Module):
        def __init__(self, class_num):
            super(ResNetModel, self).__init__()
            # replace avg pooling with global (adaptive) pooling
            model_ft.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            num_ftrs = model_ft.fc.in_features  # input size of the fully connected layer
            num_bottleneck = 512
            # add a linear layer, batchnorm layer, leakyrelu layer and dropout layer
            add_block = []
            add_block += [nn.Linear(num_ftrs, num_bottleneck)]
            add_block += [nn.BatchNorm1d(num_bottleneck)]
            add_block += [nn.LeakyReLU(0.1)]
            add_block += [nn.Dropout(p=0.5)]  # default dropout rate 0.5
            # transforms.CenterCrop(224),
            add_block = nn.Sequential(*add_block)
            add_block.apply(weights_init_kaiming)
            model_ft.fc = add_block
            self.model = model_ft  # base network: ResNet trunk + bottleneck block

            classifier = []
            classifier += [nn.Linear(num_bottleneck, class_num)]  # class_num classification
            classifier = nn.Sequential(*classifier)
            classifier.apply(weights_init_classifier)
            self.classifier = classifier

        def forward(self, x):
            x = self.model(x)       # base network features
            x = self.classifier(x)  # class scores
            return x
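
To make the question more concrete, here is a minimal sketch of what I understand by "sharing weights only for the base_network". I am assuming the architecture in the image has two input branches with separate classifiers, which may not be exactly right. In PyTorch, calling the same module instance on both inputs should mean both branches use and update one set of parameters. `SharedBaseModel`, `classifier_a`, `classifier_b` and the tiny `base` network are hypothetical names used only for illustration; in my case the base would be something like `self.model` from the class above.

```python
import torch
import torch.nn as nn


class SharedBaseModel(nn.Module):
    """Two-branch model: one shared base network, one classifier per branch."""

    def __init__(self, base_network, feat_dim, class_num):
        super().__init__()
        # Stored once, so both branches use (and update) the same parameters.
        self.base_network = base_network
        # Separate heads: these weights are NOT shared.
        self.classifier_a = nn.Linear(feat_dim, class_num)
        self.classifier_b = nn.Linear(feat_dim, class_num)

    def forward(self, x_a, x_b):
        feat_a = self.base_network(x_a)  # same module instance ...
        feat_b = self.base_network(x_b)  # ... called twice, so weights are shared
        return self.classifier_a(feat_a), self.classifier_b(feat_b)


# Hypothetical stand-in for the fine-tuned ResNet base (assumption: kept tiny
# so the sketch runs without torchvision or pretrained weights).
base = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(),
    nn.Linear(8, 512),
)

model = SharedBaseModel(base, feat_dim=512, class_num=10)
out_a, out_b = model(torch.randn(4, 3, 32, 32), torch.randn(4, 3, 32, 32))
```

Since the base network is registered only once, `model.parameters()` contains its weights a single time, and gradients from both branches accumulate into them during `backward()`.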