Hi

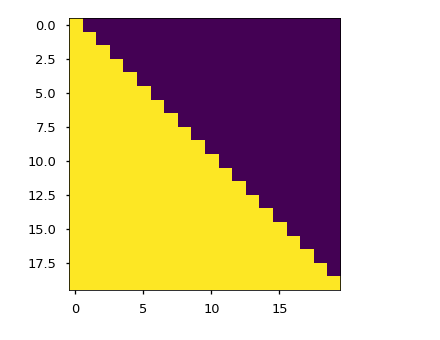

I would like to train a language model and want to use a transformer for it. I have heard that masks in the decoders are very important during training; for example, a mask like the one above is used in the decoder when training models like BERT.
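To make the question concrete, here is roughly the kind of mask I mean. It is only a minimal NumPy sketch of a causal (look-ahead) mask, and I am assuming the mask in the picture is of this type. The names `causal_mask` and `masked_softmax` are my own, not from any library.

```python
import numpy as np

def causal_mask(seq_len: int) -> np.ndarray:
    """Return a (seq_len, seq_len) boolean mask; True means 'may attend'."""
    # Lower-triangular: position i may look at positions 0..i, never later ones.
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Row-wise softmax over attention scores, ignoring masked-out positions."""
    # Blocked positions are set to -inf so they receive zero weight.
    masked = np.where(mask, scores, -np.inf)
    # Subtract the row maximum for numerical stability (the diagonal is always visible).
    masked = masked - masked.max(axis=-1, keepdims=True)
    weights = np.exp(masked)
    return weights / weights.sum(axis=-1, keepdims=True)

mask = causal_mask(4)
# Dummy scores of all zeros, just to show the effect of the mask.
weights = masked_softmax(np.zeros((4, 4)), mask)
print(mask.astype(int))
print(weights)
```

With this mask, each token can only put attention weight on itself and on earlier tokens.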

I was wondering whether I have to define them myself, or whether they are already built into the model by default?