It’s important to understand the async behavior w.r.t. the host and device.

A proper profile helps visualize what your code is doing (I’ve added warmup iterations).

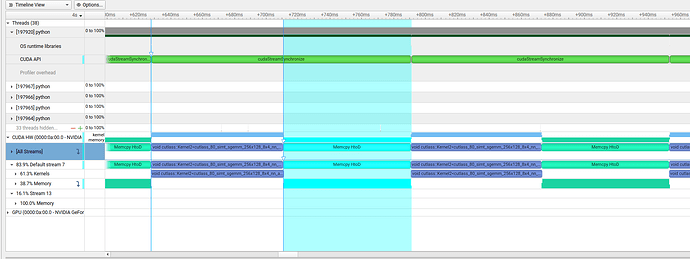

In the first part of the code the copies are synchronous, so the CPU waits until the operation finishes before launching the matmul kernel.

In this section of the Nsight Systems profile you can see the alternating kernel execution (bottom row). The top row shows the launch of the copy kernel followed by a sync, and the matmul kernel launch (hard to see as the sync takes a lot more time than the launches):

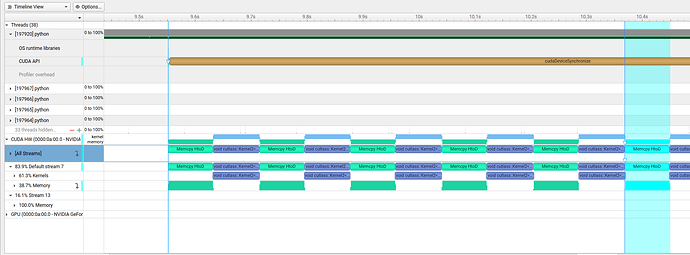

The second code snippet uses async kernel launches (via pinned memory and non_blocking=True), but kernel execution on the GPU will still alternate, since launches are stream-ordered and you are only using the default stream.

You can see here that all kernel launches are packed together on the top row on the left followed by a single sync.

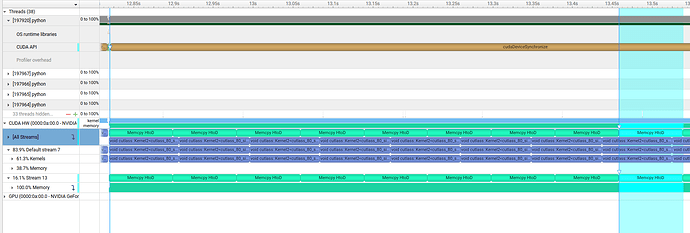
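For reference, the default-stream pattern described above could look roughly like the sketch below. The function name, tensor sizes, and return value are my own assumptions for illustration, not taken from your script; the point is only that the host tensor is pinned and the copy is issued with non_blocking=True, while everything still runs in order on the default stream.

```python
import torch


def async_copy_default_stream(n=1024):
    """Hypothetical helper: async H2D copy plus matmul, both on the default stream."""
    if not torch.cuda.is_available():
        return None  # nothing to demonstrate without a GPU

    a = torch.randn(n, n, device="cuda")
    b = torch.randn(n, n, device="cuda")
    c = torch.randn(n, n).pin_memory()  # page-locked host memory

    # With a pinned source, this call can return before the copy has finished.
    c_gpu = c.to("cuda", non_blocking=True)

    # Queued on the same (default) stream, so it runs after the copy, not alongside it.
    out = torch.matmul(a, b)

    torch.cuda.synchronize()  # wait for all queued work before reading results
    return c.is_pinned(), c_gpu.device.type, tuple(out.shape)
```

The CPU is freed up to queue more work, but the GPU timeline still shows copy, matmul, copy, matmul.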

Now using custom CUDA streams allows us to also overlap the device copies and execution:

```python
# a, b, c, start_event and end_event are defined as in the earlier snippets
s = torch.cuda.Stream()

# warmup
for _ in range(10):
    with torch.cuda.stream(s):
        c.to('cuda:0', non_blocking=True)  # Asynchronous copy on the custom stream
    _ = torch.matmul(a, b)  # Large matrix operation on the default stream

# Test with non_blocking=True
start_event.record()
for _ in range(10):
    with torch.cuda.stream(s):
        c.to('cuda:0', non_blocking=True)  # Asynchronous copy on the custom stream
    _ = torch.matmul(a, b)  # Large matrix operation on the default stream
end_event.record()
torch.cuda.synchronize()

time_non_blocking_true = start_event.elapsed_time(end_event)
print(f"Time with non_blocking=True: {time_non_blocking_true:.3f} ms")
```

Since you are now using a custom stream, you need to take care of the required synchronization yourself before consuming this data. Take a look at the CUDA streams docs for more information.
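As a minimal sketch of what that synchronization could look like (the function name, sizes, and the final addition are my own assumptions, just to have something that consumes the copied tensor): make the consuming stream wait on the copy stream via wait_stream, and call record_stream so the caching allocator knows the tensor is also used on another stream.

```python
import torch


def copy_on_side_stream_then_consume(n=1024):
    """Hypothetical helper: copy on a side stream, then safely use the result."""
    if not torch.cuda.is_available():
        return None  # nothing to demonstrate without a GPU

    s = torch.cuda.Stream()
    a = torch.randn(n, n, device="cuda")
    b = torch.randn(n, n, device="cuda")
    c = torch.randn(n, n).pin_memory()

    with torch.cuda.stream(s):
        c_gpu = c.to("cuda", non_blocking=True)  # copy is queued on stream s

    out = torch.matmul(a, b)  # default stream; free to overlap with the copy

    # Before the default stream touches c_gpu, make it wait for the copy on s.
    torch.cuda.current_stream().wait_stream(s)
    # c_gpu was allocated on s but is used on the default stream.
    c_gpu.record_stream(torch.cuda.current_stream())

    result = out + c_gpu  # safe: ordered after the copy
    torch.cuda.synchronize()
    return torch.allclose(result.cpu(), out.cpu() + c)
```

Without the wait_stream call, the addition could start while the copy is still in flight and read incomplete data.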