Size mismatch

I am a beginner and I am trying to use a simple classifier I found online with my own custom images.

I have 2 classes of RGB images, each of size 224x224.

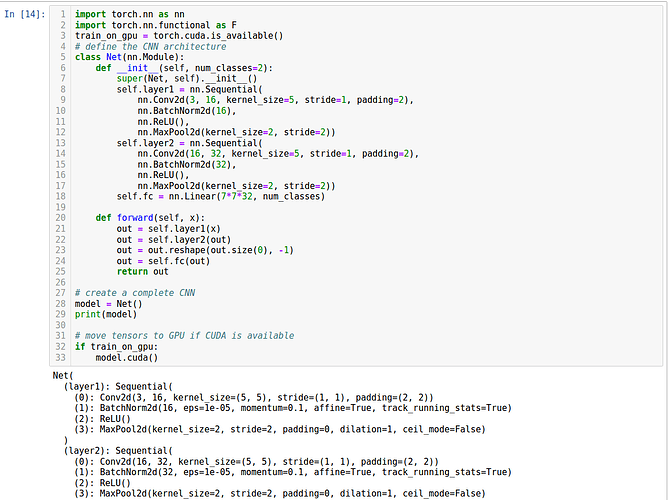

When I run the code below I get a size mismatch error.

May I know where these numbers come from?

RuntimeError: size mismatch, m1: [10 x 100352], m2: [1568 x 2] at /pytorch/aten/src/THC/generic/THCTensorMathBlas.cu:290

I know 10 comes from the batch size, but what about 100352?

Below is my code and my error

Hi,

Next time, could you please post your code in a code block rather than as a screenshot?

It makes it easier to debug.

Your problem is most likely the input dimension of your fully connected layer, `self.fc = nn.Linear(7*7*32, num_classes)`.

It seems like this network was made to run with RGB images of size 28x28. Since you provide it with images of size 224x224, the feature maps that get passed into the fc layer are not of size 7x7x32 but 56x56x32 (if my calculations are correct).

So to make it work, change:

`self.fc = nn.Linear(7*7*32, num_classes)`

to

`self.fc = nn.Linear(56*56*32, num_classes)`
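The 7 and the 56 can be checked by hand with the usual output-size formula, `(size + 2*padding - kernel) // stride + 1`. Here is a minimal sketch in plain Python. The code from the screenshot is not reproduced in this thread, so the layer settings are assumptions: two blocks, each a 5x5 convolution with stride 1 and padding 2 followed by a 2x2 max pool with stride 2. The helper names `conv_out` and `flattened_features` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_features(input_size, out_channels=32):
    # Assumed layout: 2 x [5x5 conv, stride 1, padding 2 -> 2x2 max pool, stride 2]
    size = input_size
    for _ in range(2):
        size = conv_out(size, kernel=5, stride=1, padding=2)  # conv keeps the size
        size = conv_out(size, kernel=2, stride=2)             # pooling halves it
    return size, out_channels * size * size


print(flattened_features(28))   # (7, 1568)    -> 7*7*32
print(flattened_features(224))  # (56, 100352) -> 56*56*32
```

Under these assumptions, 1568 is the `m2` side of the error (what the fc layer expects) and 100352 is what a 224x224 input actually produces.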

Hi @RaLo4,

Thanks for your help.

Noted on the code part.

May I know how `7*7*32` is derived?

Or, in my case, `56*56*32`?

How does the output of conv2 (16, 32, 5) become `56*56*32`?

From my understanding, the output of conv2 becomes the input of fc.

Or am I wrong?

Yes, you are correct. Well, there are still the batch norm, ReLU and max pool in between, but more or less, yes.

It helps to calculate and trace the shape of your image tensor throughout your network.

When your image tensor leaves self.layer2, it has the shape batch_size x 32 x 56 x 56. In this shape you could just pass it through another conv layer. But if you want to pass it through a fully connected layer, you need to flatten the tensor first.

Your `out = out.reshape(out.size(0), -1)` does this for you.

So if you flatten your tensor, it becomes `batch_size x (32 * 56 * 56)`, i.e. batch_size x 100352.
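To trace the shapes yourself, you can push a dummy batch through the layers and print the shape at each step. The sketch below is only a stand-in for the network in the question: the padding, stride and pooling values are assumptions (the screenshot is not reproduced here), and `num_classes = 2` is taken from the "2 classes" mentioned in the question.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for the network in the question; padding, stride
# and pooling settings are assumptions, not copied from the screenshot.
layer1 = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=2),
    nn.BatchNorm2d(16),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
)
layer2 = nn.Sequential(
    nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
    nn.BatchNorm2d(32),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
)
num_classes = 2

with torch.no_grad():
    x = torch.randn(10, 3, 224, 224)     # dummy batch of 10 RGB images
    out = layer2(layer1(x))
    print(out.shape)                     # torch.Size([10, 32, 56, 56])

    flat = out.reshape(out.size(0), -1)  # keep the batch dim, flatten the rest
    print(flat.shape)                    # torch.Size([10, 100352])

    # Read in_features from the flattened tensor instead of hard-coding it.
    fc = nn.Linear(flat.size(1), num_classes)
    print(fc(flat).shape)                # torch.Size([10, 2])
```

Reading `in_features` from a dummy forward pass like this is one way to avoid redoing the arithmetic every time the input size changes.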

I explained this recently using some example code. If you want to check this out here is a link.

If you are still not quite getting it or have any more questions, please feel free to ask more!