I am learning PyTorch using the d2l online book as a reference and I’m wanting to train a simple single input/single output model that looks like

y = ReLu(w_1x+b_1) + ReLu(w_2x+b_2), where x is the input, and w_i and b_i for i=1,2 are parameters.

If someone can review the code below and point out why the parameter vector is not converging to a value that makes sense I would really appreciate it. Originally I thought that the line l.sum().backward() was the culprit but I coded it manually by using the individual components (similar to the expression in the model function) and got the same result. Also, I have modified the training data to have only 2 linear parts (thinking that it would be easier for this model to approximate it) but the model still failed. Thanks so much.

import torch

import random

from matplotlib import pyplot as plt

from IPython import display

def make_data(mean, variance, num_examples):

# Make a piecewise linear data distribution

x = torch.normal(0, 5, (num_examples, 1))

y = torch.zeros(num_examples, 1)

i = 0

for xi in x:

if xi < 0:

y[i] = xi + 2

elif xi >= 0 and xi < 5:

y[i] = 2 * xi - 1

else:

y[i] = -xi + 1

i = i + 1

return x, y

def data_iter(batch_size, features, labels):

# Takes a batch size, a matrix of features, and a vector of labels,

# yielding minibatches of the size batch_size

num_examples = len(features)

indices = list(range(num_examples))

random.shuffle(indices)

for i in range(0, num_examples, batch_size):

batch_indices = torch.tensor(indices[i:min(i +

batch_size, num_examples)])

yield features[batch_indices], labels[batch_indices]

def relu(X):

a = torch.zeros_like(X)

return torch.max(X, a)

def model(features, w, b):

# One input, one output model

return relu(w[0] * features + b[0]) + relu(w[1] * features + b[1])

def squared_loss(y_hat, y):

return (y_hat - y.reshape(y_hat.shape))**2 / 2

def sgd(params, lr, batch_size):

# Minibatch stochastic gradient descent

with torch.no_grad():

for param in params:

param -= lr * param.grad / batch_size

param.grad.zero_()

num_examples = 10

features, labels = make_data(0, 1, num_examples)

# Initialize parameters, w and b

w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)

b = torch.zeros(size=(2, 1), requires_grad=True)

batch_size = 5

lr = 0.001 # learning rate

num_epochs = 10

net = model

loss = squared_loss

# Training Loop

for epoch in range(num_epochs):

for X, y in data_iter(batch_size, features, labels):

l = loss(net(X, w, b), y)

l.sum().backward()

sgd([w, b], lr, batch_size)

with torch.no_grad():

train_l = loss(net(features, w, b), labels)

print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')

print(f"w: {w} b: {b}")

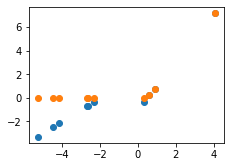

labelshat = model(features, w, b)

display.set_matplotlib_formats('svg')

plt.rcParams['figure.figsize'] = (3.5, 2.5)

fig, ax = plt.subplots()

ax.scatter(features.detach().numpy(), labels.detach().numpy())

ax.scatter(features.detach().numpy(), labelshat.detach().numpy())

plt.show()