I am trying to write a very simple selection algorithm:

- With a given NN, generate a set of weights and a fitness value for each of N specimens

- Select the fittest 20% of the specimens

- Pass their weights back to the NN
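
The selection step in the list above can be sketched in isolation. This is only a minimal sketch, assuming the population is stored as a 2-D NumPy array with one genome per row plus a matching 1-D fitness array; the helper name `select_elite` and the toy data are hypothetical and not part of the code further down.

```python
import math
import numpy as np

def select_elite(genomes, fitness, fraction=0.2):
    """Return the fittest rows of `genomes`, best first (hypothetical helper)."""
    n_elite = max(1, math.ceil(len(fitness) * fraction))
    # argsort is ascending, so the last n_elite indices belong to the fittest specimens
    elite_idx = np.argsort(fitness)[-n_elite:][::-1]
    return genomes[elite_idx], fitness[elite_idx]

rng = np.random.default_rng(0)
genomes = rng.normal(size=(10, 5))    # toy population: 10 specimens, 5 weights each
fitness = np.arange(10, dtype=float)  # toy fitness values 0..9
elite_genomes, elite_fitness = select_elite(genomes, fitness)
print(elite_fitness)
```

Sorting the whole fitness array is slower than a partial sort, but it returns the elite ordered from best to worst, which can be convenient when only a handful of specimens are kept.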

I am stuck on passing the weights back to the NN model. I have seen several ways of implementing this, but none of them are for a model defined as a class; they are all for simple single-layer structures. Also, none of them are iterative: they only set custom weights once, at initialization before training starts.
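
For a class-based model with several layers, one possible way to write a flat genome back into the network, and to do so repeatedly, is sketched below. It is a minimal sketch, assuming the genome holds the trainable parameters themselves, in the order returned by `model.parameters()`; the `TinyNet` class and its sizes are hypothetical stand-ins for a real model. `parameters_to_vector` and `vector_to_parameters` come from `torch.nn.utils`.

```python
import torch
import torch.nn as nn
from torch.nn.utils import parameters_to_vector, vector_to_parameters

class TinyNet(nn.Module):
    # Hypothetical two-layer model standing in for a larger class-based network
    def __init__(self):
        super().__init__()
        self.hidden = nn.Sequential(nn.Linear(4, 3), nn.ReLU())
        self.out = nn.Linear(3, 2)

    def forward(self, x):
        return self.out(self.hidden(x))

model = TinyNet()

# Flatten all trainable parameters into one 1-D genome
genome = parameters_to_vector(model.parameters()).detach().clone()
n_weights = genome.numel()  # 4*3 + 3 + 3*2 + 2 parameters in total

# Stand-in for a genome produced by the selection step (every value set to 0.5)
new_genome = torch.full_like(genome, 0.5)

# Copy the flat genome back into the layers, in parameters() order
with torch.no_grad():
    vector_to_parameters(new_genome, model.parameters())

restored = parameters_to_vector(model.parameters()).detach()
```

The write-back call can be repeated once per generation. Buffers such as BatchNorm running statistics are not part of `parameters()`, so they would have to be handled separately, for example through `state_dict()`.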

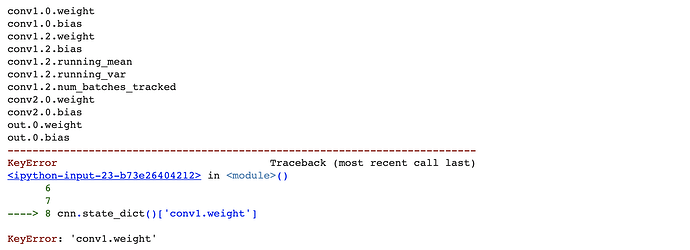

Here is my current code. It generates a genome and a fitness value for 10 samples and selects the two fittest ones. I tried setting all weights of the first layer to “1”, but that raises the error shown below the code. Even if it worked, it is not what I need: I need to pass the tensor elite_c1[1, 0:100352] to the first layer and elite_c1[1, 100352:] to the second layer.
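
Before the full listing, the slicing described above (one range of a flat vector per layer) can be sketched on a toy model. This is a hedged sketch, not the model from this post: `ToyNet`, its layer sizes and the 18-element vector are hypothetical. It also shows that modules wrapped in `nn.Sequential` appear in `state_dict()` under positional keys such as `layer1.0.weight` rather than `layer1.weight`.

```python
import torch
import torch.nn as nn

class ToyNet(nn.Module):
    # Hypothetical model whose attributes are nn.Sequential containers
    def __init__(self):
        super().__init__()
        self.layer1 = nn.Sequential(nn.Linear(4, 3), nn.ReLU())
        self.layer2 = nn.Sequential(nn.Linear(3, 2))

    def forward(self, x):
        return self.layer2(self.layer1(x))

model = ToyNet()
keys = list(model.state_dict().keys())  # positional keys, e.g. 'layer1.0.weight'

# Toy flat genome: the first 12 values go to layer1, the remaining 6 to layer2
flat = torch.arange(18, dtype=torch.float32)
w1_size = model.layer1[0].weight.numel()
new_state = {
    "layer1.0.weight": flat[:w1_size].view_as(model.layer1[0].weight),
    "layer2.0.weight": flat[w1_size:].view_as(model.layer2[0].weight),
}

# strict=False leaves entries that are absent from new_state (the biases) untouched
result = model.load_state_dict(new_state, strict=False)
```

Note that the second slice starts exactly where the first one ends (`flat[w1_size:]`), so no element of the vector is skipped.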

    import torch
    from pylab import *
    from torchvision import datasets
    from torchvision.transforms import ToTensor
    from torch.utils.data import DataLoader
    from torch.autograd import Variable
    import numpy as np
    import torch.nn as nn
    import math

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    train_data = datasets.MNIST(
        root = 'data',
        train = True,
        transform = ToTensor(),
        download = True,
    )

    test_data = datasets.MNIST(
        root = 'data',
        train = False,
        transform = ToTensor()
    )

    batch_size = 32

    loaders = {
        'train' : torch.utils.data.DataLoader(train_data,
                                              batch_size=batch_size,
                                              shuffle=True,
                                              num_workers=1),
        'test'  : torch.utils.data.DataLoader(test_data,
                                              batch_size=batch_size,
                                              shuffle=True,
                                              num_workers=1),
    }
    loaders

    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            self.conv1 = nn.Sequential(  # number of weights 100352
                nn.Conv2d(
                    in_channels=1,
                    out_channels=16,
                    kernel_size=5,
                    stride=1,
                    padding=2,
                ),
                nn.MaxPool2d(2,2),
                nn.BatchNorm2d(16),
                nn.Flatten()
            )
            self.conv2 = nn.Sequential(  # number of weights 8192
                nn.Linear(16 * 14 * 14, batch_size * 8),
                nn.ReLU(),
                nn.Flatten()
            )
            self.out = nn.Sequential(nn.Linear(batch_size*8, 10), nn.Softmax(dim=1))
            self.weights_initialization()

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            output = self.out(x)
            return output

        def weights_initialization(self):
            for m in self.modules():
                if isinstance(m, nn.Linear):
                    nn.init.xavier_normal_(m.weight)
                    nn.init.constant_(m.bias, 0)

    cnn = CNN()

    activation = {}

    def getActivation(name):
        def hook(model, input, output):
            activation[name] = output.detach()
        return hook

    h1 = cnn.conv1.register_forward_hook(getActivation('conv1'))
    h2 = cnn.conv2.register_forward_hook(getActivation('conv2'))
    h3 = cnn.out.register_forward_hook(getActivation('out'))

    images, labels = next(iter(loaders['train']))
    num_epochs = 1
    chromosome = []
    fitness = 0

    def single_run(images, labels, num_epochs, chromosome, fitness):
        def train(num_epochs, cnn, loaders):
            cnn.train()
            for i, (images, labels) in enumerate(loaders['train']):
                b_x = Variable(images)  # batch x
                b_y = Variable(labels)  # batch y
                output = cnn(b_x)[0]

        out_ten = activation['out']
        layer_weights_1 = activation['conv1'].numpy().flatten()
        layer_weights_2 = activation['conv2'].numpy().flatten()
        chromosome = np.append(layer_weights_1, layer_weights_2)

        #fitness
        for j in range(len(out_ten)):
            if out_ten[j].argmax().item() == labels[j].item():
                fitness += 1
        return fitness, chromosome

    fitness1, chromosome1 = single_run(images, labels, num_epochs, chromosome, fitness)

    population_c = []
    population_f = []
    size = 10

    #generate 10 samples
    def populate(size, population_c, population_f):
        for i in range(size):
            f, c = single_run(images, labels, num_epochs, chromosome, fitness)
            population_f = np.append(population_f, f)
            if i == 0:
                population_c = np.append(population_c, c)
            else:
                population_c = np.vstack((population_c, c))
        return population_c, population_f

    population_c1, population_f1 = populate(size, population_c, population_f)

    #choose the weights that correspond to the best performance
    def selection(population_c, population_f):
        elite = int(math.ceil(len(population_f) * 0.2))
        elite_ind = np.argpartition(population_f, -elite)[-elite:]
        elite_c = np.take(population_c, elite_ind, 0)
        elite_f = np.take(population_f, elite_ind, 0)
        return elite_c, elite_f

    elite_c1, elite_f1 = selection(population_c1, population_f1)
    elite_c1[1, 0:100352]  #weights for layer 1 of sample 1

    with torch.no_grad():
        for layer in cnn.state_dict():
            print(layer)
            cnn.state_dict()[layer].data.fill_(1)

    cnn.state_dict()['conv1.weight']

Error: