Hi! I’m implementing a basic time-series autoencoder in PyTorch, following a tutorial written in Keras, and would appreciate guidance on a PyTorch interpretation. I think this would also be useful for other people working through the tutorial. Thanks all! HL.

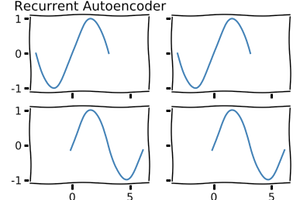

In the tutorial, pairs of short segments of sine waves (10 time steps each) are fed through a simple autoencoder (LSTM/Repeat/LSTM) in order to forecast 10 time steps. The latent space has 2 dimensions, as the experiment attempts to learn a notion of phase.

Keras implementation from the website:

    from keras.layers import LSTM, RepeatVector
    ...
    m = Sequential()
    m.add(LSTM(2, input_shape=(10, 2)))
    m.add(RepeatVector(10))
    m.add(LSTM(2, return_sequences=True))
    ...

I think the below is along the right lines for a PyTorch equivalent - but I have some questions inline:

    seq_len = 10
    input_dim = 2
    latent_dim = 2
    batches = 32  # Arbitrary
    ...
    class AE(nn.Module):
        ...
        def __init__(self, input_dim, latent_dim):
            ...
            self.enc = nn.LSTM(input_dim, latent_dim)
            self.dec = nn.LSTM(latent_dim, input_dim)
            ...
        def forward(self, x_batch):
            # From [batches x seq_len x input_dim] to [seq_len x batches x input_dim]
            x_batch = x_batch.permute(1,0,2)
            # Extract last hidden, the 'bottleneck'.
            _, (prev_hidden, _) = self.enc(x_batch)
            # (Q) Is this repeat correct? I also wonder if this will fail for multiple layers.
            encoded = prev_hidden.repeat(x_batch.shape)
            # Pass through decoder for output [seq_len x batches x input_dim]
            out, _ = self.dec(encoded)
            # (Q) Maybe, at this stage, there should be a Linear layer instead of just
            # returning the hidden state from the decoding LSTM?
            return out
    ....
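On the repeat question: `Tensor.repeat` multiplies each dimension by the given count, so calling it on a `[1, 32, 2]` hidden state with `x_batch.shape` (`[10, 32, 2]`) produces `[10, 1024, 4]` rather than `[10, 32, 2]`. One reading of Keras's `RepeatVector(10)` is to take the final hidden state of the last encoder layer and tile it along the time axis only. The sketch below assumes that reading. The class name `LSTMAutoencoder`, the `num_layers` argument, the use of `batch_first=True`, and the final `nn.Linear` are illustrative choices, not part of the tutorial.

```python
import torch
import torch.nn as nn


class LSTMAutoencoder(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, input_dim, latent_dim, num_layers=1):
        super().__init__()
        # batch_first=True keeps tensors as [batch, seq_len, features],
        # so no permute is needed (an assumption that differs from the post).
        self.enc = nn.LSTM(input_dim, latent_dim,
                           num_layers=num_layers, batch_first=True)
        self.dec = nn.LSTM(latent_dim, latent_dim, batch_first=True)
        # Linear projection so the output is not limited to the LSTM's (-1, 1) range.
        self.out = nn.Linear(latent_dim, input_dim)

    def forward(self, x_batch):
        seq_len = x_batch.shape[1]
        # h_n has shape [num_layers, batch, latent_dim].
        _, (h_n, _) = self.enc(x_batch)
        # Keep only the top layer, so this also works when num_layers > 1.
        bottleneck = h_n[-1]                                  # [batch, latent_dim]
        # Tile along a new time axis, analogous to RepeatVector(seq_len).
        repeated = bottleneck.unsqueeze(1).repeat(1, seq_len, 1)
        dec_out, _ = self.dec(repeated)                       # [batch, seq_len, latent_dim]
        return self.out(dec_out)                              # [batch, seq_len, input_dim]


model = LSTMAutoencoder(input_dim=2, latent_dim=2, num_layers=2)
x = torch.randn(32, 10, 2)   # [batches, seq_len, input_dim]
y = model(x)
print(y.shape)               # torch.Size([32, 10, 2])
```

Indexing `h_n[-1]` is what makes the multi-layer case safe, since `h_n` otherwise carries one hidden state per layer. Whether the `nn.Linear` is needed depends on the target range: an LSTM's hidden state lies in (-1, 1), which happens to cover unscaled sine waves, so returning the decoder output directly (as the Keras model does) may also be adequate here.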

I suspect it should be easy enough to adapt the encoder above (self.enc) into some (multi-)layered combination of Linear/Conv1d layers; but the LSTM interaction is confusing for me, and I suspect it's quite simple.
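As a rough illustration of that adaptation, here is a minimal sketch of a convolutional encoder that maps a `[batches, seq_len, input_dim]` batch to a `[batches, latent_dim]` bottleneck. The name `ConvEncoder`, the `channels` width, and the kernel size are assumptions chosen for the example; the one PyTorch-specific detail is that `nn.Conv1d` expects `[batch, channels, length]`, so the time and feature axes are swapped first.

```python
import torch
import torch.nn as nn


class ConvEncoder(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, input_dim, latent_dim, seq_len, channels=8):
        super().__init__()
        # padding=1 with kernel_size=3 keeps the sequence length unchanged.
        self.conv = nn.Conv1d(input_dim, channels, kernel_size=3, padding=1)
        self.fc = nn.Linear(channels * seq_len, latent_dim)

    def forward(self, x_batch):
        # [batch, seq_len, input_dim] -> [batch, input_dim, seq_len] for Conv1d.
        x = x_batch.permute(0, 2, 1)
        x = torch.relu(self.conv(x))            # [batch, channels, seq_len]
        return self.fc(x.flatten(start_dim=1))  # [batch, latent_dim]


conv_enc = ConvEncoder(input_dim=2, latent_dim=2, seq_len=10)
z = conv_enc(torch.randn(32, 10, 2))
print(z.shape)  # torch.Size([32, 2])
```

The resulting `z` plays the same role as the LSTM's last hidden state: it could be repeated along the time axis and fed to an LSTM decoder in the usual way.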