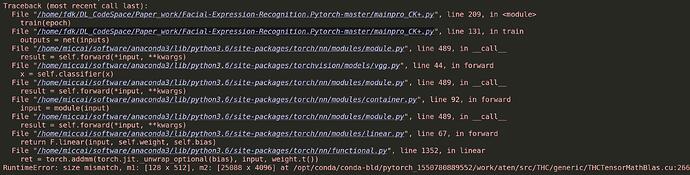

I want to use the pre-trained VGG19 to initialize my parameters and then train my model, but I got this problem. Please help me.

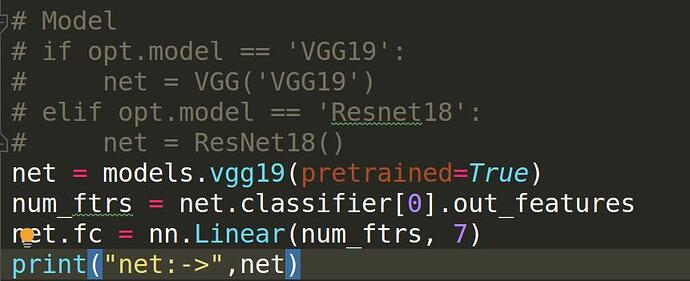

You should try something along the lines of:

num_ftrs = net.classifier[-1].in_features

net.classifier[-1] = nn.Linear(num_ftrs, 7)

This will make the model output raw scores for seven classes instead of the default 1000.

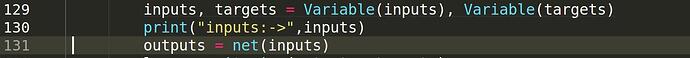

Thanks, but it doesn't work. I guess it could be caused by my image size.

How large is each image you pass to the model?

torchvision.models.vgg uses an adaptive pooling layer which should take variable sized inputs. However, if you are using an older version, your model might be missing this layer.
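As a rough illustration of why the adaptive pooling layer accepts variable sized inputs, here is a minimal sketch using `nn.AdaptiveAvgPool2d` on its own. The feature-map sizes (1, 7 and 10) are made up for the example; the `(7, 7)` target is the output size that the torchvision VGG definition uses before its classifier.

```python
import torch
import torch.nn as nn

# Adaptive pooling is given the desired OUTPUT size, not a kernel size,
# so the spatial size of the input is free to vary.
pool = nn.AdaptiveAvgPool2d((7, 7))

# 512-channel feature maps with different spatial sizes
# (these sizes are assumptions chosen only for illustration).
shapes = []
for side in (1, 7, 10):
    features = torch.randn(1, 512, side, side)
    shapes.append(tuple(pool(features).shape))

print(shapes)
```

Every entry has shape `(1, 512, 7, 7)`, so the first linear layer of the classifier always receives 512 * 7 * 7 = 25088 features regardless of the input resolution.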

My images are 44 x 44 with 3 channels.

In that case @crowsonkb’s suggestion should work fine:

import torch

import torch.nn as nn

from torchvision import models

model = models.vgg19()

num_ftrs = model.classifier[-1].in_features

model.classifier[-1] = nn.Linear(num_ftrs, 7)

x = torch.randn(1, 3, 44, 44)

output = model(x)

print(output.shape)

> torch.Size([1, 7])
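If you are on an older torchvision version without the adaptive pooling layer, the image size does matter. Here is a small back-of-the-envelope sketch in plain Python, assuming the standard VGG19 layout: 3x3 convolutions with padding 1, which keep the spatial size, and five 2x2 max-pool layers, which each halve it. The helper names `vgg_feature_side` and `flattened_features` are hypothetical and only used for this illustration.

```python
def vgg_feature_side(side, num_pools=5):
    """Spatial size after the VGG feature extractor (hypothetical helper).

    Each 2x2 max-pool with stride 2 halves the side, rounding down.
    Valid as long as the side stays >= 2 before every pooling step.
    """
    for _ in range(num_pools):
        side //= 2
    return side


def flattened_features(side, channels=512, adaptive_pool_to=None):
    """Number of features fed into the classifier (hypothetical helper)."""
    out = vgg_feature_side(side)
    if adaptive_pool_to is not None:
        # Adaptive pooling forces a fixed output size, e.g. 7x7.
        out = adaptive_pool_to
    return channels * out * out


print(vgg_feature_side(224))                        # 7
print(vgg_feature_side(44))                         # 1
print(flattened_features(44))                       # 512
print(flattened_features(44, adaptive_pool_to=7))   # 25088
```

The first classifier layer expects 25088 inputs, so without adaptive pooling a 44 x 44 image would give only 512 features and produce a size mismatch. With the adaptive pooling layer the count is 25088 again, which is why the snippet above runs.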