Hi!

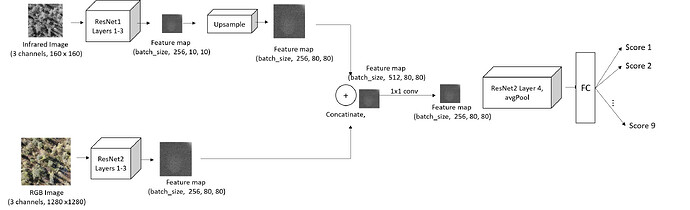

I am trying to merge two pretrained ResNet models. ResNet1 is trained on one set of images of input size 160x160 and ResNet2 is trained on another set of images of size (1280,1280). I then try to ensemble the two models as shown in the diagram below:

After the concatenation and the 1x1 conv layer, the feature map has the same dimensions as the input to the final convolutional stage (layer4) of the original ResNet2, and from there it goes through the same layer4, average pooling and fully connected layer as in ResNet2. So I don’t understand why there would be a size mismatch error. Help would be greatly appreciated.

Here is my model code. The shapes in the print comments are for a batch size of 3:

    import torch
    import torch.nn as nn


    class ResNetEnsembleInfraredRGB(nn.Module):
        def __init__(self, num_classes, ResNetRGB, ResNetIR):
            super().__init__()
            print(ResNetRGB)
            self.ResNetRGB = ResNetRGB
            self.ResNetIR = ResNetIR

            for param in self.ResNetRGB.parameters():  # Freeze all parameters
                param.requires_grad = False
            for param in self.ResNetRGB.model.layer4.parameters():
                param.requires_grad = True
            for param in self.ResNetRGB.model.layer3.parameters():
                param.requires_grad = True

            for param in self.ResNetIR.parameters():  # Freeze all parameters
                param.requires_grad = False
            # ResNetIR layer4 will not be used
            for param in self.ResNetRGB.model.layer3.parameters():
                param.requires_grad = True

            self.upsample = nn.Sequential(  # Double size 3 times
                nn.ConvTranspose2d(256, 256, 3, stride=2, padding=1, output_padding=1),
                nn.ConvTranspose2d(256, 256, 3, stride=2, padding=1, output_padding=1),
                nn.ConvTranspose2d(256, 256, 3, stride=2, padding=1, output_padding=1),
            )

            # After concat of layers from infrared and rgb, do a 1x1 conv layer to reduce dimensions.
            self.model_Head = nn.Sequential(
                nn.Conv2d(512, 256, kernel_size=1, stride=1, padding=0, bias=False),
                self.ResNetRGB.model.layer4,
                self.ResNetRGB.model.avgpool,
            )
            self.classifer = self.ResNetRGB.model.fc

        def forward(self, x_rgb, x_infrared):
            # rgb_base
            x_rgb = self.ResNetRGB.model.conv1(x_rgb)
            x_rgb = self.ResNetRGB.model.bn1(x_rgb)
            x_rgb = self.ResNetRGB.model.relu(x_rgb)
            x_rgb = self.ResNetRGB.model.maxpool(x_rgb)
            x_rgb = self.ResNetRGB.model.layer1(x_rgb)
            x_rgb = self.ResNetRGB.model.layer2(x_rgb)
            x_rgb = self.ResNetRGB.model.layer3(x_rgb)
            print()
            print('x_rgb', x_rgb.size())  # torch.Size([3, 256, 80, 80])

            # infrared_base
            x_infrared = self.ResNetIR.model.conv1(x_infrared)
            x_infrared = self.ResNetIR.model.bn1(x_infrared)
            x_infrared = self.ResNetIR.model.relu(x_infrared)
            x_infrared = self.ResNetIR.model.maxpool(x_infrared)
            x_infrared = self.ResNetIR.model.layer1(x_infrared)
            x_infrared = self.ResNetIR.model.layer2(x_infrared)
            x_infrared = self.ResNetIR.model.layer3(x_infrared)

            # give outputs the same dimension... scale up infrared or downscale rgb
            print('x_infrared', x_infrared.size())  # torch.Size([3, 256, 10, 10])
            x_infrared = self.upsample(x_infrared)
            print('x_infrared', x_infrared.size())  # torch.Size([3, 256, 80, 80])

            x = torch.cat((x_rgb, x_infrared), dim=1)
            print('x cat', x.size())  # torch.Size([3, 512, 80, 80])
            x = self.model_Head(x)
            print('x head', x.size())  # torch.Size([3, 512, 1, 1])
            x = self.classifer(x)
            print('x classifier', x.size())  ## ERROR
            return x
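
As a sanity check on the printed shapes, the spatial sizes can be worked out by hand. The sketch below is not part of the model. It assumes both backbones are standard ResNets with a total stride of 16 up to and including layer3, and it uses the documented `ConvTranspose2d` output size formula; the helper names `backbone_out` and `conv_transpose_out` are made up for illustration.

```python
def conv_transpose_out(size, kernel=3, stride=2, padding=1,
                       output_padding=1, dilation=1):
    """Output size of one ConvTranspose2d layer along one spatial dimension."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel - 1) + output_padding + 1)


def backbone_out(size, total_stride=16):
    """Spatial size after conv1, maxpool, layer1, layer2 and layer3 (assumed stride 16)."""
    return size // total_stride


rgb_size = backbone_out(1280)  # RGB branch, 1280x1280 input
ir_size = backbone_out(160)    # infrared branch, 160x160 input

# Apply the three stride-2 transposed convolutions from self.upsample.
ir_upsampled = ir_size
for _ in range(3):
    ir_upsampled = conv_transpose_out(ir_upsampled)

print(rgb_size, ir_size, ir_upsampled)  # 80 10 80
```

Under these assumptions the two branches do line up at 80x80 before the concatenation, which agrees with the printed sizes.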

The full stacktrace looks as follows:

    Traceback (most recent call last):
      File "train_Ensemble.py", line 205, in <module>
        trainer.train()
      File "train_Ensemble.py", line 152, in train
        self.validation_epoch(-1)
      File "train_Ensemble.py", line 96, in validation_epoch
        self.dataloader_train, self.model, self.loss_criterion, which_gpu=self.which_gpu, ensemble_learning=True
      File "/home/thaikari/mmdetection/tdt_4265_code/utils.py", line 74, in compute_loss_and_accuracy
        output_probs = model(X_batch_rgb, X_batch_infrared)
      File "/home/thaikari/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/nn/modules/module.py", line 541, in __call__
        result = self.forward(*input, **kwargs)
      File "/home/thaikari/mmdetection/tdt_4265_code/resnet.py", line 135, in forward
        x = self.classifer(x)
      File "/home/thaikari/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/nn/modules/module.py", line 541, in __call__
        result = self.forward(*input, **kwargs)
      File "/home/thaikari/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/nn/modules/linear.py", line 87, in forward
        return F.linear(input, self.weight, self.bias)
      File "/home/thaikari/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/nn/functional.py", line 1372, in linear
        output = input.matmul(weight.t())
    RuntimeError: size mismatch, m1: [1536 x 1], m2: [512 x 9] at /opt/conda/conda-bld/pytorch_1570910687650/work/aten/src/THC/generic/THCTensorMathBlas.cu:290
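
One possible reading of the shapes in this error: `m1: [1536 x 1]` is 3 * 512 rows with a last dimension of 1, which is what a `[3, 512, 1, 1]` tensor looks like to a linear layer that only acts on the last dimension. If the wrapped model is a torchvision ResNet, its own `forward` flattens between `avgpool` and `fc`, and that step is not part of `layer4`, `avgpool` or `fc` themselves. The sketch below reproduces the situation in isolation; `avgpool`, `fc` and the random `features` tensor are stand-ins, with the 512 and 9 taken from `m2: [512 x 9]`.

```python
import torch
import torch.nn as nn

# Stand-ins for the tail of the model: global average pooling followed by
# a 512 -> 9 fully connected layer (sizes taken from the error message).
avgpool = nn.AdaptiveAvgPool2d((1, 1))
fc = nn.Linear(512, 9)

features = torch.randn(3, 512, 3, 3)  # batch of 3, stand-in for a layer4 output
pooled = avgpool(features)            # [3, 512, 1, 1], like the 'x head' print

# nn.Linear multiplies along the last dimension, which has size 1 here, not 512.
try:
    fc(pooled)
    unflattened_ok = True
except RuntimeError:
    unflattened_ok = False

# Flattening everything after the batch dimension gives [3, 512].
flat = torch.flatten(pooled, 1)
logits = fc(flat)

print(unflattened_ok, tuple(flat.shape), tuple(logits.shape))
# False (3, 512) (3, 9)
```

If this is indeed the cause, adding `x = torch.flatten(x, 1)` between `self.model_Head(x)` and `self.classifer(x)` in `forward` would be the corresponding change.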