Hello,

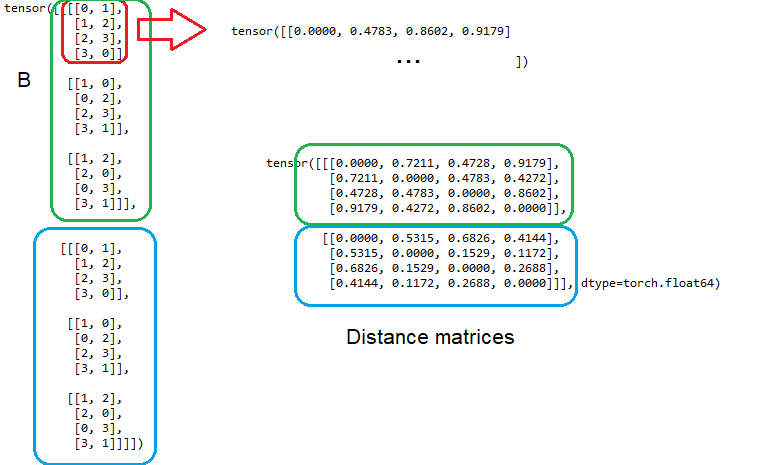

I am currently implementing a Q-learning algorithm for a routing problem, and I have run into a problem I don't know how to solve. I have a 3D tensor that holds multiple 2D distance matrices (so batchsize x coord1 x coord2). I also have a second tensor “B” that holds batches of sequences of indices. Each row in each batch of “B” consists of index tuples, and each tuple refers to one cell of the distance matrix in the same batch. The shape of “B” is therefore batchsize x rows x ntuples x 2. For each row in “B” (which consists of “n” tuples), I want to select the corresponding entries from the distance matrix of the same batch. I don't think torch.gather can help me here.
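A minimal sketch of one common approach, PyTorch advanced indexing, assuming the distance matrices are a float tensor `D` of shape `(batchsize, n1, n2)` and “B” is an integer (`torch.long`) tensor of shape `(batchsize, rows, ntuples, 2)`. The names `D`, `select_distances` and the toy sizes are hypothetical. The idea is to add a batch index that broadcasts against the two coordinate tensors, so every tuple in batch `b` reads from `D[b]`:

```python
import torch

def select_distances(D: torch.Tensor, B: torch.Tensor) -> torch.Tensor:
    """Look up D[b, i, j] for every index pair (i, j) stored in B[b, r, t].

    D: (batch, n1, n2) distance matrices (hypothetical name)
    B: (batch, rows, ntuples, 2) integer index pairs
    returns: (batch, rows, ntuples)
    """
    batch = D.shape[0]
    # Batch index of shape (batch, 1, 1): it broadcasts against B[..., 0] and
    # B[..., 1] (both (batch, rows, ntuples)), so tuples in batch b only read D[b].
    batch_idx = torch.arange(batch, device=D.device).view(batch, 1, 1)
    return D[batch_idx, B[..., 0], B[..., 1]]

# Hypothetical toy data: batch size 2, 4x4 distance matrices, 3 rows of 2 tuples each.
D = torch.arange(2 * 4 * 4, dtype=torch.float32).view(2, 4, 4)
B = torch.randint(0, 4, (2, 3, 2, 2))
out = select_distances(D, B)
print(out.shape)  # torch.Size([2, 3, 2])
```

The three index tensors are broadcast to a common shape `(batchsize, rows, ntuples)`, which is exactly the shape of the result.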

A small example with a batch size of 2 could look like this:

Basically, each list of tuples should turn into a flat list of values from the corresponding distance matrix, so the output shape is batchsize x rows x ntuples, since each index tuple resolves to exactly one entry of the distance matrix. It is important that each batch of “B” accesses the correct distance matrix, i.e. the one at the same batch index. I hope it is clear what I want to achieve.
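If `torch.gather` is preferred, one possible workaround (a sketch, not tested against the original data) is to flatten each distance matrix and convert every `(i, j)` tuple into a single row-major index `i * n2 + j`. `gather` then only has to work along one dimension, and because dim 0 is not gathered over, batch `b` of “B” can only read from batch `b` of the distances. The names `D` and `select_distances_gather` and the toy sizes are hypothetical:

```python
import torch

def select_distances_gather(D: torch.Tensor, B: torch.Tensor) -> torch.Tensor:
    """Same lookup as D[b, B[b, r, t, 0], B[b, r, t, 1]], done with torch.gather.

    D: (batch, n1, n2), B: (batch, rows, ntuples, 2) -> (batch, rows, ntuples)
    """
    batch, n1, n2 = D.shape
    _, rows, ntuples, _ = B.shape
    # Convert each (i, j) pair into a linear index into the row-major n1*n2 matrix.
    flat_idx = B[..., 0] * n2 + B[..., 1]                    # (batch, rows, ntuples)
    # Gather along dim 1 of the flattened matrices; dim 0 (batch) stays aligned.
    flat = torch.gather(D.reshape(batch, n1 * n2), 1,
                        flat_idx.reshape(batch, rows * ntuples))
    # Restore the (batch, rows, ntuples) layout.
    return flat.reshape(batch, rows, ntuples)

# Hypothetical toy data: batch size 2, 5x5 distance matrices, 3 rows of 4 tuples each.
D = torch.rand(2, 5, 5)
B = torch.randint(0, 5, (2, 3, 4, 2))
out = select_distances_gather(D, B)
print(out.shape)  # torch.Size([2, 3, 4])
```

The result has shape batchsize x rows x ntuples, with one distance per index tuple.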

Any ideas or help on how to solve this would be much appreciated!

Thank you in advance.