I am new to PyTorch. I am trying to replicate a simple Keras LSTM model in PyTorch. Both models take in exactly the same data, but the PyTorch implementation produces significantly worse results.

In my toy project, I am doing time series prediction on the Google stock price: the past 60 days of prices are used to predict the next Open price.
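The windowing described above can be sketched as follows. This is a minimal sketch, assuming the Open prices are already a 1-D array (scaled or not); `make_windows` is a hypothetical helper, not the code from the Kaggle kernel. It produces the `(samples, 60, 1)` layout that `input_shape=(X_train.shape[1], 1)` in the Keras model expects.

```python
import numpy as np

def make_windows(prices, window=60):
    """Hypothetical helper: split a 1-D series into (past `window` prices, next price) pairs."""
    prices = np.asarray(prices, dtype=np.float32)
    X, y = [], []
    for t in range(window, len(prices)):
        X.append(prices[t - window:t])  # the `window` prices before step t
        y.append(prices[t])             # the price at step t (the target)
    X = np.array(X)[..., np.newaxis]    # (samples, window, 1): one feature per time step
    y = np.array(y)                     # (samples,)
    return X, y

# Usage with a made-up series of 100 "prices"
X, y = make_windows(np.arange(100), window=60)
print(X.shape, y.shape)  # (40, 60, 1) (40,)
```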

The complete code is available in the Kaggle kernel "Buggy Pytorch Model".

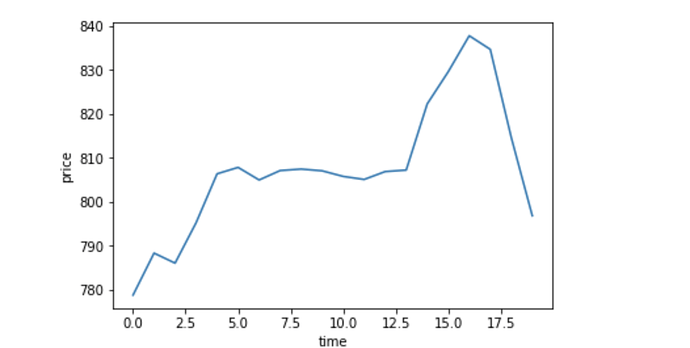

Ground truth (Google stock price, January 2017)

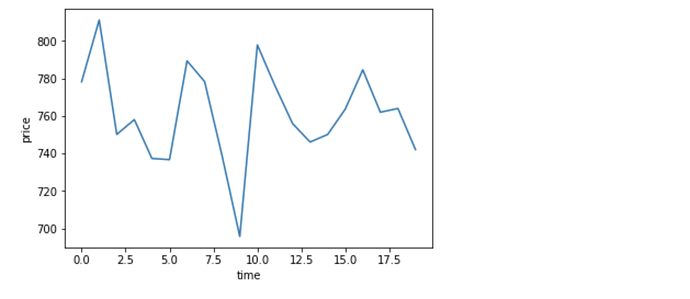

I would like to know what mistakes or misconfigurations I made in my PyTorch implementation, and why I am not able to reproduce the Keras model's results in my PyTorch code. Thanks for the help!

Keras model:

```python
from keras.models import Sequential
from keras.layers import LSTM, Dropout, Dense

# X_train: (samples, 60, 1) windows of past prices; y_train: the next Open price
regressor = Sequential()
regressor.add(LSTM(units=50, return_sequences=True, input_shape=(X_train.shape[1], 1)))
regressor.add(Dropout(0.2))
regressor.add(LSTM(units=50, return_sequences=True))
regressor.add(Dropout(0.2))
regressor.add(LSTM(units=50, return_sequences=True))
regressor.add(Dropout(0.2))
regressor.add(LSTM(units=50))  # last LSTM returns only the final time step
regressor.add(Dropout(0.2))
regressor.add(Dense(units=1))

regressor.compile(optimizer="adam", loss="mean_squared_error")
regressor.summary()
regressor.fit(X_train, y_train, epochs=100, batch_size=32)
```
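Keras's `fit` does two things implicitly that a PyTorch `DataLoader` has to be told to do: it reshuffles the training samples at the start of every epoch (`shuffle=True` is the default) and it batches them (`batch_size=32` here). The post does not show how `dl` is built in the kernel, so whether it shuffles is unknown. Below is a minimal sketch of a loader that mirrors the Keras call, assuming `X_train` and `y_train` are NumPy arrays with the shapes in the comments; random stand-ins are used so the snippet runs on its own.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-ins for the real arrays: X_train (samples, 60, 1), y_train (samples,)
X_train = np.random.rand(256, 60, 1).astype(np.float32)
y_train = np.random.rand(256).astype(np.float32)

dataset = TensorDataset(
    torch.from_numpy(X_train),
    torch.from_numpy(y_train).unsqueeze(1),  # targets as (samples, 1) to match a (batch, 1) output
)
# Keras's fit() reshuffles every epoch by default; DataLoader only does so with shuffle=True
dl = DataLoader(dataset, batch_size=32, shuffle=True)

xb, yb = next(iter(dl))
print(xb.shape, yb.shape)  # torch.Size([32, 60, 1]) torch.Size([32, 1])
```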

PyTorch model and training loop:

```python
import torch.nn as nn
import torch.optim as optim

def my_training_loop(m, dl, epochs):
    opt = optim.Adam(m.parameters())
    crit = nn.MSELoss()
    for epoch in range(epochs):
        accu_loss = 0
        batch_count = 0
        for i, (train_x, train_y) in enumerate(dl):
            # torch.autograd.Variable is deprecated; plain tensors work with autograd
            x = train_x.cuda()
            y = train_y.cuda()
            opt.zero_grad()
            preds = m(x)
            loss = crit(preds, y)
            accu_loss += loss.item()
            batch_count += 1
            loss.backward()
            opt.step()
        # mean training loss over all batches in this epoch
        print(f'Epoch: {epoch}. Loss: {accu_loss/batch_count}')
```
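One thing worth ruling out in this loop is the shape of `y`. With `output_dim=1`, `preds` has shape `(batch, 1)`. If `train_y` arrives from the DataLoader as `(batch,)` (an assumption, since the post does not show how `dl` is built), `nn.MSELoss` broadcasts the two to `(batch, batch)` and averages the error of every prediction against every target in the batch, emitting only a warning. A small self-contained demonstration:

```python
import warnings
import torch
import torch.nn as nn

crit = nn.MSELoss()
preds = torch.tensor([[1.0], [2.0], [3.0], [4.0]])  # model output: (batch, 1), perfect predictions
y_flat = torch.tensor([1.0, 2.0, 3.0, 4.0])         # target: (batch,)

with warnings.catch_warnings():
    warnings.simplefilter("ignore")         # PyTorch warns about the size mismatch
    broadcast_loss = crit(preds, y_flat)    # broadcasts to (4, 4): every pred vs every target

matched_loss = crit(preds, y_flat.view_as(preds))  # element-wise on (4, 1)

print(broadcast_loss.item(), matched_loss.item())  # 2.5 0.0
```

Even with perfect predictions the broadcast loss is non-zero; minimizing it would push every prediction toward the batch mean of the targets. Reshaping the target, for example `y = train_y.cuda().view_as(preds)`, keeps the comparison element-wise.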

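```python
import torch
import torch.nn as nn

class MyLSTM(nn.Module):
    def __init__(self, input_dim, hidden_dim, layer_dim, output_dim):
        super().__init__()
        self.layer_dim = layer_dim
        self.hidden_dim = hidden_dim
        # dropout=0.2 is applied to the outputs of every LSTM layer except the last
        self.lstm = nn.LSTM(input_size=input_dim, hidden_size=hidden_dim,
                            num_layers=layer_dim, bias=True, batch_first=True, dropout=0.2)
        # dropout on the last layer's output, like the final Dropout in the Keras model
        self.dropout = nn.Dropout(p=0.2)
        self.fc = nn.Linear(in_features=hidden_dim, out_features=output_dim)

    def forward(self, x):
        # zero initial hidden and cell states: (num_layers, batch, hidden_dim)
        h0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        c0 = torch.zeros(self.layer_dim, x.size(0), self.hidden_dim, device=x.device)
        o, h = self.lstm(x, (h0, c0))
        # keep only the last time step: (batch, seq_len, hidden) -> (batch, hidden)
        o = self.fc(self.dropout(o[:, -1, :]))
        return o
```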
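Another difference that neither listing shows is weight initialization. With default arguments, Keras's `LSTM` uses Glorot-uniform input kernels, orthogonal recurrent kernels, zero biases, and a forget-gate bias of 1 (`unit_forget_bias=True`), whereas `nn.LSTM` draws every weight and bias from U(-1/sqrt(hidden_size), 1/sqrt(hidden_size)). Below is a minimal sketch of a hypothetical helper that approximates the Keras defaults; it could be applied as `init_lstm_like_keras(model.lstm)` after constructing `MyLSTM`. Whether this alone closes the gap in this case is not established.

```python
import torch
import torch.nn as nn

def init_lstm_like_keras(lstm: nn.LSTM) -> None:
    """Hypothetical helper: re-initialize an nn.LSTM roughly like Keras's LSTM defaults."""
    hidden = lstm.hidden_size
    for name, param in lstm.named_parameters():
        if name.startswith("weight_ih"):
            nn.init.xavier_uniform_(param)  # Keras kernel_initializer='glorot_uniform'
        elif name.startswith("weight_hh"):
            nn.init.orthogonal_(param)      # Keras recurrent_initializer='orthogonal'
        elif name.startswith("bias"):
            nn.init.zeros_(param)           # Keras bias_initializer='zeros'
            if name.startswith("bias_ih"):
                # PyTorch gate order is (input, forget, cell, output). PyTorch has two bias
                # vectors, so set the forget slice to 1 only here (bias_hh stays 0),
                # mimicking Keras unit_forget_bias=True
                with torch.no_grad():
                    param[hidden:2 * hidden].fill_(1.0)

# Usage on a stand-alone LSTM shaped like the 4-layer, 50-unit Keras stack
lstm = nn.LSTM(input_size=1, hidden_size=50, num_layers=4, batch_first=True, dropout=0.2)
init_lstm_like_keras(lstm)
print(lstm.bias_ih_l0[50:100].unique())  # tensor([1.])
```

Keras's `Dense` layer likewise defaults to Glorot-uniform weights and a zero bias, while `nn.Linear` uses a different uniform scheme.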