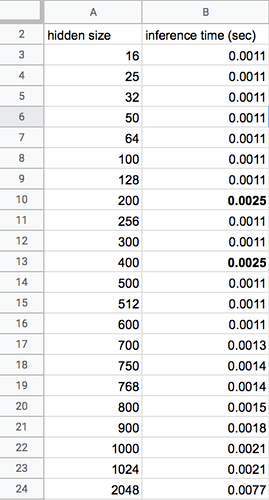

I was measuring GPU inference time for LSTMs of different sizes. It turns out that with hidden sizes of 200 and 400, inference is extremely slow, even much slower than with a hidden size of 700. Below is the code; the plot shows the relationship between hidden_size and the average per-sequence inference time (in seconds).
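To make "slower than a larger hidden size" precise, one can flag every hidden size whose average time exceeds that of at least one larger size. This is a minimal pure-Python sketch; the helper name `find_anomalous_sizes` and its input format (a dict mapping hidden size to the "avg time" value the benchmark prints) are assumptions, and the values in the usage line are made up for illustration, not measurements.

```python
def find_anomalous_sizes(avg_times):
    """Return hidden sizes whose average time exceeds that of some larger size.

    avg_times: dict mapping hidden_size -> average inference time (seconds).
    Hypothetical helper for reading the benchmark's printed output.
    """
    sizes = sorted(avg_times)
    anomalous = []
    for i, size in enumerate(sizes):
        larger = sizes[i + 1:]
        # Flag this size if any strictly larger size ran faster on average.
        if any(avg_times[other] < avg_times[size] for other in larger):
            anomalous.append(size)
    return anomalous


# Illustrative, made-up values (not measurements):
print(find_anomalous_sizes({100: 1.0, 200: 3.0, 700: 2.0}))  # prints [200]
```

With real numbers, the sizes this returns are the ones whose timing breaks the expected "bigger is slower" trend.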

Other info:

- GPU: 1× RTX 2070
- torch version: 1.6.0
- Eval setting: only 1 example per batch.

```python
import time

import numpy as np
import torch
import torch.nn as nn


class LSTMDummy(nn.Module):
    def __init__(self, embedding_size, input_size, hidden_size,
                 n_layers, dropout_p):
        super(LSTMDummy, self).__init__()
        self.embedding = nn.Embedding(embedding_size, input_size)
        self.lstm = nn.LSTM(input_size, hidden_size,
                            num_layers=n_layers,
                            dropout=dropout_p,
                            bidirectional=True,
                            batch_first=True)

    def forward(self, tensor):
        # Wait for pending GPU work so the timer starts from an idle device.
        torch.cuda.synchronize()
        start_time = time.time()
        lstm_input = self.embedding(tensor)
        output = self.lstm(lstm_input)
        # CUDA kernels run asynchronously; synchronize before stopping the timer.
        torch.cuda.synchronize()
        end_time = time.time()
        # Returns the elapsed time in seconds instead of the LSTM output.
        return end_time - start_time


class CheckGPUTensorMul:
    device = torch.device("cuda:0")
    n_exp = 10000
    sizes = [16, 25, 32, 50, 64, 100, 128, 200, 256, 300, 400, 500, 512,
             600, 700, 750, 768, 800, 900, 1000, 1024, 2048]
    sizes2 = [200, 750]

    @classmethod
    def calculate_time_lstm(cls):
        n_vocab = 10000
        seq_len = 20
        # n_exp = 10,000 single-sequence batches, each of shape (1, seq_len)
        input_tensors = [torch.tensor([[np.random.randint(0, n_vocab)] * seq_len],
                                      dtype=torch.int64).to(cls.device)
                         for i in range(cls.n_exp)]

        for size in cls.sizes:  # 16, 25, 32, 50, 64 ...
            time_list = []
            lstm_model = LSTMDummy(
                embedding_size=n_vocab,
                input_size=100,
                hidden_size=size,
                n_layers=1,
                dropout_p=0.0
            )
            lstm_model.to(cls.device)
            lstm_model.eval()

            # Warm up
            for input_tensor in input_tensors[:100]:
                time_ = lstm_model(input_tensor)

            for input_tensor in input_tensors:
                time_ = lstm_model(input_tensor)
                time_list.append(time_)

            print("=" * 40)
            print("size:", size)
            print("avg time:", sum(time_list) / len(time_list))


if __name__ == "__main__":
    CheckGPUTensorMul.calculate_time_lstm()
```
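As a cross-check on the per-call `time.time()` measurement above, the same comparison can be timed by averaging over many calls with CUDA events and running under `torch.no_grad()`. This is a minimal sketch under stated assumptions: the helper name `time_lstm` and its defaults are hypothetical, it uses random token ids rather than one repeated token (assumed not to affect timing), and it falls back to `time.perf_counter` when no GPU is available.

```python
import time

import torch
import torch.nn as nn


def time_lstm(hidden_size, input_size=100, seq_len=20, n_vocab=10000,
              n_warmup=10, n_iters=100, device=None):
    """Mean forward time (seconds) of a 1-layer bidirectional LSTM, batch size 1.

    Hypothetical helper; mirrors the LSTMDummy setup from the post.
    """
    if device is None:
        device = "cuda" if torch.cuda.is_available() else "cpu"
    dev = torch.device(device)
    embedding = nn.Embedding(n_vocab, input_size).to(dev)
    lstm = nn.LSTM(input_size, hidden_size, num_layers=1,
                   bidirectional=True, batch_first=True).to(dev)
    embedding.eval()
    lstm.eval()
    # One sequence per batch: token ids of shape (1, seq_len).
    tokens = torch.randint(0, n_vocab, (1, seq_len), device=dev)

    def step():
        lstm(embedding(tokens))

    with torch.no_grad():  # inference only: do not build the autograd graph
        for _ in range(n_warmup):
            step()

        if dev.type == "cuda":
            start = torch.cuda.Event(enable_timing=True)
            end = torch.cuda.Event(enable_timing=True)
            torch.cuda.synchronize()
            start.record()
            for _ in range(n_iters):
                step()
            end.record()
            torch.cuda.synchronize()  # make sure both events have completed
            # elapsed_time() reports milliseconds; convert to seconds per call.
            return start.elapsed_time(end) / 1000.0 / n_iters

        t0 = time.perf_counter()
        for _ in range(n_iters):
            step()
        return (time.perf_counter() - t0) / n_iters
```

For example, `{size: time_lstm(size) for size in [200, 400, 700]}` compares the three hidden sizes discussed above on whichever device is available. Timing a whole run of calls between two events avoids adding per-call host-side timer overhead to every measurement.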