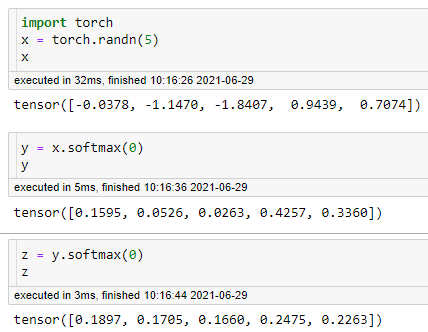

Do keep in mind that CrossEntropyLoss does a softmax for you. (It’s actually a LogSoftmax + NLLLoss combined into one function, see CrossEntropyLoss — PyTorch 1.9.0 documentation). Doing a Softmax activation before cross entropy is like doing it twice, which can cause the values to start to balance each other out as so:

As for your question, are you saying there’s no point in using softmax since your outputs without it are already between 0-1? I mean technically I guess you don’t, but using an activation function is a nice way to ensure bounds in case your network spits out something unexpected