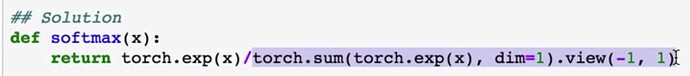

EXPLANATION:

The exercise is to implement a function softmax that performs the softmax calculation and returns probability distributions for each example in the batch. Note that you’ll need to pay attention to the shapes when doing this. If you have a tensor a with shape (64, 10) and a tensor b with shape (64,), doing a/b will give you an error because PyTorch will try to do the division across the columns (called broadcasting) but you’ll get a size mismatch. The way to think about this is that for each of the 64 examples, you only want to divide by one value, the sum in the denominator. So you need b to have a shape of (64, 1). This way PyTorch will divide the 10 values in each row of a by the one value in each row of b. Pay attention to how you take the sum as well. You’ll need to define the dim keyword in torch.sum. Setting dim=0 takes the sum across the rows (one sum per column), while dim=1 takes the sum across the columns (one sum per row).
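To make the shape rule concrete, here is a minimal sketch, assuming PyTorch is installed. The names exp_a, b_flat and b_col are illustrative and do not come from the course material. Broadcasting compares shapes starting from the last dimension, and two dimensions are compatible only if they are equal or one of them is 1. So (64, 10) against (64,) compares 10 with 64 and fails, while (64, 10) against (64, 1) stretches the 1 to 10 and gives an output of shape (64, 10).

```python
import torch

torch.manual_seed(0)  # fixed seed so the run is repeatable

# Hypothetical batch: 64 examples with 10 scores each.
a = torch.randn(64, 10)
exp_a = torch.exp(a)

# Sum over dim=1 (across the columns) gives one value per row.
b_flat = torch.sum(exp_a, dim=1)   # shape (64,)
b_col = b_flat.view(-1, 1)         # shape (64, 1)

# (64, 10) / (64,): trailing dimensions are 10 and 64, so broadcasting fails.
try:
    exp_a / b_flat
    flat_division_failed = False
except RuntimeError:
    flat_division_failed = True

# (64, 10) / (64, 1): the size-1 dimension is stretched to 10.
probs = exp_a / b_col              # shape (64, 10)

print(b_flat.shape, b_col.shape, probs.shape)
print("division by shape (64,) failed:", flat_division_failed)
print("rows sum to 1:", torch.allclose(probs.sum(dim=1), torch.ones(64)))
```

Passing keepdim=True to torch.sum should be an equivalent way to get the (64, 1) shape directly, without the separate view call.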

DOUBT:

If a has shape (64, 10) and b has shape (64,), why is a/b not defined? Why does the division work when b has shape (64, 1), and what will the shape of the output be?

Any resource that helps in understanding this concept would also be appreciated.

Thanks