Hi all,

While using szagoruyko's visualization tool, I noticed that, for certain functions, the computation graph contains more variables than the ones I defined. In particular, I'm referring to these two examples:

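import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

a = Variable(torch.rand(3))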

b = nn.Parameter(torch.rand(2, 3))

y = b.mv(a)

make_dot(y)

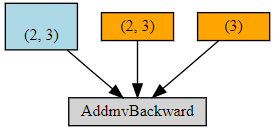

This one gives me this graph

Blue rectangles represent parameters, orange ones represent variables, and grey ones are operations. Another simple example is the following:

c = nn.Parameter(torch.rand(2))

z = F.linear(a, b, c)

make_dot(z)

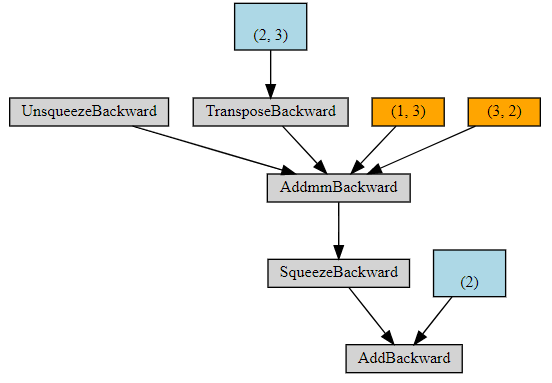

Which gives me the following graph

As you can see, each graph contains a variable that I did not define, of size (2, 3) in the first example and (3, 2) in the second, which is nonetheless used in the computation.

Does anyone know what these tensors are? Can they alter the gradients that I compute in a backward pass?
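For what it's worth, one way to check the second question empirically would be to compare the gradients autograd returns with the ones derived by hand. This is only a minimal sketch, assuming a recent PyTorch version where plain tensors can be used instead of `Variable`; using `z.sum()` as the loss and the name `grads_match` are my own choices for illustration:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

a = torch.rand(3)
b = nn.Parameter(torch.rand(2, 3))
c = nn.Parameter(torch.rand(2))

# Forward pass through F.linear, then backward on a scalar loss.
z = F.linear(a, b, c)
z.sum().backward()

# For z = b @ a + c and loss = z.sum(), the hand-derived gradients are:
#   d(loss)/db[i, j] = a[j]  (every row of b.grad equals a)
#   d(loss)/dc[i]    = 1
expected_b_grad = a.expand(2, 3)
expected_c_grad = torch.ones(2)

grads_match = (torch.allclose(b.grad, expected_b_grad)
               and torch.allclose(c.grad, expected_c_grad))
print(grads_match)
```

If `grads_match` comes out true, the extra nodes in the graph would not be changing the gradients in this case, although that alone does not explain what they are.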

Thanks in advance