Ok. Something to bear in mind. I’m not sure that is exactly my situation though, although it could be; but I have multiple layers, and they are all dropping to zero.

I’m still adding instrumentation, to try to figure out what’s going on.

Something that I just found out, that is curious, though points towards a nan issue at the base, followed by some other unexpected behavior on the top, is that if I take .abs().max() over the weights, this stays non nan, until it drops to zero at some point. However, .abs().sum(), becomes nan at some point. I reckon what’s happening is:

- at least one weight probably is becoming

nan, combined iwth

- somehow,

nan isn’t propagating through .max()

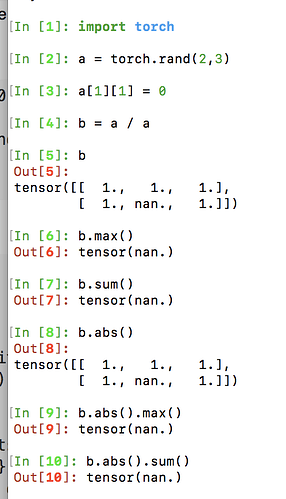

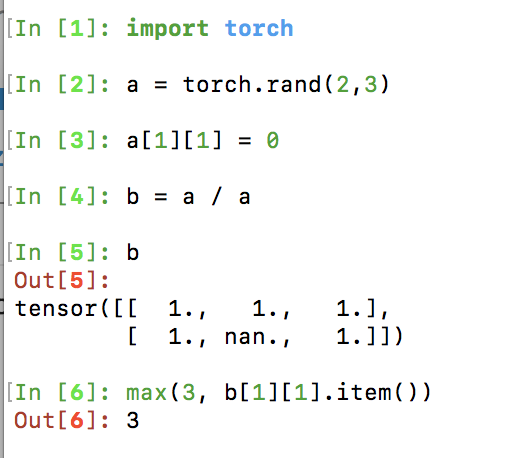

On the other hand, initial experimentation shows that .max() does propagate nans, so there’s something else going on. But I suspect nan is at the base of my actual issue somewhere.

what my code outputs, at the time of crashing out:

weight_abs_max=0.809 nonzeros=2926 absavg=0.127

...

weights look strange weight_abs_max=0.441 nonzeros=2926 absavg=nan

weights_sum nan

non_zero_weights 2926

The checking code:

abs_max = 0

non_zero_weights = 0

weights_sum = 0

for _p in params:

abs_max = max(abs_max, _p.abs().max().item())

_non_zero_weights = _p.nonzero().size()[0]

non_zero_weights += _non_zero_weights

weights_sum += _p.abs().sum().item()

weights_avg = weights_sum / non_zero_weights

res_string = f'weight_abs_max={abs_max:.3f} nonzeros={non_zero_weights} absavg={weights_avg:.3f}'

if abs_max < 1e-8 or non_zero_weights == 0 or weights_avg != weights_avg or math.isnan(weights_avg):

print('weights look strange', res_string)

print('weights_sum', weights_sum)

print('non_zero_weights', non_zero_weights)

if self.dumper is not None:

self.dumper.dump(objects_to_dump)

raise Exception('weights abs max < 1e-8')

Edit, oh yes, and evidence that, normally .max does in fact propagate nans:

I mean, it’ll take several thousand steps, without being zero. then Bam! all zeros )

I mean, it’ll take several thousand steps, without being zero. then Bam! all zeros )

)

)