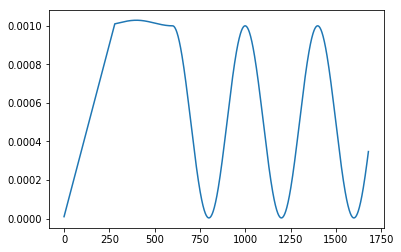

I tried to combine two lr adjustment methods: first increase the lr from min_lr to max_lr with LambdaLR (warm-up), then apply CosineAnnealingLR. But the learning rate does not look like what I expected. It shows a kind of 'plateau', as in the plot above.

My code is below:

```python
import torch
import matplotlib.pyplot as plt
from torch.optim import lr_scheduler

model = torch.nn.Linear(10, 1)  # placeholder model, stands in for the real one

n_epochs = 10
# n_iter = len(train_loader)
n_iter = 28

max_lr, min_lr = 1e-3, 1e-5
base_lr = max_lr / (n_epochs * n_iter)
bias = min_lr * (1 / base_lr)
lr_ls = []

optimizer = torch.optim.Adam(model.parameters(), lr=base_lr)
lambda1 = lambda x: bias + x
scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)

j = 0
for epoch in range(n_epochs):
    for _ in range(n_iter):
        j += 1
        lr_ls.append(scheduler.get_last_lr()[0])
        optimizer.step()
        scheduler.step(j)

n_epochs = 50
scheduler = lr_scheduler.CosineAnnealingLR(optimizer, T_max=200, eta_min=max_lr, last_epoch=j)

for epoch in range(n_epochs):
    for _ in range(n_iter):
        j += 1
        lr_ls.append(scheduler.get_last_lr()[0])
        optimizer.step()
        scheduler.step()

plt.plot(lr_ls)
```
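The warm-up half of this schedule can be checked without PyTorch. LambdaLR sets the lr to the optimizer's initial lr times `lr_lambda(step)`, so with `lr_lambda = bias + x` the lr grows linearly as `min_lr + step * max_lr / (n_epochs * n_iter)`. The sketch below just recomputes that formula in plain Python; `warmup_lr` is a hypothetical helper, not part of PyTorch.

```python
# Plain-Python check of the LambdaLR warm-up used above (no PyTorch needed).
n_epochs, n_iter = 10, 28
max_lr, min_lr = 1e-3, 1e-5
base_lr = max_lr / (n_epochs * n_iter)  # lr the optimizer was created with
bias = min_lr * (1 / base_lr)           # chosen so that base_lr * bias == min_lr

def warmup_lr(step):
    """Hypothetical helper: the lr LambdaLR sets at a given step."""
    # LambdaLR: lr = initial lr * lr_lambda(step), with lr_lambda(x) = bias + x
    return base_lr * (bias + step)

total = n_epochs * n_iter
for step in (0, total // 2, total):
    print(step, warmup_lr(step))
```

So the lr starts at min_lr and, after the last `scheduler.step(j)` with `j = n_epochs * n_iter`, sits at about min_lr + max_lr (roughly 1.01e-3), slightly above max_lr.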

Actually, I suspect I don't fully understand the CosineAnnealingLR parameters, maybe last_epoch.

Would you help me out?
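For reference, here is how CosineAnnealingLR's parameters fit together, as a plain-Python sketch of the closed-form formula given in the PyTorch documentation (`cosine_lr` is a hypothetical helper, not a PyTorch function). `eta_min` is the floor of the cosine; the ceiling is the scheduler's base lr, which PyTorch takes from each param group's `'initial_lr'` entry. `last_epoch` is the index of the last step already taken: with the default -1 the scheduler records the current lr as `'initial_lr'` if none is stored yet, and with any other value it assumes it is resuming and expects `'initial_lr'` to exist already (in the question, LambdaLR had stored the small `base_lr` there). Passing `eta_min=max_lr` therefore swaps the intended bounds.

```python
import math

def cosine_lr(t_cur, eta_max, eta_min, t_max):
    """Hypothetical helper: closed-form cosine annealing lr at step t_cur."""
    # eta_max is the scheduler's base lr (from param_group['initial_lr']),
    # eta_min is the floor; the lr moves from eta_max (t_cur=0) to eta_min (t_cur=t_max)
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + math.cos(math.pi * t_cur / t_max))

max_lr, min_lr = 1e-3, 1e-5
initial_lr = max_lr / (10 * 28)  # what LambdaLR stored as 'initial_lr' in the question

# Intended: anneal from max_lr down to min_lr over T_max=200 steps
intended = [cosine_lr(t, max_lr, min_lr, 200) for t in range(201)]
# As requested in the question: base lr = tiny initial_lr, eta_min = max_lr (bounds swapped)
requested = [cosine_lr(t, initial_lr, max_lr, 200) for t in range(201)]
```

This only evaluates the closed form; the scheduler in the question was stepped incrementally starting from `last_epoch=j`, so its plotted curve need not match these values exactly.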

I solved this problem by myself.

TL;DR: I needed to create the optimizer again, initialized with the last lr of the previous optimizer:

```python
base_lr = optimizer.param_groups[0]['lr']
optimizer = torch.optim.Adam(model.parameters(), lr=base_lr)
```
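Why recreating the optimizer helps: when a PyTorch scheduler is constructed with `last_epoch=-1`, it stores each param group's current lr under `'initial_lr'` using `dict.setdefault`, and takes its base lrs from that key. Because `setdefault` never overwrites, a second scheduler attached to the same optimizer inherits the `'initial_lr'` left by the first one (the small warm-up start value), not the warmed-up lr. A freshly created optimizer has no `'initial_lr'`, so the new scheduler starts from the current lr. The sketch below imitates that bookkeeping with plain dicts; `attach_scheduler` is a hypothetical stand-in, not PyTorch code.

```python
def attach_scheduler(param_groups):
    """Hypothetical stand-in for what a scheduler's __init__ does with last_epoch=-1."""
    for group in param_groups:
        group.setdefault("initial_lr", group["lr"])  # only set if missing
    return [group["initial_lr"] for group in param_groups]  # the scheduler's base lrs

warmup_start, warmup_end = 1e-3 / 280, 1.01e-3

# Same optimizer reused: the first scheduler already stored 'initial_lr'
groups = [{"lr": warmup_start}]
first_base = attach_scheduler(groups)   # LambdaLR sees the small start lr
groups[0]["lr"] = warmup_end            # the warm-up has raised the lr
reused_base = attach_scheduler(groups)  # second scheduler still sees warmup_start

# New optimizer: fresh param groups without 'initial_lr'
fresh_base = attach_scheduler([{"lr": warmup_end}])
```

Note that recreating the optimizer also discards Adam's running moment estimates. If that matters, overwriting `group['initial_lr'] = group['lr']` for each param group of the existing optimizer before building the second scheduler (with the default `last_epoch=-1`) should have the same effect on the schedule while keeping the optimizer state.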

Please refer to the correction:

```python
import torch
import matplotlib.pyplot as plt
from torch.optim import lr_scheduler

model = torch.nn.Linear(10, 1)  # placeholder model, stands in for the real one

n_epochs = 10
# n_iter = len(train_loader)
n_iter = 28

max_lr, min_lr = 1e-3, 1e-5
base_lr = max_lr / (n_epochs * n_iter)
bias = min_lr * (1 / base_lr)
lr_ls = []

optimizer = torch.optim.Adam(model.parameters(), lr=base_lr)
lambda1 = lambda x: bias + x  # linear warm-up: lr = base_lr * (bias + step)
scheduler = lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)

j = 0
for epoch in range(n_epochs):
    for _ in range(n_iter):
        j += 1
        lr_ls.append(scheduler.get_last_lr()[0])
        optimizer.step()
        scheduler.step(j)

##################################### this part
# Re-create the optimizer starting from the warmed-up lr, so the next
# scheduler does not inherit the 'initial_lr' stored by LambdaLR
base_lr = optimizer.param_groups[0]['lr']
optimizer = torch.optim.Adam(model.parameters(), lr=base_lr)
#####################################

n_epochs = 50
# scheduler = lr_scheduler.CosineAnnealingLR(optimizer, T_max=200, eta_min=0, last_epoch=-1)
scheduler = lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0=200, T_mult=2, eta_min=min_lr)

for epoch in range(n_epochs):
    for _ in range(n_iter):
        j += 1
        lr_ls.append(scheduler.get_last_lr()[0])
        optimizer.step()
        scheduler.step()

plt.plot(lr_ls, alpha=0.5)
plt.scatter(range(len(lr_ls)), lr_ls, s=0.3, c='r')
```
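With `CosineAnnealingWarmRestarts(T_0=200, T_mult=2)`, the first cosine cycle lasts 200 steps and each following cycle is twice as long (400, 800, ...), so over the 50 * 28 = 1400 steps of the second loop the lr restarts at steps 200 and 600. The sketch below reproduces that per-step rule from the PyTorch documentation in plain Python; `warm_restarts_lr` is a hypothetical helper, and `start_lr` assumes the warm-up ended at about min_lr + max_lr.

```python
import math

def warm_restarts_lr(step, base_lr, eta_min, t_0, t_mult):
    """Hypothetical helper: lr of a cosine schedule with warm restarts at `step`."""
    t_i, t_cur = t_0, step
    # Skip over the completed cycles; each new cycle is t_mult times longer
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t_cur / t_i))

max_lr, min_lr = 1e-3, 1e-5
start_lr = min_lr + max_lr  # assumed lr at the end of the warm-up
lrs = [warm_restarts_lr(s, start_lr, min_lr, t_0=200, t_mult=2) for s in range(50 * 28)]

# Steps where the lr jumps back up, i.e. the warm restarts
restarts = [s for s in range(1, len(lrs)) if lrs[s] > lrs[s - 1]]
print(restarts)  # → [200, 600]
```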