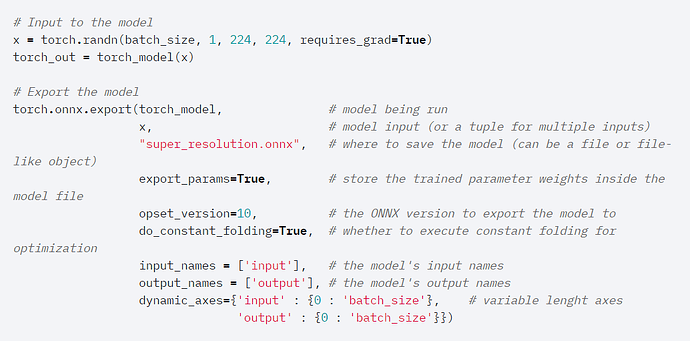

I am using PyTorch to convert a PyTorch model to an ONNX model. When I call `torch.onnx.export`, I see that its second parameter requires the model inputs (`x  # model input (or a tuple for multiple inputs)`).

What value should I pass for this parameter? Is it required, and if so, why?

I think tracing is used under the hood, so an example input is necessary to run the trace and create the graph that is then exported as the ONNX model.

So `torch.onnx.export` performs the trace to produce the ONNX model (`super_resolution.onnx`)? But I don't have to provide any input when using onnxruntime to save a model? Is this a necessary step only for converting from PyTorch to ONNX?

I'm not deeply familiar with onnxruntime and don't know how it works under the hood.

I assumed it's just a runtime/serving platform that provides engines to execute models, but I might be wrong.