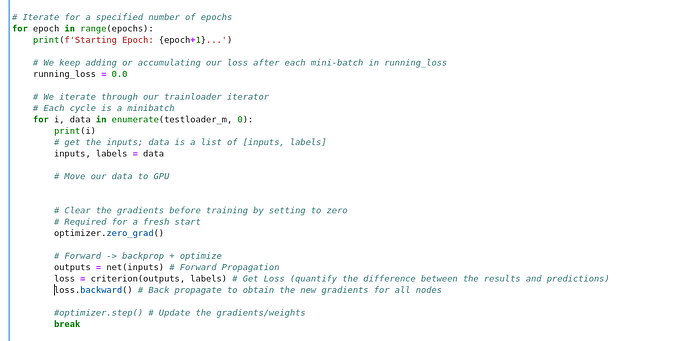

I need to get the gradient of the loss function with respect to the weights of a neural network while training it.

Hi,

After loss.backward(), you could loop over the net instance’s parameters and extract their grad attribute, like so:

for param in net.parameters():
    print(param.grad)
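For a fuller picture, here is a minimal sketch of the same idea with parameter names attached. The tiny `nn.Linear` model, the random data, and the MSE loss are placeholders I made up for illustration, not anything from your setup. It uses `named_parameters()` so you can tell which gradient belongs to which weight.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical tiny network and random data, only for illustration.
net = nn.Linear(3, 1)
inputs = torch.randn(4, 3)
targets = torch.randn(4, 1)

loss = nn.functional.mse_loss(net(inputs), targets)

# Before backward(), .grad is None for every parameter.
grads_before = [p.grad for p in net.parameters()]

loss.backward()

# After backward(), each .grad has the same shape as its parameter.
# detach().clone() keeps a copy that later steps will not overwrite.
grads = {name: p.grad.detach().clone() for name, p in net.named_parameters()}
for name, g in grads.items():
    print(name, tuple(g.shape), g.norm().item())
```

Keep in mind that gradients accumulate across `backward()` calls, so in a training loop you would typically read them after `loss.backward()` and before the next `optimizer.zero_grad()`.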
