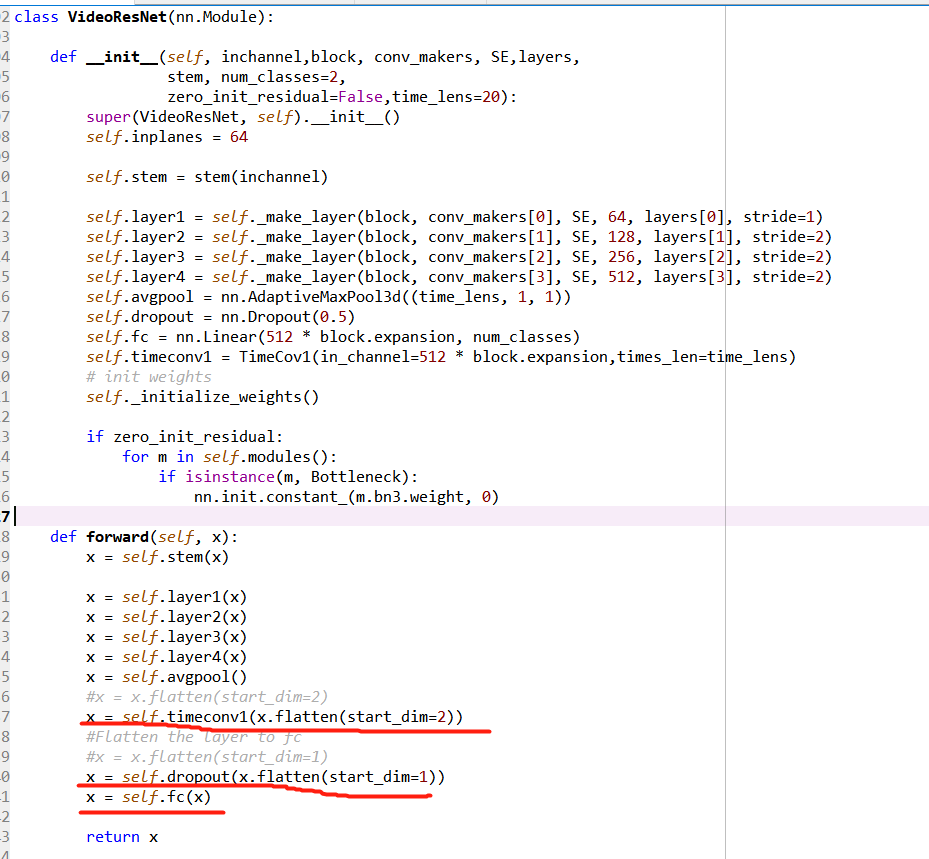

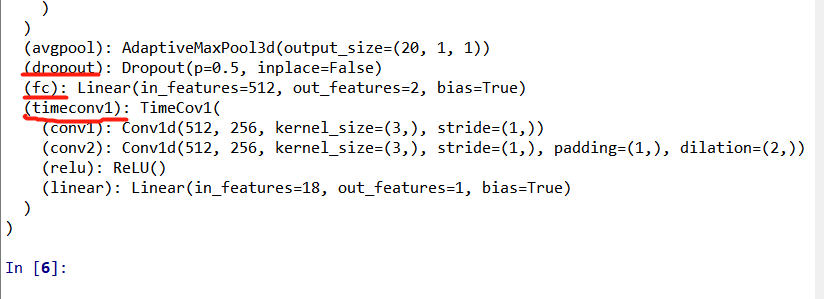

I have used PyTorch for my own neural network, but the model doesn't look the way I want it to. Its architecture doesn't match what I wrote in forward(); it just mirrors the definitions in __init__. I don't know what is happening.

Hello,

When you print your model, nn.Module's __repr__ method is called (PyTorch repo). It goes through all the submodules registered on the model (the ones you assigned in your __init__ function) and builds the string you see, in registration order. That is how it is meant to work, so nothing is wrong with PyTorch: as defined in your forward function, timeconv1 is still applied before dropout.
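A minimal sketch of this behaviour, assuming a hypothetical model whose layer names (`timeconv1`, `dropout`) mirror the ones in the question but whose shapes are made up for illustration. The printed structure follows the `__init__` order, while `forward` runs the layers in a different order:

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical model: layers are registered in one order, executed in another."""

    def __init__(self):
        super().__init__()
        # Registered first, but applied second in forward().
        self.dropout = nn.Dropout(p=0.5)
        # Registered second, but applied first in forward().
        self.timeconv1 = nn.Conv1d(in_channels=1, out_channels=4, kernel_size=3)

    def forward(self, x):
        x = self.timeconv1(x)
        return self.dropout(x)


model = Net()
print(model)  # __repr__ lists dropout before timeconv1 (registration order)

# named_children() exposes the same registration order that __repr__ uses.
registered = [name for name, _ in model.named_children()]
print(registered)

# The forward pass still applies timeconv1 first: (N, C, L) = (2, 1, 10) -> (2, 4, 8).
out = model(torch.randn(2, 1, 10))
print(out.shape)
```

The print order is purely cosmetic; only the code in `forward` decides what actually runs.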


Thank you! I also have another question.

I said something wrong earlier. When I use nn.Sequential(*list(model.children())[:-2]) to keep everything except the last two layers, the result follows the order in __init__ instead of the order I wrote in the forward function, and I don't know why.

model.children() returns the child modules in the order they were registered in __init__.

Since you are wrapping them in an nn.Sequential container, that registration order becomes the order of execution.
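A small sketch of why the `nn.Sequential(*list(model.children()))` trick goes wrong, assuming a hypothetical model (`fc1`, `fc2`, `relu` are illustrative names, not from the original post) whose layers are registered in a different order than `forward` uses them:

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical model: registration order differs from execution order."""

    def __init__(self):
        super().__init__()
        self.fc2 = nn.Linear(8, 2)  # registered 1st, executed 3rd
        self.fc1 = nn.Linear(4, 8)  # registered 2nd, executed 1st
        self.relu = nn.ReLU()       # registered 3rd, executed 2nd

    def forward(self, x):
        return self.fc2(self.relu(self.fc1(x)))


model = Net()
x = torch.randn(3, 4)
print(model(x).shape)  # the real forward pass works: fc1 -> relu -> fc2

# children() yields modules in registration order: fc2, fc1, relu.
children = list(model.children())

# Slicing [:-2] keeps only the first *registered* layer (fc2),
# not the first *executed* one (fc1).
prefix = children[:-2]

# Wrapping all children in nn.Sequential runs them in registration order,
# so fc2 (expects 8 features) receives the 4-feature input and fails.
seq = nn.Sequential(*children)
sequential_failed = False
try:
    seq(x)
except RuntimeError as err:
    sequential_failed = True
    print("Sequential failed:", err)
```

Here the mismatch surfaces as a shape error; with layers whose shapes happen to line up, the wrong order would run silently.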

As @beaupreda said, printing the model will also give you the initialized layers, not the actual forward pass.
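If you want to see the order the forward pass actually uses, one option (not mentioned in the thread, so treat it as a suggestion) is `torch.fx.symbolic_trace`, which records the modules called by `forward`. A minimal sketch, assuming a hypothetical model traceable by FX (no data-dependent control flow):

```python
import torch.nn as nn
from torch.fx import symbolic_trace


class Net(nn.Module):
    """Hypothetical model: registered as fc2, fc1, relu; executed as fc1, relu, fc2."""

    def __init__(self):
        super().__init__()
        self.fc2 = nn.Linear(8, 2)
        self.fc1 = nn.Linear(4, 8)
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.fc2(self.relu(self.fc1(x)))


model = Net()

# What print(model) and children() reflect: registration order.
registered = [name for name, _ in model.named_children()]

# What forward() actually does: trace it and keep only submodule calls.
traced = symbolic_trace(model)
executed = [node.target for node in traced.graph.nodes if node.op == "call_module"]

print("registered:", registered)
print("executed:  ", executed)
```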

This is not the case for nn.Sequential modules, as the order of passed modules is kept in the forward pass.
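As a sketch of the contrast, assuming a hypothetical three-layer network: when the model is itself an `nn.Sequential`, the printed order, the execution order, and slicing all agree, so `model[:-1]` really is "everything except the last layer":

```python
import torch
import torch.nn as nn

# Hypothetical network built directly as nn.Sequential:
# the order the modules are passed in IS the execution order.
model = nn.Sequential(
    nn.Linear(4, 8),
    nn.ReLU(),
    nn.Linear(8, 2),
)
print(model)  # printed in the same order forward() runs them

# Slicing an nn.Sequential returns a new nn.Sequential that keeps that order.
trunk = model[:-1]
x = torch.randn(3, 4)
features = trunk(x)  # Linear(4, 8) -> ReLU, stopping before the last layer
print(features.shape)
```

Grouping the layers you want to reuse into an `nn.Sequential` inside `__init__` is one way to make the printed structure and the forward pass line up.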

Thank you so much. Now I will try a different approach to get the structure I want.