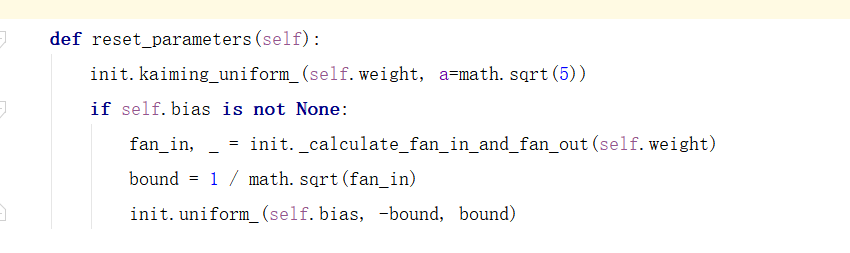

In the official documentation, the weights of the Linear layer are initialized from U(-sqrt(k), sqrt(k)) with k = 1/in_features, but I found a different formula in linear.py, as shown in the picture. I want to know why it uses `a=math.sqrt(5)` when I choose ReLU as the activation function. Why?

The initialization is the same as in the docs. The implementation simply uses the kaiming_uniform_ function (see the torch.nn.init page of the PyTorch 1.7.0 documentation) and passes the right parameter to get a uniform distribution on (-1/sqrt(in_features), 1/sqrt(in_features)). Kaiming uniform initialization samples from U(-bound, bound) with bound = gain * sqrt(3 / fan_in), so there is an extra factor of sqrt(3). To cancel it, they've made the gain 1/sqrt(3) using

`gain = torch.nn.init.calculate_gain(nonlinearity='leaky_relu', param=math.sqrt(5))`
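Here is a minimal pure-Python sketch of that arithmetic. It assumes kaiming_uniform_ in its default `mode='fan_in'` computes bound = sqrt(3) * gain / sqrt(fan_in), and that `calculate_gain('leaky_relu', a)` returns sqrt(2 / (1 + a**2)). The helper names `leaky_relu_gain` and `kaiming_uniform_bound` are hypothetical. They only mirror those formulas and do not call PyTorch.

```python
import math


def leaky_relu_gain(negative_slope: float) -> float:
    # Mirrors the formula calculate_gain uses for nonlinearity='leaky_relu'
    return math.sqrt(2.0 / (1 + negative_slope ** 2))


def kaiming_uniform_bound(fan_in: int, a: float) -> float:
    # Mirrors kaiming_uniform_ with mode='fan_in':
    # std = gain / sqrt(fan_in), bound = sqrt(3) * std
    std = leaky_relu_gain(a) / math.sqrt(fan_in)
    return math.sqrt(3.0) * std


# With a = sqrt(5) the gain is 1/sqrt(3), so the sqrt(3) cancels
print(leaky_relu_gain(math.sqrt(5)), 1 / math.sqrt(3))
for fan_in in (16, 128, 1000):
    # The Kaiming bound and the documented 1/sqrt(in_features) agree
    print(fan_in, kaiming_uniform_bound(fan_in, math.sqrt(5)), 1 / math.sqrt(fan_in))
```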

See pytorch/init.py at commit 4ff1823fac8833d4bdfe333c49fd486143175395 in the pytorch/pytorch repository on GitHub.

It seems like a workaround to avoid writing another function that would duplicate code already in kaiming_uniform_.

I understand the operation of initializing weights. What I can't understand is that the docs (torch.nn.Linear) say the weights of the Linear layer are initialized using 1/in_features, but linear.py uses kaiming_uniform_, which you can find at this link: https://github.com/pytorch/pytorch/blob/4ff1823fac8833d4bdfe333c49fd486143175395/torch/nn/modules/linear.py#L35

And the weirdest thing for me is the call init.kaiming_uniform_(self.weight, a=math.sqrt(5)), which means the weights of the fully connected layer are initialized by default with the formula corresponding to LeakyReLU. I want to know why it uses a=math.sqrt(5) instead of a negative_slope chosen by the user.

Hey, I realise now that my earlier answer wasn't clear. They've used Kaiming uniform initialisation, yes, but they've chosen the parameter a so that the gain is 1/sqrt(3). They do that with the calculate_gain function (pytorch/init.py at commit 4ff1823fac8833d4bdfe333c49fd486143175395 on GitHub). With nonlinearity='leaky_relu', the gain is math.sqrt(2.0 / (1 + param ** 2)). Choosing a = param = math.sqrt(5) gives sqrt(2 / 6) = 1/sqrt(3), which cancels the sqrt(3) in the Kaiming uniform bound and leaves 1/sqrt(fan_in).
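The same cancellation written out, with a the negative slope passed to kaiming_uniform_ and fan_in = in_features (assuming the default `mode='fan_in'`):

```latex
\begin{aligned}
\text{gain}(a) &= \sqrt{\frac{2}{1 + a^2}}, &
\text{gain}(\sqrt{5}) &= \sqrt{\frac{2}{6}} = \frac{1}{\sqrt{3}}, \\
\text{bound} &= \text{gain}\cdot\sqrt{\frac{3}{\text{fan\_in}}}, &
\text{bound}\big|_{a=\sqrt{5}} &= \frac{1}{\sqrt{3}}\cdot\frac{\sqrt{3}}{\sqrt{\text{fan\_in}}} = \frac{1}{\sqrt{\text{fan\_in}}}.
\end{aligned}
```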

Maybe I didn't make my question clear. I understand how Kaiming initialization works. What I don't understand is why, when I use ReLU as the activation function, the fully connected layer still uses the default scheme, init.kaiming_uniform_(self.weight, a=math.sqrt(5)), to initialize its weights, which is different from its docs. I have also checked resnet.py in torchvision (vision/resnet.py at commit 146dd8556c4f3b2ad21fad9312f4602cd049d6da on GitHub): ReLU is used as the activation function there, but the FC layer is also initialized with the default.

I think the initialization parameter a should be set to 0 when ReLU is the activation function, but here it is sqrt(5), which is meant for LeakyReLU.
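To see how far apart the two choices are in scale, here is a pure-Python comparison. It again assumes kaiming_uniform_'s default `mode='fan_in'` formula, and the function names are hypothetical. It uses the fact that U(-b, b) has variance b²/3. With a = 0 the weights have variance 2/fan_in (He initialization for ReLU), while the default a = sqrt(5) gives 1/(3·fan_in).

```python
import math


def kaiming_uniform_bound(fan_in: int, a: float) -> float:
    # bound = sqrt(3) * gain / sqrt(fan_in), gain = sqrt(2 / (1 + a**2))
    gain = math.sqrt(2.0 / (1 + a ** 2))
    return math.sqrt(3.0) * gain / math.sqrt(fan_in)


def uniform_variance(bound: float) -> float:
    # Variance of U(-bound, bound)
    return bound ** 2 / 3


fan_in = 512
relu_bound = kaiming_uniform_bound(fan_in, a=0.0)              # sqrt(6 / fan_in)
default_bound = kaiming_uniform_bound(fan_in, a=math.sqrt(5))  # 1 / sqrt(fan_in)

print(relu_bound / default_bound)               # ~2.449, i.e. sqrt(6)
print(uniform_variance(relu_bound) * fan_in)     # ~2.0
print(uniform_variance(default_bound) * fan_in)  # ~0.333, i.e. 1/3
```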

The call is init.kaiming_uniform_(self.weight, a=math.sqrt(5)) in linear.py. See pytorch/linear.py at commit 4ff1823fac8833d4bdfe333c49fd486143175395 on GitHub.

The Linear class has no knowledge of the activation that follows it, so its reset_parameters function has to be universal. It is also invoked from the constructor and therefore takes no arguments. You're supposed to re-initialize the parameters manually after construction if the defaults don't work well for your network.
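If the defaults don't suit a ReLU network, here is a minimal sketch of manual re-initialization after construction. The helper `init_linear_for_relu` and the choice to zero the biases are assumptions for illustration; nn.Linear does not do either itself. `nn.init.kaiming_uniform_` with `nonlinearity='relu'` uses gain sqrt(2), which gives bound sqrt(6 / fan_in).

```python
import math

import torch
from torch import nn


def init_linear_for_relu(layer: nn.Linear) -> None:
    # Hypothetical helper: Kaiming/He uniform for ReLU (gain = sqrt(2)),
    # i.e. U(-sqrt(6 / fan_in), sqrt(6 / fan_in)) with fan_in = in_features
    nn.init.kaiming_uniform_(layer.weight, nonlinearity="relu")
    if layer.bias is not None:
        # Assumption: zero biases (nn.Linear's default draws them uniformly)
        nn.init.zeros_(layer.bias)


torch.manual_seed(0)
model = nn.Sequential(nn.Linear(128, 64), nn.ReLU(), nn.Linear(64, 10))

# Only re-initialize Linear layers directly followed by a ReLU;
# the output layer keeps PyTorch's default initialization.
layers = list(model)
for layer, nxt in zip(layers, layers[1:]):
    if isinstance(layer, nn.Linear) and isinstance(nxt, nn.ReLU):
        init_linear_for_relu(layer)

print(model[0].weight.abs().max().item(), math.sqrt(6 / 128))
```

Doing this after construction keeps nn.Linear itself activation-agnostic, which is the design the reply above describes.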

As @googlebot mentions, this initialization is universal and is invoked in the constructor of every Linear layer. The nonlinearity argument passed to kaiming_uniform_ is not based on the nonlinearity that follows the Linear layer. The whole idea is to use kaiming_uniform_ to get the uniform initialization we want by passing the right set of arguments (a and nonlinearity).
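A quick check that the default really matches the documented U(-1/sqrt(in_features), 1/sqrt(in_features)). This sketch assumes nn.Linear draws its weight from the default random generator before anything else, so re-seeding replays the same stream. The layer sizes and seed are arbitrary.

```python
import math

import torch
from torch import nn

in_features, out_features = 100, 20

# Default construction: reset_parameters() calls
# init.kaiming_uniform_(self.weight, a=math.sqrt(5)) internally
torch.manual_seed(0)
layer = nn.Linear(in_features, out_features)

# Replay the same RNG stream, sampling directly from the documented
# U(-1/sqrt(in_features), 1/sqrt(in_features))
torch.manual_seed(0)
bound = 1.0 / math.sqrt(in_features)
direct = torch.empty(out_features, in_features).uniform_(-bound, bound)

# Equal up to floating-point rounding in how the bound is computed
print(torch.allclose(layer.weight, direct))
```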

You can think of this as the PyTorch devs trying to avoid writing code that duplicates statements in kaiming_uniform_.

Thank you very much for your replies. I understand it now.