Hi

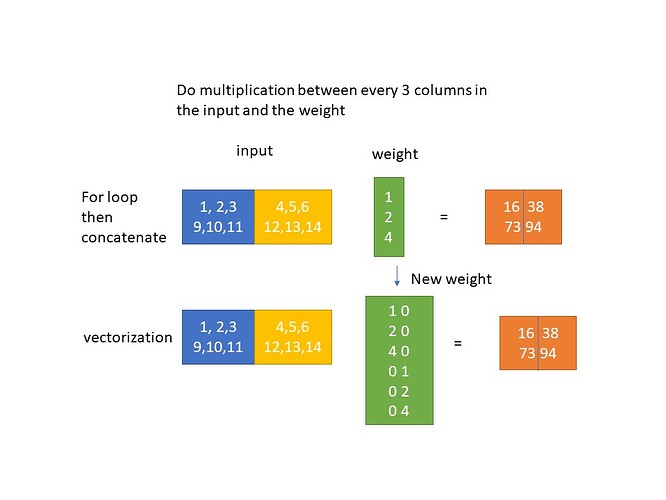

I have an input tensor of shape n*p, where p = k*q. In other words, the p columns form q groups of k consecutive feature columns.

I also have a weight tensor of shape k*1. Currently I use a for loop to multiply each group of k columns of the input by this weight, but it is slow.
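For reference, here is a minimal NumPy sketch of the loop described above. It assumes the k columns of each group are consecutive and the weight has shape (k, 1), and `grouped_matmul_loop` is a hypothetical name. Each group produces one output column, so the result has shape (n, q):

```python
import numpy as np

def grouped_matmul_loop(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Multiply each group of k consecutive columns of x by w, one group at a time.

    x: shape (n, p) with p = k * q; w: shape (k, 1). Returns shape (n, q).
    """
    n, p = x.shape
    k = w.shape[0]
    if p % k != 0:
        raise ValueError("p must be a multiple of k")
    q = p // k
    out = np.empty((n, q), dtype=np.result_type(x, w))
    for j in range(q):
        # Columns j*k .. (j+1)*k - 1 form group j; (n, k) @ (k, 1) -> (n, 1)
        out[:, j] = (x[:, j * k:(j + 1) * k] @ w)[:, 0]
    return out

x = np.arange(12, dtype=float).reshape(2, 6)  # n = 2, p = 6
w = np.array([[1.0], [2.0], [4.0]])            # k = 3, so q = 2
y = grouped_matmul_loop(x, w)                  # [[10, 31], [52, 73]]
```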

I think it should be possible to vectorize this loop to speed it up. Where I got stuck is converting the weight tensor into a p*q tensor, which has a specific pattern (see the image above).
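This sketch assumes the pattern in the image is block-diagonal, which is what reproducing the loop with a single matmul requires: column j of the p*q tensor holds the k weights in rows j*k to (j+1)*k - 1 and zeros elsewhere. Under that assumption the expanded weight is the Kronecker product of a q*q identity matrix with the k*1 weight, so no loop is needed. A minimal NumPy sketch, where `expand_weight` is a hypothetical name:

```python
import numpy as np

def expand_weight(w: np.ndarray, q: int) -> np.ndarray:
    """Return a (k*q, q) block-diagonal matrix with the (k, 1) weight w in each diagonal block."""
    # np.kron(I_q, w): block (a, b) equals I_q[a, b] * w,
    # so column j holds w in rows j*k .. (j+1)*k - 1 and zeros elsewhere
    return np.kron(np.eye(q, dtype=w.dtype), w)

w = np.array([[1.0], [2.0], [4.0]])            # k = 3
W = expand_weight(w, q=2)                      # shape (6, 2)
x = np.arange(12, dtype=float).reshape(2, 6)  # n = 2, p = 6
y = x @ W                                      # shape (2, 2): one output column per group
```

Only p of the p*q entries of `W` are nonzero. For large q, the dense matrix wastes memory and multiply-adds compared with reshaping the input instead.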

An alternative is to reshape the input and keep the weight's shape unchanged, but then I need to reshape the product back. So I am still thinking about how to reorganize the weight; maybe I should use a binary mask.
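Below is a minimal NumPy sketch of both ideas, assuming the groups are consecutive columns. The first function reshapes the input to (n, q, k) so that the unchanged k*1 weight can be applied in one batched matmul. The only "reshape back" needed afterwards is dropping a trailing axis of size 1. The second function shows one way to build the p*q weight with a binary mask applied to a tiled copy of w. Both function names are hypothetical.

```python
import numpy as np

def grouped_matmul_reshape(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """x: (n, p) with p = k*q; w: (k, 1). Returns (n, q) without building a p*q weight."""
    n, p = x.shape
    k = w.shape[0]
    # Row-major reshape: columns j*k .. (j+1)*k - 1 of x become x3[:, j, :]
    x3 = x.reshape(n, p // k, k)
    # (n, q, k) @ (k, 1) -> (n, q, 1); dropping the last axis gives (n, q)
    return (x3 @ w)[:, :, 0]

def expand_weight_with_mask(w: np.ndarray, q: int) -> np.ndarray:
    """Build the (k*q, q) weight by masking q stacked copies of w with a 0/1 block mask."""
    k = w.shape[0]
    # mask[r, j] == 1 exactly when row r belongs to group j
    mask = np.kron(np.eye(q), np.ones((k, 1)))
    # np.tile(w, (q, 1)) has shape (k*q, 1) and broadcasts across the q columns of mask
    return mask * np.tile(w, (q, 1))

x = np.arange(12, dtype=float).reshape(2, 6)  # n = 2, p = 6
w = np.array([[1.0], [2.0], [4.0]])            # k = 3, so q = 2
y_reshape = grouped_matmul_reshape(x, w)
y_mask = x @ expand_weight_with_mask(w, q=2)
# both equal [[10, 31], [52, 73]]
```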

Does this solve your loop problem? I guess that when the shape of w1 goes from (m, 1) to (k*m, k) (here m = 3 and k = 2), it is hard to avoid a loop.

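```python
import numpy as np

# Original weight: a column vector of shape (3, 1)
w1 = np.asarray([1, 2, 4])
w1.shape = (3, 1)

# Expanded weight of shape (2*3, 2), initially all zeros
new_w = np.zeros((2 * w1.shape[0], 2))

# Column 0 holds w1 in the first 3 rows; column 1 holds w1 in the last 3 rows
new_w[:w1.shape[0], 0] = w1[:, 0]
new_w[w1.shape[0]:, 1] = w1[:, 0]
```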

Thanks for your reply. In your solution, you created new_w and assigned the original weight to specific locations. I can do it this way.

Another concern is whether I can still use SGD to update the original weight after creating a new weight matrix from it.
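In principle, yes. The expanded matrix is a linear function of the original weight, so by the chain rule the gradient with respect to the k*1 weight is the sum of the gradients of the q diagonal blocks that hold its copies. The update is then applied to the original weight only. An autograd framework such as PyTorch performs this summation automatically, provided the expanded matrix is rebuilt from the original weight with differentiable operations in every forward pass rather than created once and stored. The NumPy sketch below does the same computation by hand. It assumes a mean-squared-error loss, synthetic data, and full-batch gradient steps standing in for SGD, and `loss_and_grad` is a hypothetical name.

```python
import numpy as np

def loss_and_grad(x, w, target, q):
    """MSE loss of y = x @ kron(I_q, w) and its gradient w.r.t. the original (k, 1) weight w."""
    k = w.shape[0]
    W = np.kron(np.eye(q), w)      # expanded (k*q, q) weight, rebuilt from w on every call
    y = x @ W                      # (n, q)
    diff = y - target
    loss = np.mean(diff ** 2)
    dy = 2.0 * diff / diff.size    # dL/dy, shape (n, q)
    dW = x.T @ dy                  # dL/dW, shape (k*q, q)
    # Chain rule through W: copy j of w sits in block (j, j), so sum the q diagonal blocks.
    # dW3[a, i, b] = dW[a*k + i, b]; np.diagonal over axes 0 and 2 gives shape (k, q).
    dW3 = dW.reshape(q, k, q)
    dw = np.diagonal(dW3, axis1=0, axis2=2).sum(axis=1, keepdims=True)  # (k, 1)
    return loss, dw

rng = np.random.default_rng(0)
n, k, q = 50, 3, 2
x = rng.normal(size=(n, k * q))
w_true = np.array([[1.0], [2.0], [4.0]])
target = x @ np.kron(np.eye(q), w_true)  # synthetic targets generated from w_true

w = np.zeros((k, 1))                     # the original k*1 weight is the only parameter
lr = 0.1
for step in range(200):
    loss, dw = loss_and_grad(x, w, target, q)
    w -= lr * dw                         # gradient step applied to the original weight
```

Because the synthetic targets come from `w_true`, `w` should end up very close to it. This indicates that updating the original weight through the expanded matrix works as intended.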