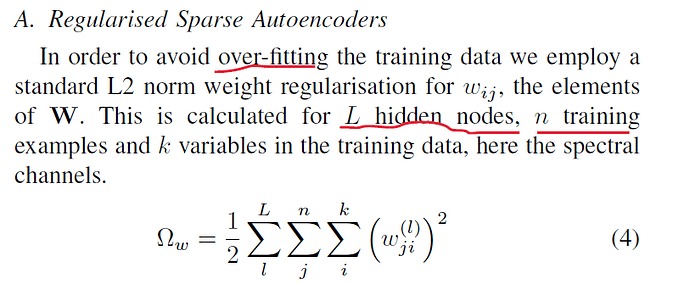

How can I perform this L2 norm weight regularisation in the following VAE network?

    import torch
    import torch.nn as nn

    # Assumed definition: `device` is used below but was not defined in the snippet.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


    class VAE_msi(nn.Module):
        def __init__(self, inputsize, latent_dims):
            super(VAE_msi, self).__init__()
            self.N = torch.distributions.Normal(0, 1)
            # Encoder layers
            self.layer_en1 = self.layer_(inputsize, 512)
            self.layer_en_xtra = self.layer_(512, 512)
            self.layer_en2 = self.layer_(512, latent_dims)  # produces mu
            self.layer_en3 = self.layer_(512, latent_dims)  # produces sigma (used as log-variance)
            # Decoder layers
            self.layer_de1 = self.layer_(latent_dims, 512)
            self.layer_de_xtra = self.layer_(512, 512)
            self.layer_de2 = self.layer_(512, inputsize, relu=False)
            self.N.loc = self.N.loc.to(device)  # hack to get sampling on the GPU
            self.N.scale = self.N.scale.to(device)
            self.dropout = nn.Dropout(0.5)
            # self.kl = 0

        def layer_(self, inputsize, outputsize, relu=True):
            # Linear -> BatchNorm, optionally followed by ReLU
            if relu:
                layer = nn.Sequential(
                    nn.Linear(in_features=inputsize, out_features=outputsize),
                    nn.BatchNorm1d(num_features=outputsize),
                    nn.ReLU()
                )
            else:
                layer = nn.Sequential(
                    nn.Linear(in_features=inputsize, out_features=outputsize),
                    nn.BatchNorm1d(num_features=outputsize)
                )
            return layer

        def forward(self, spec):
            # ------- encoder -------#
            spec = self.dropout(spec)
            spec = self.layer_en1(spec)
            spec = self.layer_en_xtra(spec)
            mu = self.layer_en2(spec)
            sigma = self.layer_en3(spec)
            # Reparameterisation trick: sigma is treated as log-variance here
            std = torch.exp(0.5 * sigma)
            eps = torch.randn_like(std)
            z = mu + eps * std
            # ------- decoder -------#
            z = self.dropout(z)
            z = self.layer_de1(z)
            z = self.layer_de_xtra(z)
            spec_hat = torch.sigmoid(self.layer_de2(z))
            return spec_hat, mu, sigma
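For concreteness, here is a minimal sketch of one way an L2 weight penalty could be added to the loss of a network like the one above. It rests on several assumptions that may not match the formula in the images: the penalty is taken as `l2_lambda` times the sum of squared entries of every `nn.Linear` weight matrix (biases and BatchNorm parameters excluded), the reconstruction term is binary cross-entropy (chosen only because the decoder ends in a sigmoid), and the `sigma` output is interpreted as log-variance, as the `torch.exp(0.5 * sigma)` line suggests. The names `l2_weight_penalty`, `vae_loss_with_l2`, and `l2_lambda` are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def l2_weight_penalty(model: nn.Module) -> torch.Tensor:
    """Sum of squared entries over all nn.Linear weight matrices in `model`.

    Assumption: only Linear weights are penalised; biases and BatchNorm
    parameters are left out.
    """
    terms = [
        m.weight.pow(2).sum()
        for m in model.modules()
        if isinstance(m, nn.Linear)
    ]
    return torch.stack(terms).sum()


def vae_loss_with_l2(model, spec, spec_hat, mu, logvar, l2_lambda=1e-4):
    """Reconstruction + KL + l2_lambda * L2 penalty (hypothetical helper)."""
    # Assumed reconstruction term: BCE, which requires spec values in [0, 1].
    recon = F.binary_cross_entropy(spec_hat, spec, reduction="sum")
    # KL divergence between N(mu, exp(logvar)) and the standard normal prior.
    kl = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
    # The penalty is built from the live parameters, so it stays in the
    # autograd graph and contributes to the gradients on backward().
    return recon + kl + l2_lambda * l2_weight_penalty(model)
```

In a training step this might be called as `loss = vae_loss_with_l2(model, spec, *model(spec))` followed by `loss.backward()`. A simpler alternative is the `weight_decay` argument of the PyTorch optimizers (for example `torch.optim.Adam(model.parameters(), weight_decay=1e-4)`), which has a similar effect on the gradients but applies to every parameter passed in, including biases and BatchNorm parameters, unless parameter groups are used to exclude them.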

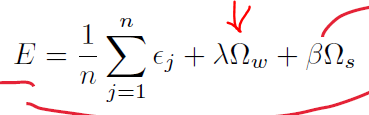

How do I include this weight regularisation in the cost function as: