@mrshenli I'm facing a similar issue. When I run everything on one machine, or on a single cloud platform such as Azure (so all nodes are on the same subnet), init_rpc works fine. But when I run the server (rank 0) on one cloud platform and rank 1 on a different cloud platform, it throws the exception “RuntimeError: Gloo connectFullMesh failed with Connection reset by peer”. I can ping the server from the worker and vice versa. I even tried tunneling both connections through a VPN server, but I get the same error. How do I solve this?

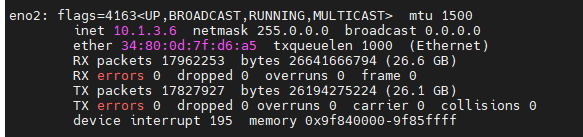

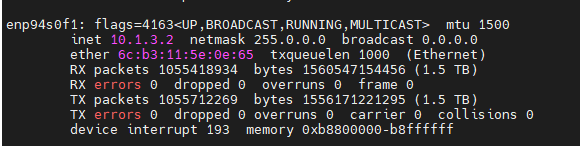

rank0

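    import os

    import torch
    import torch.distributed.rpc as rpc

    # Rendezvous address and port of rank 0; both ranks must use the same values.
    os.environ['MASTER_ADDR'] = '100.8.0.5'
    os.environ['MASTER_PORT'] = '3332'

    rpc.init_rpc("worker0", rank=0, world_size=2)
    # Run torch.add on worker1 and block until the result comes back.
    ret = rpc.rpc_sync("worker1", torch.add, args=(torch.ones(2), 3))
    rpc.shutdown()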

rank1

    import os

    # Show which network interface Gloo has been told to use (None if unset).
    print(os.environ.get('GLOO_SOCKET_IFNAME'))

    # Same rendezvous address and port as rank 0.
    os.environ['MASTER_ADDR'] = '100.8.0.5'
    os.environ['MASTER_PORT'] = '3332'
    #os.environ['GLOO_SOCKET_IFNAME']='nonexist'
    #print(os.environ.get('GLOO_SOCKET_IFNAME'))

    import torch.distributed.rpc as rpc

    rpc.init_rpc("worker1", rank=1, world_size=2)
    rpc.shutdown()
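The commented-out `GLOO_SOCKET_IFNAME` lines above suggest the interface choice is one of the suspects. A minimal sketch for checking that an interface name actually exists on the host before exporting it; `pick_interface` is a hypothetical helper (not part of PyTorch), and `tun0` is only an assumed name for a VPN interface:

```python
import socket


def pick_interface(preferred: str) -> str:
    """Return `preferred` if that interface exists on this host, else raise ValueError."""
    # socket.if_nameindex() returns (index, name) pairs for the local interfaces.
    names = [name for _, name in socket.if_nameindex()]
    if preferred not in names:
        raise ValueError(f"{preferred!r} not found; available interfaces: {names}")
    return preferred


# Hypothetical usage, to be placed before rpc.init_rpc(...) on each rank:
#   import os
#   os.environ["GLOO_SOCKET_IFNAME"] = pick_interface("tun0")
```

If the TensorPipe backend is in use, it may also be worth setting `TP_SOCKET_IFNAME` to the same interface; treat that as an assumption to verify against the installed PyTorch version.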

error

    [E ProcessGroupGloo.cpp:138] Gloo connectFullMesh failed with […/third_party/gloo/gloo/transport/tcp/pair.cc:144] no error
    Traceback (most recent call last):
      File "/home/ubuntu/h_test.py", line 13, in <module>
        rpc.init_rpc("worker1", rank=1, world_size=2)
      File "/home/ubuntu/.local/lib/python3.10/site-packages/torch/distributed/rpc/__init__.py", line 200, in init_rpc
        _init_rpc_backend(backend, store, name, rank, world_size, rpc_backend_options)
      File "/home/ubuntu/.local/lib/python3.10/site-packages/torch/distributed/rpc/__init__.py", line 233, in _init_rpc_backend
        rpc_agent = backend_registry.init_backend(
      File "/home/ubuntu/.local/lib/python3.10/site-packages/torch/distributed/rpc/backend_registry.py", line 104, in init_backend
        return backend.value.init_backend_handler(*args, **kwargs)
      File "/home/ubuntu/.local/lib/python3.10/site-packages/torch/distributed/rpc/backend_registry.py", line 324, in _tensorpipe_init_backend_handler
        group = _init_process_group(store, rank, world_size)
      File "/home/ubuntu/.local/lib/python3.10/site-packages/torch/distributed/rpc/backend_registry.py", line 112, in _init_process_group
        group = dist.ProcessGroupGloo(store, rank, world_size, process_group_timeout)
    RuntimeError: Gloo connectFullMesh failed with […/third_party/gloo/gloo/transport/tcp/pair.cc:144] no error
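A successful ping only shows that ICMP gets through; it does not show that TCP connections can be opened between the two hosts. One thing worth checking is whether the worker can open a TCP connection to `MASTER_ADDR:MASTER_PORT` while rank 0 is waiting inside `init_rpc`. A minimal sketch using only the standard library; `can_connect` is a hypothetical helper, not a PyTorch API, and the default address and port are the ones from the scripts above:

```python
import os
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to (host, port) can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, resets, unreachable hosts, and timeouts.
        return False


if __name__ == "__main__":
    host = os.environ.get("MASTER_ADDR", "100.8.0.5")
    port = int(os.environ.get("MASTER_PORT", "3332"))
    print(f"TCP {host}:{port} reachable: {can_connect(host, port)}")
```

Even if this check passes, the Gloo full-mesh setup may still fail: as far as I understand, each rank also has to accept connections from its peers on other ports, so firewall or NAT rules between the two cloud platforms would need to allow TCP traffic in both directions.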