Hello, I am seeing strange behavior in the neural network output when I load custom images and pass them through the network. I expect one output vector per image, with as many entries as there are classes, but I get multiple output vectors for a single image. My network has 10 classes and is trained on the CIFAR10 dataset.

This is the output of the last layer of the network for two images:

```
C:\ProgramData\Anaconda3\envs\env3\python.exe C:/Users/Dejana/Box/Research/Projects/OOD-Activations/Test/test2.py
torch.Size([1, 3, 64, 64])
tensor([[ 2.0437, -1.3588,  1.1609,  0.8730,  0.2099, -2.9088, -0.6814,  1.2066,
          1.0654, -1.7905],
        [ 4.6609, -0.2636,  0.1245,  1.5712,  0.3961, -0.7396, -3.2168,  2.9341,
         -3.2016, -2.0086],
        [-0.5989, -2.4766,  0.7316,  3.6030, -0.0383,  2.3087,  3.0540, -3.0784,
         -0.5181, -3.2308],
        [-0.5472, -8.9718, -1.1429,  5.1866,  3.1074,  4.4514,  1.0680,  3.5349,
         -2.6262, -5.3872]], grad_fn=<AddmmBackward>)
tensor([[ 1.4245, -4.3988,  2.3064,  4.1217,  1.0324,  0.1579, -1.8399,  0.2804,
          1.5780, -4.6716],
        [ 0.7175, -2.7187,  4.3732,  1.6181, -0.5130,  0.1256, -0.5808,  1.7235,
         -3.3944, -1.6412],
        [-1.0284,  1.4488,  1.8022,  3.1178, -2.2113,  0.8049,  2.2102,  4.5608,
         -5.7092, -5.1130],
        [ 1.2146, -8.5995,  0.8800, 10.9451,  0.4852,  0.7585,  0.8278,  3.1131,
         -7.2019, -2.5458]], grad_fn=<AddmmBackward>)
```

Please see the code below. I used two different ways to get data from the DataLoader.

```python
import matplotlib.pyplot as plt
import torch
import torchvision
import torchvision.transforms as transforms

import Utils.densenetBlock as db

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

# testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform)
# testloader = torch.utils.data.DataLoader(testset, batch_size=1, shuffle=False, num_workers=0)

testset = torchvision.datasets.ImageFolder(root=r"..\Data\tiny-imagenet-10000\t1", transform=transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=1, shuffle=False, num_workers=0)

net = db.DenseNet3(100, 10)
# raw string so the backslashes in the Windows path are not read as escapes
net.load_state_dict(torch.load(r'..\Data\densenet10.pth', map_location=torch.device('cpu')), strict=False)
net.eval()

# first way: run every batch through the network
i = 0
for data in testloader:
    inputs, labels = data[0], data[1]
    output = net(inputs)
    print(output)
    i = i + 1

# second way: collect batches into a list, then take the first two
dataiter = iter(testloader)
# note: i % 9 is always < 9, so this condition keeps every batch
lst = [x for i, x in enumerate(dataiter) if i % 9 < 500]
lstiter = iter(lst)

j = 0
while j < 2:
    images1, labels1 = next(lstiter)
    outputs = net(images1)
    print(outputs)
    j = j + 1
```
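For the second way, a lighter option is to take only the first few batches with `itertools.islice`, so the whole dataset is not materialized into a list first. This is a minimal sketch; the random `TensorDataset` below is a stand-in (an assumption) for the `ImageFolder` dataset in the post.

```python
import itertools

import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in dataset (assumption for illustration): 20 random 3x64x64 images
# with integer labels, in place of the ImageFolder dataset from the post.
images = torch.randn(20, 3, 64, 64)
labels = torch.randint(0, 10, (20,))
loader = DataLoader(TensorDataset(images, labels), batch_size=1, shuffle=False)

# islice stops after two batches, so the remaining batches are never loaded.
first_two = list(itertools.islice(loader, 2))

for batch_images, batch_labels in first_two:
    print(batch_images.shape, batch_labels.shape)
```

Each printed line shows `torch.Size([1, 3, 64, 64]) torch.Size([1])`, i.e. one image and one label per batch.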

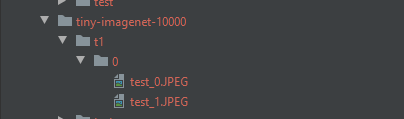

The datasets I am trying to test my network on are Tiny-ImageNet (the folder structure used for testing is shown in the image above) and ImageNet.

When I load CIFAR10 images, the output looks correct (one row of 10 values per image):

```
torch.Size([1, 3, 32, 32])
tensor([[ -3.7279,  -7.4436,  -5.9792,  40.7376,  -8.7128,   9.2873,   4.3494,
         -11.3954,  -3.0350, -14.5300]], grad_fn=<AddmmBackward>)
```
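The only visible difference between the two runs is the input size (32x32 vs 64x64), and the 64x64 run gives exactly 4 rows. A sketch of how that can happen, assuming `DenseNet3` follows the common CIFAR DenseNet-BC layout where the final feature map is average-pooled with a fixed 8x8 kernel and flattened with `view(-1, in_planes)` (the post does not show `Utils.densenetBlock`, so this head and the channel count are hypothetical):

```python
import torch
import torch.nn.functional as F

# Hypothetical classifier head (assumption): fixed 8x8 average pool, then
# view(-1, channels), which folds leftover spatial positions into the batch dim.
channels, num_classes = 342, 10  # assumed final channel count
fc = torch.nn.Linear(channels, num_classes)

def head(features: torch.Tensor) -> torch.Tensor:
    out = F.avg_pool2d(features, 8)   # 8x8 -> 1x1, but 16x16 -> 2x2
    out = out.view(-1, channels)      # (1, C, 2, 2) becomes (4, C)
    return fc(out)

def head_adaptive(features: torch.Tensor) -> torch.Tensor:
    out = F.adaptive_avg_pool2d(features, 1)  # always 1x1 spatially
    out = torch.flatten(out, 1)               # keep the batch dimension
    return fc(out)

# Feature maps after two 2x downsamplings of a 32x32 and a 64x64 input.
cifar_like = torch.randn(1, channels, 8, 8)
tiny_like = torch.randn(1, channels, 16, 16)

print(head(cifar_like).shape)          # torch.Size([1, 10])
print(head(tiny_like).shape)           # torch.Size([4, 10])
print(head_adaptive(tiny_like).shape)  # torch.Size([1, 10])
```

Under this assumption the extra rows are not per-position predictions either: `view` regroups the memory layout, so each row mixes channels from different positions. Adaptive pooling keeps one row per image, but since the weights were trained on 32x32 inputs, resizing the images is likely the safer choice.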

Thank you.