I wrote a short script using Conv2d and got a strange result.

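```python
import torch
import torch.nn as nn
import numpy as np

torch.random.manual_seed(1)

a = [1 ** i for i in range(9)]  # nine ones
npx = np.array(a, dtype=np.float32).reshape(1, 1, 3, 3)
t = torch.FloatTensor(npx)  # 1x1x3x3 input, all ones

c = nn.Conv2d(1, 1, 1)  # 1 input channel, 1 output channel, 1x1 kernel
# (c.weight > 0) >= 0 is always true, so this sets the single weight to 1.0
c.weight = nn.Parameter(((c.weight > 0) >= 0).float())

print(c(t))
```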

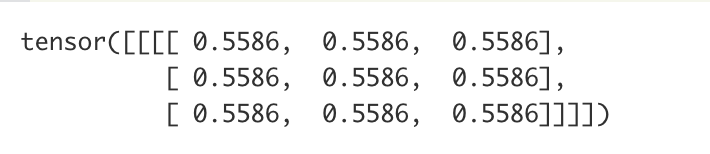

Then I compute the output of `c(t)`. I expected every element to be 1, since both the input and the weight are all ones, but that is not what I get.

The result changes depending on the seed passed to `torch.random.manual_seed`, and this happens in both PyTorch 0.4.0 and 1.0.
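One way to narrow this down, assuming the offset comes from the layer's bias term: `nn.Conv2d` creates a learnable bias by default (`bias=True`), and that bias is randomly initialized, which would fit the seed dependence. The sketch below is a hypothetical check, not a confirmed diagnosis. It repeats the setup, compares `c(t)` with `1 + c.bias`, and then builds a second layer (the name `c_nobias` is just for illustration) with `bias=False`.

```python
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(1)

# Same setup as the script above: a 1x1x3x3 input of ones and a 1x1 convolution.
t = torch.from_numpy(np.ones((1, 1, 3, 3), dtype=np.float32))
c = nn.Conv2d(1, 1, 1)  # bias=True by default

# Force the single weight to 1, as the original script does.
with torch.no_grad():
    c.weight.fill_(1.0)

# Hypothetical comparison layer with the bias term disabled.
c_nobias = nn.Conv2d(1, 1, 1, bias=False)
with torch.no_grad():
    c_nobias.weight.fill_(1.0)

with torch.no_grad():
    out = c(t)
    out_nobias = c_nobias(t)

print(out)
print(c.bias)  # randomly initialized, so its value depends on the seed

# If the bias explains the offset, every element of out equals 1 + bias.
print(torch.allclose(out, torch.ones_like(out) + c.bias.view(1, -1, 1, 1)))

# Without a bias, a 1x1 kernel of weight 1 should return the input unchanged.
print(out_nobias)
```

If the first check prints `True` and `out_nobias` is all ones, the seed-dependent part of the result is coming from the bias rather than from the weight.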

Did I misunderstand something?

Thanks!