I’m new to ML, and to PyTorch in particular, so I might have missed something, but please help me understand what’s happening.

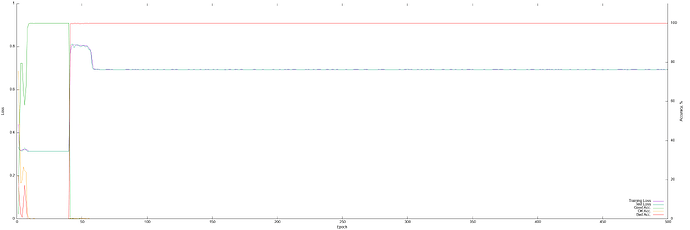

I have a simple model. It learns pretty well and quickly reaches 100% accuracy, but within just two epochs it completely deteriorates: the loss shoots way up and accuracy drops to 0%. I’ve seen this kind of spikiness in my previous attempts and elsewhere on the internet. I’ve also seen a few questions about it on StackOverflow and here on this forum, but I haven’t found an explanation of the phenomenon, at least not one I can understand yet.

I have a Sequential model. I use SoftMarginLoss as the loss function (I also tried MSELoss, with about the same result) and Adam as the optimiser. I have about 65k data points in total. In each epoch I randomly select a quarter of the dataset for training and 1000 points for testing.
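For anyone who wants to reproduce the setup, here is a minimal sketch of the training loop described above. It is not the code from the post: the synthetic 10-feature data and its labels, the layer sizes, the batch size of 64, the learning rate, and drawing the 1000 test points from samples not picked for training in that epoch are all assumptions made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in data: 65k random 10-dimensional points.
# SoftMarginLoss expects targets of +1 / -1.
N, D = 65_000, 10
X = torch.randn(N, D)
y = (X[:, 0] + X[:, 1] > 0).float() * 2 - 1

# Hypothetical layer sizes; the post only says "a Sequential model".
model = nn.Sequential(nn.Linear(D, 32), nn.ReLU(), nn.Linear(32, 1))
loss_fn = nn.SoftMarginLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)


def accuracy(outputs, targets):
    # Assumed metric: the predicted label is the sign of the raw output.
    return (torch.sign(outputs) == targets).float().mean().item()


history = []
for epoch in range(5):
    # Each epoch draws a fresh random quarter of the data for training and,
    # as an assumption, 1000 other points from the same shuffle for testing.
    perm = torch.randperm(N)
    train_idx = perm[: N // 4]
    test_idx = perm[N // 4 : N // 4 + 1000]

    model.train()
    for batch in train_idx.split(64):  # mini-batches of 64 indices
        optimizer.zero_grad()
        out = model(X[batch]).squeeze(1)
        loss = loss_fn(out, y[batch])
        loss.backward()
        optimizer.step()

    model.eval()
    with torch.no_grad():
        out = model(X[test_idx]).squeeze(1)
        test_loss = loss_fn(out, y[test_idx]).item()
        test_acc = accuracy(out, y[test_idx])
    history.append((test_loss, test_acc))
    print(f"epoch {epoch}: loss={test_loss:.4f} acc={test_acc:.3f}")
```

Swapping `nn.SoftMarginLoss()` for `nn.MSELoss()` gives the other variant mentioned above.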

This is a plot of loss and accuracy. As you can see, at epoch 41 accuracy tanks to 0 and loss shoots up to 0.8, higher even than in the first epoch. It then recovers to 0.69 but stays there for a long time. Can someone please explain what’s going on here?
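One number that may help whoever answers: with the ±1 targets SoftMarginLoss expects, the loss for an output of exactly zero is log(1 + e^0) = log 2 ≈ 0.693, which matches the 0.69 plateau. The small check below shows this. It also assumes, since the accuracy code isn't shown, that accuracy compares sign(output) to the target, in which case an all-zero output scores 0%. This only shows the numbers are consistent with the outputs collapsing to zero; it is not a diagnosis.

```python
import math

import torch
from torch import nn

loss_fn = nn.SoftMarginLoss()

# SoftMarginLoss(x, y) = mean(log(1 + exp(-y * x))) with targets y in {+1, -1}.
targets = torch.tensor([1.0, -1.0, 1.0, -1.0])

# Hypothetical collapsed model: every output is exactly zero.
zero_out = torch.zeros(4)

zero_loss = loss_fn(zero_out, targets).item()
# Assumed accuracy metric: sign(0) is 0, which matches neither +1 nor -1.
zero_acc = (torch.sign(zero_out) == targets).float().mean().item()

print(zero_loss, math.log(2))  # both about 0.6931
print(zero_acc)  # 0.0
```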