```python
import torch
import torch.nn as nn


class Net(nn.Module):

    def __init__(self, input_size, hidden_1_size, hidden_2_size, output_size, mask_one, mask_two):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(input_size, hidden_1_size, bias=True)
        self.fc2 = nn.Linear(hidden_1_size, hidden_2_size)
        self.fc3 = nn.Linear(hidden_2_size, output_size)

        # Fixed output weights: each hidden-2 unit contributes 1/hidden_2_size
        # (fc3 is frozen after construction with requires_grad_(False))
        final_layer_weights = torch.ones(size=(output_size, hidden_2_size)) * (1 / hidden_2_size)

        with torch.no_grad():
            # Zero out the connections that the masks do not allow
            self.fc1.weight.mul_(mask_one)
            self.fc2.weight.mul_(mask_two)
            self.fc3.weight = nn.Parameter(final_layer_weights)

    def forward(self, x):
        x = self.fc1(x)
        x = self.fc2(x)
        x = self.fc3(x)
        return x


# Parameters
n_inputs = 5
n_hidden_1 = 5
n_hidden_2 = 4
n_output = 1  # output

# fc1 mask, shape (n_hidden_1, n_inputs): identity, one input per hidden-1 unit
mask1 = torch.tensor([[1, 0, 0, 0, 0],
                      [0, 1, 0, 0, 0],
                      [0, 0, 1, 0, 0],
                      [0, 0, 0, 1, 0],
                      [0, 0, 0, 0, 1]])

# fc2 mask, shape (n_hidden_2, n_hidden_1): 1 = connection allowed
mask2 = torch.tensor([[1, 1, 0, 0, 1],
                      [0, 1, 1, 0, 1],
                      [0, 0, 1, 1, 1],
                      [1, 0, 0, 1, 1]])

model = Net(n_inputs, n_hidden_1, n_hidden_2, n_output, mask1, mask2)
model.fc3.requires_grad_(False)  # keep the fixed output layer frozen

# n_epochs, optimizer, criterion, data and target are defined elsewhere (not shown)
for epoch in range(n_epochs):
    optimizer.zero_grad()

    # Forward pass and loss
    y_pred = model(data)
    # print(y_pred)
    loss = criterion(y_pred, target)

    # Backward pass
    loss.backward()

    # Zero out the gradients of the masked (disallowed) connections
    with torch.no_grad():
        model.fc1.weight.grad.mul_(mask1)
        model.fc2.weight.grad.mul_(mask2)

    # Update
    optimizer.step()

    # Constraint 1: keep the fc1 weights non-negative
    with torch.no_grad():
        model.fc1.weight.clamp_(min=0)

    if (epoch + 1) % 1 == 0:
        print(f'epoch: {epoch+1}, loss = {loss.item():.4f}')
```
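Because the constraints on `fc1` and `fc2` are only enforced by manual steps in the loop, a small check can help confirm whether they actually hold after (or during) training. This is a minimal sketch; `constraint_report` and the `demo` module are hypothetical names used for illustration, and the check tests non-negativity (what `clamp_(min=0)` guarantees) rather than strict positivity.

```python
import torch
import torch.nn as nn


def constraint_report(model, mask1, mask2, atol=1e-6):
    """Report which of the fc1/fc2 constraints currently hold for `model`."""
    w1 = model.fc1.weight.detach()
    w2 = model.fc2.weight.detach()
    row_sums = w2.sum(dim=1)
    return {
        "fc1_nonnegative": bool((w1 >= 0).all()),
        "fc1_respects_mask": bool((w1[mask1 == 0] == 0).all()),
        "fc2_nonnegative": bool((w2 >= 0).all()),
        "fc2_respects_mask": bool((w2[mask2 == 0] == 0).all()),
        "fc2_rows_sum_to_1": bool(torch.allclose(row_sums, torch.ones_like(row_sums), atol=atol)),
    }


# Hypothetical stand-in with the question's layer shapes
demo = nn.Module()
demo.fc1 = nn.Linear(5, 5)
demo.fc2 = nn.Linear(5, 4)
mask1 = torch.eye(5, dtype=torch.long)  # same as the identity mask1 in the question
mask2 = torch.tensor([[1, 1, 0, 0, 1],
                      [0, 1, 1, 0, 1],
                      [0, 0, 1, 1, 1],
                      [1, 0, 0, 1, 1]])

# Set weights that satisfy every constraint, then inspect the report
with torch.no_grad():
    demo.fc1.weight.copy_(mask1 * 0.5)
    demo.fc2.weight.copy_(mask2 / 3)
print(constraint_report(demo, mask1, mask2))
```

Calling it once per epoch (or after training) on `model` shows which of the two constraints is being violated.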

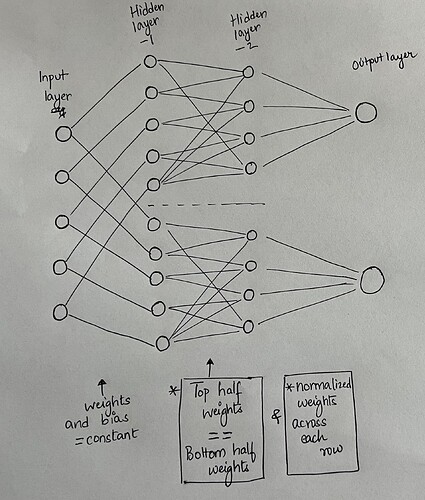

I have the simple network above, and I need to enforce the following constraints:

- All weights in `fc1` must be positive.

- All weights in `fc2` must be positive, and the weights in each row must sum to 1.
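One way to satisfy the second constraint by construction, instead of repairing the weights after every step, is to reparametrize `fc2.weight` as a row-wise softmax over the allowed entries of an unconstrained logit matrix `theta`: `W[i, j] = M[i, j] * exp(theta[i, j]) / sum_k M[i, k] * exp(theta[i, k])`, where `M` is `mask2`. Every allowed entry is then strictly positive, every masked entry is exactly 0, and each row sums to 1. This is a sketch assuming PyTorch 1.9 or later (for `torch.nn.utils.parametrize`) and a mask in which every row has at least one 1; the class name `MaskedRowSoftmax` is hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.utils.parametrize as parametrize


class MaskedRowSoftmax(nn.Module):
    """Map unconstrained logits to row-stochastic weights on a fixed mask.

    Entries where mask == 0 become exactly 0, the others are > 0, and each
    row sums to 1. Assumes every row of the mask contains at least one 1.
    """

    def __init__(self, mask):
        super().__init__()
        # Boolean buffer, so it follows the module across .to(device)
        self.register_buffer("mask", mask.bool())

    def forward(self, logits):
        # Disallowed logits become -inf, so softmax assigns them weight 0
        masked = logits.masked_fill(~self.mask, float("-inf"))
        return torch.softmax(masked, dim=1)


# Hypothetical usage with the question's fc2 shape (5 -> 4) and mask
torch.manual_seed(0)
mask2 = torch.tensor([[1, 1, 0, 0, 1],
                      [0, 1, 1, 0, 1],
                      [0, 0, 1, 1, 1],
                      [1, 0, 0, 1, 1]])
fc2 = nn.Linear(5, 4)
parametrize.register_parametrization(fc2, "weight", MaskedRowSoftmax(mask2))

w = fc2.weight  # recomputed from the underlying logits on every access
print(w.sum(dim=1))  # each row sums to 1, up to float rounding
```

With this approach, register the parametrization before creating the optimizer and drop the `model.fc2.weight.grad.mul_(mask2)` line from the loop: `fc2.weight` becomes a computed tensor, gradients accumulate on `fc2.parametrizations.weight.original`, and the masked logits receive zero gradient automatically.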

I found that I can enforce the first constraint by clamping the `fc1` weights after each update. However, I have not been able to implement the second constraint correctly.
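If you prefer to keep the clamp-after-`optimizer.step()` pattern already used for `fc1`, a simple option for `fc2` is to clamp, re-apply the mask, and divide each row by its sum. This is a heuristic rescaling, not the exact Euclidean projection onto the probability simplex, and it gives non-negative (possibly zero) rather than strictly positive weights. The helper name `renormalize_rows_on_mask_` is hypothetical, and resetting an all-zero row to a uniform distribution over its allowed entries is an arbitrary choice made for this sketch.

```python
import torch
import torch.nn as nn


def renormalize_rows_on_mask_(weight, mask):
    """In place: clamp to >= 0, zero entries outside `mask`, make rows sum to 1.

    Rows with no positive allowed entry after clamping are reset to a uniform
    distribution over that row's allowed entries. Assumes every row of `mask`
    contains at least one 1.
    """
    with torch.no_grad():
        mask = mask.to(weight.dtype)
        weight.clamp_(min=0).mul_(mask)  # non-negative, masked entries exactly 0
        row_sums = weight.sum(dim=1, keepdim=True)
        dead = row_sums == 0  # rows that would otherwise divide by zero
        weight.div_(torch.where(dead, torch.ones_like(row_sums), row_sums))
        uniform = mask / mask.sum(dim=1, keepdim=True)
        weight.copy_(torch.where(dead, uniform, weight))
    return weight


# Hypothetical usage with the question's fc2 shape and mask
torch.manual_seed(0)
mask2 = torch.tensor([[1, 1, 0, 0, 1],
                      [0, 1, 1, 0, 1],
                      [0, 0, 1, 1, 1],
                      [1, 0, 0, 1, 1]])
fc2 = nn.Linear(5, 4)
renormalize_rows_on_mask_(fc2.weight, mask2)
print(fc2.weight.sum(dim=1))
```

In the training loop, this would be called right after `optimizer.step()`, next to the existing `clamp_` on `fc1`, for example `renormalize_rows_on_mask_(model.fc2.weight, mask2)`.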

Any help is appreciated. Thank you.