I am doing some new work on the Forward-Forward algorithm using a ConvNet. When performing prediction I need to sum up the goodness score of each layer. The problem is that these scores have different dimensions, because the convolution (and pooling) operations change the spatial size of the data from layer to layer. Here is my code sample:

```python
def predict(self, x):
    goodness_score_per_label = []
    for label in range(self.output_dim):
        # perform one-hot encoding of the label onto the input
        print('label:', label, x.shape)
        encoded = overlay_y_on_x(x, label)
        goodness = []
        for idx, layer in enumerate(self.layers):
            encoded = layer(encoded)
            print('encoded:', encoded.shape)
            goodness += [encoded.pow(2).mean(1)]
            print('goodness:', len(goodness), goodness[idx].shape)
        goodness_score_per_label += [sum(goodness).unsqueeze(1)]
    goodness_score_per_label = torch.cat(goodness_score_per_label, 1)
    return goodness_score_per_label.argmax(1)
```

Here are the tensor dimensions:

```
encoded: torch.Size([50000, 6, 14, 14])
goodness: 1 torch.Size([50000, 14, 14])
encoded: torch.Size([50000, 16, 7, 7])
goodness: 2 torch.Size([50000, 7, 7])
encoded: torch.Size([50000, 120, 3, 3])
```
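The mismatch can be reproduced without the model. The sketch below uses random tensors with the shapes printed above (batch size reduced to 4, an assumption made only to keep it small) and the same `.pow(2).mean(1)` reduction. Averaging over dimension 1 removes only the channel axis, so each layer's goodness keeps its own spatial size, and adding a 14x14 map to a 7x7 map cannot broadcast.

```python
import torch

# Hypothetical stand-ins for the three layer outputs printed above,
# with a batch of 4 instead of 50000.
layer_outputs = [
    torch.randn(4, 6, 14, 14),
    torch.randn(4, 16, 7, 7),
    torch.randn(4, 120, 3, 3),
]

# Same reduction as in predict(): mean of squared activations over dim 1 (channels only).
goodness = [out.pow(2).mean(1) for out in layer_outputs]
goodness_shapes = [tuple(g.shape) for g in goodness]
print(goodness_shapes)  # [(4, 14, 14), (4, 7, 7), (4, 3, 3)]

# sum() adds the tensors elementwise, which requires broadcast-compatible shapes.
try:
    sum(goodness)
    sum_failed = False
except RuntimeError as err:
    sum_failed = True
    print("sum(goodness) failed:", err)
```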

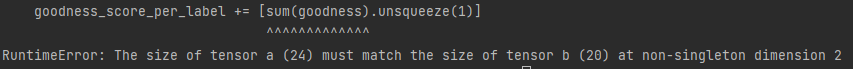

I am getting the error from this line: `goodness_score_per_label += [sum(goodness).unsqueeze(1)]`. What is the best way of handling this problem?
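One possible approach, sketched under the assumption that goodness is meant to be a single scalar per sample and per layer (as in the fully connected Forward-Forward setup, where `.mean(1)` already averages over every unit of a flat layer): average the squared activations over all non-batch dimensions, e.g. `encoded.pow(2).flatten(1).mean(1)`. Each layer then contributes a `[batch]` tensor regardless of its channel count or spatial size, so the per-layer scores can be summed. The `layers` list and the `overlay_label` helper below are hypothetical placeholders for `self.layers` and `overlay_y_on_x`.

```python
import torch
import torch.nn as nn

def layer_goodness(activations: torch.Tensor) -> torch.Tensor:
    # Mean of squared activations over every non-batch dim -> shape [batch].
    return activations.pow(2).flatten(1).mean(1)

def predict(layers, x, num_labels, overlay_label):
    goodness_per_label = []
    for label in range(num_labels):
        h = overlay_label(x, label)
        total = torch.zeros(x.shape[0], device=x.device)
        for layer in layers:
            h = layer(h)
            total = total + layer_goodness(h)  # all layers now give [batch]
        goodness_per_label.append(total.unsqueeze(1))  # [batch, 1]
    # [batch, num_labels] -> index of the label with the highest total goodness
    return torch.cat(goodness_per_label, dim=1).argmax(dim=1)

def overlay_label(x, label):
    # Hypothetical stand-in for overlay_y_on_x: writes a one-hot label
    # into the first 10 pixels of the top row of each image.
    x = x.clone()
    x[:, :, 0, :10] = 0.0
    x[:, :, 0, label] = x.max()
    return x

# Hypothetical conv layers giving 6x14x14, 16x7x7 and 120x3x3 outputs for 1x28x28 inputs.
layers = [
    nn.Sequential(nn.Conv2d(1, 6, 3, padding=1), nn.ReLU(), nn.MaxPool2d(2)),
    nn.Sequential(nn.Conv2d(6, 16, 3, padding=1), nn.ReLU(), nn.MaxPool2d(2)),
    nn.Sequential(nn.Conv2d(16, 120, 3, padding=1), nn.ReLU(), nn.MaxPool2d(2)),
]

with torch.no_grad():
    preds = predict(layers, torch.randn(4, 1, 28, 28), 10, overlay_label)
print(preds.shape)  # torch.Size([4])
```

Using `.mean` keeps layers with many units comparable to layers with few; `.sum` would weight larger layers more heavily. Whichever reduction is chosen here should match the one used to compute goodness during training, otherwise the training threshold and the prediction scores are on different scales.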