Is there ongoing work to bring PyTorch support to AMD GPUs?

Hi,

We already do.

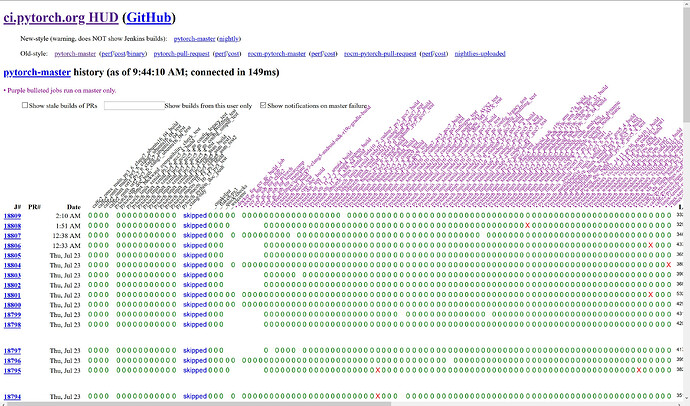

You can see the corresponding “rocm” builds, which make sure it all works, on our CI HUD.

I have a PC with an Nvidia GPU, but I am looking into building an app that can run on multiple PCs and on a server I have at home with an AMD GPU. When I visited the site you mentioned, I got the following page. Is this correct?

Yes, each column is a different build we test. Among them you can see all the “rocm” builds.

That being said, I don’t know whether or how you can build a single app that will run on all these devices. I think you have to choose CUDA, ROCm, or neither at build time.

Right now, I support CPU, CUDA, and mixed precision by detecting the user’s devices and picking the best one when the app loads. Then I send the model to the appropriate device, and I added a few if statements for AMP.
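The device-detection approach described above can carry over to AMD GPUs: on ROCm builds of PyTorch, AMD GPUs are exposed through the `torch.cuda` API, so `torch.cuda.is_available()` returns `True` and the device is still called `"cuda"`. The sketch below relies on that behavior; `pick_device` and `train_step` are hypothetical helper names, and each machine still needs the PyTorch build (CUDA, ROCm, or CPU-only) that matches its hardware.

```python
import torch
from torch import nn


def pick_device() -> torch.device:
    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API,
    # so this single check covers Nvidia (CUDA build) and AMD (ROCm build).
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")


def train_step(model, inputs, targets, optimizer, loss_fn, scaler, device):
    # Use mixed precision only on a GPU; on CPU, autocast is disabled.
    use_amp = device.type == "cuda"
    optimizer.zero_grad()
    with torch.autocast(device_type=device.type, enabled=use_amp):
        loss = loss_fn(model(inputs), targets)
    # With a disabled scaler, scale() returns the loss unchanged and step()
    # just calls optimizer.step(), so one code path works on every device.
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()


if __name__ == "__main__":
    device = pick_device()
    model = nn.Linear(4, 1).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    # Enable gradient scaling only when AMP is actually used.
    scaler = torch.cuda.amp.GradScaler(enabled=device.type == "cuda")
    x = torch.randn(8, 4, device=device)
    y = torch.randn(8, 1, device=device)
    print(device, train_step(model, x, y, optimizer, nn.MSELoss(), scaler, device))
```

Keeping AMP behind an `enabled=` flag rather than separate if/else branches means the training loop itself does not change between CPU, Nvidia, and AMD machines.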

The app I am referring to would let me send models to edge computers, train them there, and then send them back: an interface that allows this without going through SSH or something similar, i.e., a client-server framework.

So, for ROCm, does one need a specific build depending on one’s GPU?

I am also a bit confused by the layout of the site. How does one download a build?

You can choose which GPU architectures to support by providing a comma-separated list at build time (I have instructions for building for ROCm on my blog), or you can use the AMD-provided packages, which have broad support.
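To see what an already-installed binary targets, a minimal sketch like the following can help. It assumes a PyTorch release that provides `torch.cuda.get_arch_list()` and `torch.version.hip`; `rocm_build_summary` is a hypothetical helper name. When building from source, the target list is typically set through the `PYTORCH_ROCM_ARCH` environment variable; check the ROCm build instructions for the exact format. If your card’s `gfx` target is missing from the reported list, that binary likely will not run GPU kernels on it.

```python
import torch


def rocm_build_summary() -> dict:
    """Report which GPU backend this PyTorch binary targets and which
    GPU architectures it was compiled for."""
    return {
        # HIP version string on ROCm builds, None otherwise.
        "hip": torch.version.hip,
        # CUDA version string on CUDA builds, None otherwise.
        "cuda": torch.version.cuda,
        # "gfx..." targets on ROCm builds or "sm_..." targets on CUDA builds;
        # an empty list when no usable GPU is available.
        "archs": torch.cuda.get_arch_list(),
    }


if __name__ == "__main__":
    for key, value in rocm_build_summary().items():
        print(f"{key}: {value}")
```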

Good to know. Thanks

Thanks for your help

It is quite sad that PyTorch mostly favors Nvidia GPUs. Nvidia has a monopoly in the market, and people with little money can’t afford its expensive monopoly prices. On the other hand, for beginners on a budget, building PyTorch and getting support for other GPUs working is an intense pain.

I prefer open-source software, but Nvidia’s GPU drivers don’t really support open source, which leaves our privacy in their hands.

[Hatred toward Nvidia doesn’t mean hatred toward our moderators; I love them.]

I completely agree with you about Nvidia’s monopoly. I had to spend $500 on an Nvidia GPU for a new desktop; it was the most expensive part of the build. I would argue that a GPU should cost less than a CPU, given the functionality and performance each offers. However, GPUs often cost 3 to 5 times what a CPU would. Crazy! Nvidia is extremely greedy.

I am grateful to the people from Nvidia here; they have helped me a lot. My criticism is aimed at the company’s policies, not its employees.

Hi,

Unfortunately, I agree with you that support is not equivalent on both platforms.

But to give some historical context: even back in the Torch7 days (whose backend was the basis of PyTorch), when there were not many more than 5 developers, Nvidia engineers were working on adding kernels and CUDA support to the library. So I think it is natural that Nvidia support is better, as we just didn’t have enough people on the project to add similar support for ROCm (IIRC there is an open-source extension that was adding support for it, developed by a couple of other people).

That being said, now that we have more people, we do try to add more support for AMD GPUs (new CI, new binaries, etc.), so hopefully the experience is not as bad as it used to be.

But don’t hesitate to open issues for anything that is missing on this side.