Code to produce these results:

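```python
import torch
import torch.nn.functional as F
import imageio


def fill_center(img, s):
    """Set a centered s x s square of every image in the batch to 1 (in place)."""
    n, rows, cols = img.shape
    s //= 2  # half the side length of the square
    c_row = rows // 2
    c_col = cols // 2
    img[:, c_row - s:c_row + s, c_col - s:c_col + s] = 1
    return img


def rot_mat(angles):
    """Build a batch of 2x2 rotation matrices from a 1-D tensor of angles (radians)."""
    coss = torch.cos(angles).unsqueeze(1)
    sins = torch.sin(angles).unsqueeze(1)
    # (cos, -sin) and (sin, cos) are stacked along dim=2, i.e. as columns,
    # so each matrix is [[cos, sin], [-sin, cos]].
    rotations = torch.stack((torch.cat((coss, -sins), dim=1),
                             torch.cat((sins, coss), dim=1)), dim=2)  # n_images, 2, 2
    return rotations
```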
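
A quick way to check what the two helpers return is sketched below; it copies `fill_center` and `rot_mat` so it runs on its own, and the test sizes and angles are arbitrary choices for illustration. It highlights two details that are easy to miss: `fill_center` halves `s` with integer division, so an odd `s` yields an `(s - 1) x (s - 1)` square, and `rot_mat` stacks its two vectors along `dim=2` (as columns), so each matrix is `[[cos, sin], [-sin, cos]]`, the transpose (inverse) of the textbook counter-clockwise rotation `[[cos, -sin], [sin, cos]]`.

```python
import math

import torch


def fill_center(img, s):
    # Copy of the helper above: sets a centered square to 1.
    n, rows, cols = img.shape
    s //= 2
    c_row, c_col = rows // 2, cols // 2
    img[:, c_row - s:c_row + s, c_col - s:c_col + s] = 1
    return img


def rot_mat(angles):
    # Copy of the helper above.
    coss = torch.cos(angles).unsqueeze(1)
    sins = torch.sin(angles).unsqueeze(1)
    return torch.stack((torch.cat((coss, -sins), dim=1),
                        torch.cat((sins, coss), dim=1)), dim=2)


# fill_center: an even s gives an s x s square, an odd s gives (s - 1) x (s - 1).
even_area = fill_center(torch.zeros(1, 300, 300), 100).sum().item()  # 100 * 100
odd_area = fill_center(torch.zeros(1, 300, 300), 101).sum().item()   # also 100 * 100

# rot_mat: each matrix is [[cos, sin], [-sin, cos]], orthogonal with determinant 1.
angles = torch.tensor([0.0, math.pi / 4, math.pi / 2])
R = rot_mat(angles)
expected = torch.stack([
    torch.tensor([[math.cos(a), math.sin(a)],
                  [-math.sin(a), math.cos(a)]])
    for a in angles.tolist()
])

print(even_area, odd_area)
print(torch.allclose(R, expected, atol=1e-6))
print(torch.allclose(R @ R.transpose(1, 2), torch.eye(2).expand(3, 2, 2), atol=1e-6))
print(torch.linalg.det(R))
```

Because `F.affine_grid` maps output coordinates to input sampling locations, the image content ends up rotated by the inverse of whatever 2x2 block is placed in `theta`, so the row/column layout of `rot_mat` determines the visible direction of rotation.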

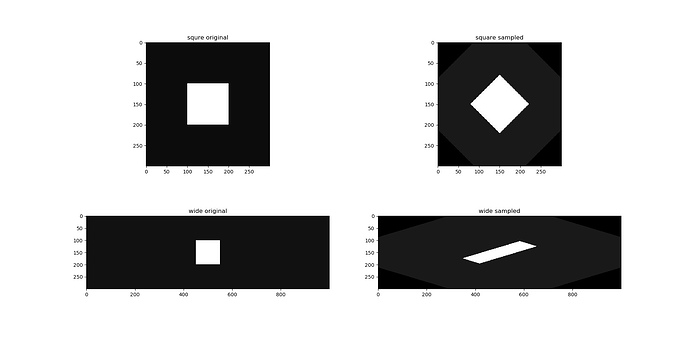

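```python
shapes = [[300, 300], [300, 1000]]  # (rows, cols): a square image and a wide image
names = ["square", "wide"]
n_images = 2

# One row of [angle, tx, ty] per output image: image 0 is the identity,
# image 1 is rotated by roughly pi / 4 (45 degrees).
parameters = torch.zeros((n_images, 3))
parameters[1, 0] = 3.1415 / 4

for shape, name in zip(shapes, names):
    # Dim background (0.1) with a bright 100 x 100 square in the middle.
    orig_image = torch.zeros((1, *shape)) + 0.1
    orig_image = fill_center(orig_image, 100)
    # (1, rows, cols) -> (n_images, 1, rows, cols), i.e. (N, C, H, W)
    orig_image = orig_image.expand(n_images, *orig_image.shape)

    # theta: (n_images, 2, 3) = 2x2 rotation block plus a translation column
    theta = torch.cat((rot_mat(parameters[:, 0]), parameters[:, 1:, None]), dim=-1)

    scale = 1
    n, c, rows, cols = orig_image.shape
    sample_grid = F.affine_grid(theta, (n_images, 1, int(rows * scale), int(cols * scale)),
                                align_corners=False).to(orig_image.device)
    images = F.grid_sample(orig_image, sample_grid, align_corners=False)

    images *= 255
    images = images.permute(0, 2, 3, 1).to("cpu", torch.uint8)  # (N, H, W, 1)

    for i in range(n_images):
        # Drop the trailing channel axis so the JPEG writer receives a 2-D grayscale array.
        imageio.imsave(f"{name}_{i:0>3}.jpg", images[i, ..., 0].numpy())
```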
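
One plausible reading of why the square and wide outputs differ, sketched under the assumption that the goal is a rigid rotation in pixel space: `F.affine_grid` expresses both the output grid and the sampling locations in normalized coordinates, where x spans the width and y spans the height, each inside [-1, 1]. With `align_corners=False`, one normalized unit is `cols / 2` pixels along x but `rows / 2` pixels along y, so a rotation matrix used directly as `theta` acts in pixel space as `S R S^-1` with `S = diag(cols / 2, rows / 2)`, which is only a rotation when `rows == cols`. The `theta_corrected = S^-1 R S` line is a hypothetical adjustment for illustration, not part of the original code.

```python
import math

import torch
import torch.nn.functional as F

rows, cols = 300, 1000  # the "wide" case from the script above

# With an identity theta, affine_grid returns the normalized (x, y) of each output
# pixel: x runs across the width, y down the height, both inside [-1, 1].
identity = torch.tensor([[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]])
grid = F.affine_grid(identity, (1, 1, rows, cols), align_corners=False)
x, y = grid[0, ..., 0], grid[0, ..., 1]
print(x.min().item(), x.max().item())  # approx. -1 + 1/cols and 1 - 1/cols
print(y.min().item(), y.max().item())  # approx. -1 + 1/rows and 1 - 1/rows

# Linear map from normalized to centered pixel coordinates (align_corners=False).
S = torch.diag(torch.tensor([cols / 2, rows / 2]))
S_inv = torch.linalg.inv(S)

a = math.pi / 4
# Same layout as rot_mat: [[cos, sin], [-sin, cos]].
R = torch.tensor([[math.cos(a), math.sin(a)],
                  [-math.sin(a), math.cos(a)]])

# Using R directly as theta (as the script does): the pixel-space map S R S^-1
# is not orthogonal when rows != cols, so shapes are not rigidly rotated.
pixel_map_plain = S @ R @ S_inv

# Hypothetical aspect-ratio correction (not part of the original code):
# choose theta = S^-1 R S so that the pixel-space map becomes exactly R.
theta_corrected = S_inv @ R @ S
pixel_map_corrected = S @ theta_corrected @ S_inv


def is_orthogonal(m):
    return torch.allclose(m @ m.T, torch.eye(2), atol=1e-5)


print(is_orthogonal(pixel_map_plain))      # False for a non-square image
print(is_orthogonal(pixel_map_corrected))  # True
```

To try the adjusted matrix in the script above, the 2x2 block of each `theta` would be replaced by `S_inv @ R @ S`; if the translation column is meant in pixels, it would also need converting with `S_inv`. This sketch relies on `scale = 1`, so input and output share the same `S`; a different output size would need separate scalings for the two sides.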