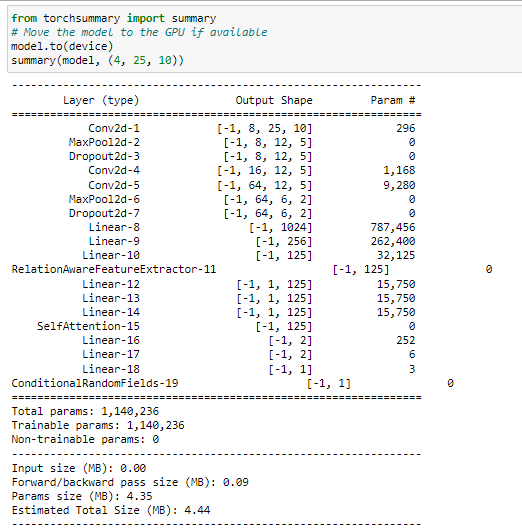

I'm getting a `Target 1 is out of bounds` error and can't work out how to debug it. Can anyone please help me with this? I have also added a snapshot of my model summary.

My code:

    # Define the loss function (negative log-likelihood)
    criterion = nn.NLLLoss()

    # Define the optimizer
    optimizer = torch.optim.SGD(model.parameters(), lr=base_learning_rate, momentum=momentum, weight_decay=weight_decay)

    # Initialize lists to store accuracy values
    train_accuracy_history = []

    # Training loop
    train_loss_history = []
    verbose = True
    l2_lambda = 0.0001

    for epoch in range(num_epochs):
        model.train()
        train_loss = 0.0
        correct = 0
        total = 0

        for batch_data, batch_labels in train_loader:
            optimizer.zero_grad()

            # Forward pass
            output = model(batch_data)
            batch_labels = batch_labels.view(-1)

            # Compute loss
            ce_loss = criterion(output, batch_labels.long())

            l2_reg = 0.0
            for param in model.parameters():
                l2_reg += torch.norm(param, p=2)

            # Combine the cross-entropy loss and L2 regularization term
            loss = ce_loss + l2_lambda * l2_reg

            # Backward pass and optimization
            loss.backward()
            optimizer.step()

            train_loss += loss.item()
            _, predicted = torch.max(output.data, 1)
            total += batch_labels.size(0)
            correct += (predicted == batch_labels).sum().item()

        train_loss /= len(train_loader)
        accuracy = correct / total
        train_accuracy_history.append(accuracy)
        train_loss_history.append(train_loss)

        if verbose:
            print(f"\nEpoch [{epoch+1}/{num_epochs}] | Train Loss: {train_loss:.4f} | Train Accuracy: {accuracy:.2f}")

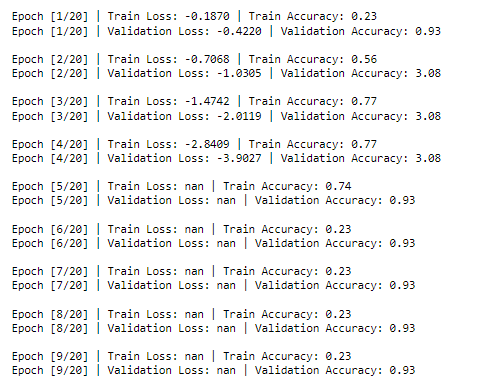

My error:

    ---------------------------------------------------------------------------
    IndexError                                Traceback (most recent call last)
    Input In [29], in <cell line: 14>()
         26 batch_labels = batch_labels.view(-1)
         28 # Compute loss
    ---> 29 ce_loss = criterion(output, batch_labels.long())
         30 #ce_loss = criterion(output, batch_labels)
         32 l2_reg = 0.0

    File ~\anaconda3\lib\site-packages\torch\nn\modules\module.py:1130, in Module._call_impl(self, *input, **kwargs)
       1126 # If we don't have any hooks, we want to skip the rest of the logic in
       1127 # this function, and just call forward.
       1128 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
       1129         or _global_forward_hooks or _global_forward_pre_hooks):
    -> 1130     return forward_call(*input, **kwargs)
       1131 # Do not call functions when jit is used
       1132 full_backward_hooks, non_full_backward_hooks = [], []

    File ~\anaconda3\lib\site-packages\torch\nn\modules\loss.py:211, in NLLLoss.forward(self, input, target)
        210 def forward(self, input: Tensor, target: Tensor) -> Tensor:
    --> 211     return F.nll_loss(input, target, weight=self.weight, ignore_index=self.ignore_index, reduction=self.reduction)

    File ~\anaconda3\lib\site-packages\torch\nn\functional.py:2689, in nll_loss(input, target, weight, size_average, ignore_index, reduce, reduction)
       2687 if size_average is not None or reduce is not None:
       2688     reduction = _Reduction.legacy_get_string(size_average, reduce)
    -> 2689 return torch._C._nn.nll_loss_nd(input, target, weight, _Reduction.get_enum(reduction), ignore_index)

    IndexError: Target 1 is out of bounds.
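
For reference, here is a minimal sketch of one way this message can arise. With a 2-D `output` of shape `(batch, num_classes)`, `nn.NLLLoss` needs every label to lie in `0 .. num_classes - 1`, so a label of 1 is out of bounds when `output` has only one column. The single-output case is an assumption on my side (the model summary is only available as an image), and the tensors below are made-up stand-ins rather than the real data.

```python
import torch
import torch.nn as nn

criterion = nn.NLLLoss()

# Hypothetical batch of 4 samples with binary labels 0/1 (stand-in data).
labels = torch.tensor([0, 1, 0, 1])

# Assumption: the model ends in a single output unit, so output is (batch, 1).
single_column = torch.log_softmax(torch.randn(4, 1), dim=1)

try:
    criterion(single_column, labels)
    error_message = None
except IndexError as exc:
    # Label 1 has no matching column in a (4, 1) output.
    error_message = str(exc)
print(error_message)

# With one column per class, the same labels are valid indices.
two_columns = torch.log_softmax(torch.randn(4, 2), dim=1)
loss = criterion(two_columns, labels)
print(loss.item())
```

If the same pattern applies to the training loop above, printing `output.shape` and `batch_labels.unique()` just before the `criterion(...)` call should show whether the largest label is greater than or equal to `output.shape[1]`.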