I have a Seq2Seq prediction problem: the task is to predict a time series y from the time series x, z1, z2, and z3.

The sequences x, z1, z2, z3, and y all have length 100.

The dataset itself is fine: I have already made this prediction work with a traditional RNN-based encoder-decoder model.

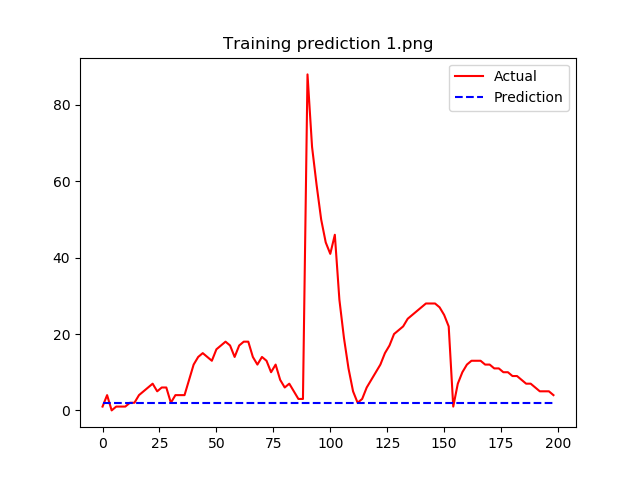

But when I switched to a TCN model, the training loss did not go down. The most annoying part is that the prediction is always 0: in every epoch and every batch, all predictions are zero.
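To make the symptom concrete, here is a minimal sketch of how the collapse can be checked. It assumes logits of shape `[batch, seq_len, num_classes]`, like the model output in my script; the helper name `class_histogram` and the hand-built tensor are hypothetical stand-ins, not part of the script.

```python
import torch

def class_histogram(logits: torch.Tensor, num_classes: int) -> torch.Tensor:
    """Count how often each class wins the argmax over all time steps."""
    pred = logits.argmax(dim=-1).reshape(-1)  # [batch * seq_len]
    return torch.bincount(pred, minlength=num_classes)

# Hypothetical stand-in for a collapsed model: class 0 always has the largest logit.
logits = torch.zeros(4, 100, 10)
logits[..., 0] = 1.0

hist = class_histogram(logits, num_classes=10)
print(hist[0].item(), hist[1:].sum().item())
```

If every count lands in bin 0, as it does for this stand-in, the model is predicting class 0 at every time step.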

Can somebody help me? Thank you very much!

> import os
> import time
> import torch
> import numpy as np
> from torch import nn
> import torch.utils.data as Data
> from torch.nn.utils import weight_norm
> from sklearn.metrics import r2_score
> import matplotlib.pyplot as plt
>
> Inj_meas = 1
> Injuries = ['Acc', 'Cdisp', 'NForce', 'NMoment']
> inj = Injuries[Inj_meas - 1]
>
> start_time = time.time()
> # os.path.join avoids the invalid '\X', '\y', '\z' escape sequences in the path strings
> X_train = torch.from_numpy(np.load(os.path.join('PROCESSED_DATA', 'X_train' + inj + '.npy'))).long().cuda()  # [3500*8*0.8, 100]
> y_train = torch.from_numpy(np.load(os.path.join('PROCESSED_DATA', 'y_train' + inj + '.npy')) - 1000).long().cuda()  # [3500*8*0.8, 100] (max=533)
> z1_train = torch.from_numpy(np.load(os.path.join('PROCESSED_DATA', 'z1_train' + inj + '.npy')) / 500).long().cuda()  # [3500*8*0.8, 100]
> z2_train = torch.from_numpy(np.load(os.path.join('PROCESSED_DATA', 'z2_train' + inj + '.npy')) / 100).long().cuda()  # [3500*8*0.8, 100]
> z3_train = torch.from_numpy(np.load(os.path.join('PROCESSED_DATA', 'z3_train' + inj + '.npy')) / 200).long().cuda()  # [3500*8*0.8, 100]
> print('Loading dataset duration:', time.time() - start_time, 's')
>

> ##########################################################

> class Chomp1d(nn.Module):
>     def __init__(self, chomp_size):
>         super(Chomp1d, self).__init__()
>         self.chomp_size = chomp_size
>
>     def forward(self, x):
>         return x[:, :, :-self.chomp_size].contiguous()
>
> class TemporalBlock(nn.Module):
>     def __init__(self, n_inputs, n_outputs, kernel_size, stride, dilation, padding, dropout=0.2):
>         super(TemporalBlock, self).__init__()
>         self.conv1 = weight_norm(nn.Conv1d(n_inputs, n_outputs, kernel_size, stride=stride, padding=padding, dilation=dilation))
>         self.chomp1 = Chomp1d(padding)
>         self.relu1 = nn.ReLU()
>         self.dropout1 = nn.Dropout(dropout)
>
>         self.conv2 = weight_norm(nn.Conv1d(n_outputs, n_outputs, kernel_size, stride=stride, padding=padding, dilation=dilation))
>         self.chomp2 = Chomp1d(padding)
>         self.relu2 = nn.ReLU()
>         self.dropout2 = nn.Dropout(dropout)
>
>         self.net = nn.Sequential(self.conv1, self.chomp1, self.relu1, self.dropout1, self.conv2, self.chomp2, self.relu2, self.dropout2)
>         self.downsample = nn.Conv1d(n_inputs, n_outputs, 1) if n_inputs != n_outputs else None
>         self.relu = nn.ReLU()
>         self.init_weights()
>
>     def init_weights(self):
>         self.conv1.weight.data.normal_(0, 0.01)
>         self.conv2.weight.data.normal_(0, 0.01)
>         if self.downsample is not None:
>             self.downsample.weight.data.normal_(0, 0.01)
>
>     def forward(self, x):
>         out = self.net(x)
>         res = x if self.downsample is None else self.downsample(x)
>         return self.relu(out + res)
>
> class TemporalConvNet(nn.Module):
>     def __init__(self, num_inputs, num_channels, kernel_size=2, dropout=0.2):
>         super(TemporalConvNet, self).__init__()
>         layers = []
>         num_levels = len(num_channels)
>         for i in range(num_levels):
>             dilation_size = 2 ** i
>             in_channels = num_inputs if i == 0 else num_channels[i-1]
>             out_channels = num_channels[i]
>             layers += [TemporalBlock(in_channels, out_channels, kernel_size, stride=1, dilation=dilation_size,
>                                      padding=(kernel_size-1) * dilation_size, dropout=dropout)]
>         self.network = nn.Sequential(*layers)
>
>     def forward(self, x):
>         return self.network(x)

> ##########################################################

> class TCN(nn.Module):
>     def __init__(self, embed_size, inputFeat_size, output_size, num_channels, kernel_size=2, dropout=0.3, emb_dropout=0.1):
>         super(TCN, self).__init__()
>         self.encoder_x = nn.Embedding(output_size, embed_size)
>         self.encoder_z1 = nn.Embedding(inputFeat_size, embed_size)
>         self.encoder_z2 = nn.Embedding(inputFeat_size, embed_size)
>         self.encoder_z3 = nn.Embedding(inputFeat_size, embed_size)
>         self.tcn = TemporalConvNet(embed_size, num_channels, kernel_size, dropout=dropout)
>         self.decoder = nn.Linear(num_channels[-1], output_size)
>         self.drop = nn.Dropout(emb_dropout)
>         self.emb_dropout = emb_dropout
>         self.init_weights()
>
>     def init_weights(self):
>         self.encoder_x.weight.data.normal_(0, 0.01)
>         self.encoder_z1.weight.data.normal_(0, 0.01)
>         self.encoder_z2.weight.data.normal_(0, 0.01)
>         self.encoder_z3.weight.data.normal_(0, 0.01)
>         self.decoder.bias.data.fill_(0)
>         self.decoder.weight.data.normal_(0, 0.01)
>
>     def forward(self, x, z1, z2, z3):
>         """Input ought to have dimension (N, C_in, L_in), where L_in is the seq_len; here the input is (N, L, C)"""
>         emb_x = self.drop(self.encoder_x(x))
>         emb_z1 = self.encoder_z1(z1)
>         emb_z2 = self.encoder_z2(z2)
>         emb_z3 = self.encoder_z3(z3)
>         emb_extended = emb_x + emb_z1 + emb_z2 + emb_z3
>         y = self.tcn(emb_extended.transpose(1, 2)).transpose(1, 2)
>         y = self.decoder(y)
>         return y.contiguous()

> ##########################################################

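> Batch_size = 64
> Emb_size = 64  # embedding dimension for x, z1, z2, z3
> InputFeat_size = 2  # vocabulary size of the z1, z2, z3 embeddings
> Hidden_size = 128
> Level_Size = 5  # number of TemporalBlock levels
> Num_Chans = [Hidden_size] * (Level_Size - 1) + [Emb_size]  # output channels per level
> Output_Size = 1000  # number of classes for y; also the vocabulary size of the x embedding
> K_size = 3
> CNN_dropout = 0.0
> Emb_dropout = 0.0
> Clip = 0.35  # gradient-clipping threshold (not applied in the loop below)
> Learning_rate = 0.01
> Epochs = 10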

> ##########################################################

> dataset = Data.TensorDataset(X_train, z1_train, z2_train, z3_train, y_train)
> loader = Data.DataLoader(dataset=dataset, batch_size=Batch_size, shuffle=True)
> ##########################################################
> model = TCN(Emb_size, InputFeat_size, Output_Size, Num_Chans, dropout=CNN_dropout, emb_dropout=Emb_dropout, kernel_size=K_size).cuda()
> optimizer = torch.optim.Adam(model.parameters(), lr=Learning_rate)
> scheduler_lr = torch.optim.lr_scheduler.MultiStepLR(optimizer, [5, 18, 45], gamma=0.3, last_epoch=-1)
> criterion = nn.CrossEntropyLoss().cuda()
>
> LossCurve = []
> R2Curve = []

>

> for epoch in range(Epochs):
>     epoch_start_time = time.time()
>     loss_batch = []
>     model.train()
>     for step, (batch_x, batch_z1, batch_z2, batch_z3, batch_y) in enumerate(loader):  # batch_x.shape = batch_z1.shape = [Bat_S, 100]
>         optimizer.zero_grad()  # reset gradients; without this they accumulate across steps
>         prediction = model(batch_x, batch_z1, batch_z2, batch_z3)  # [Bat_S, 100, 1000]
>         loss = criterion(prediction.reshape(-1, Output_Size), batch_y.reshape(-1))  # batch_y.shape = [Bat_S, 100]
>         loss.backward()
>         optimizer.step()
>         loss_batch.append(loss.item())
>         if step % 10 == 0:
>             print('Epoch: ', epoch + 1, '/', Epochs, '| Step:', step, '/', len(loader), '| Batch_Loss:', loss.item())
>         if step == 50:
>             break
>
>     loss = np.mean(loss_batch)
>     print('Epoch: ', epoch + 1, '/', Epochs, '| Loss:', loss, '| Epoch duration:', time.time() - epoch_start_time, 's | Lr:',
>           optimizer.state_dict()['param_groups'][0]['lr'])
>     # Plot the first sample of the last batch: actual vs. predicted class index
>     Num = 0
>     Real = batch_y[Num].cpu().numpy()
>     Pred = prediction[Num].detach().argmax(dim=1).cpu().numpy()
>     plt.plot(np.arange(0, 200, 2), Real, 'r', label='Actual')
>     plt.plot(np.arange(0, 200, 2), Pred, 'b--', label='Prediction')
>     plt.title('Training prediction %d.png' % (epoch + 1))
>     R22 = r2_score(Real, Pred)
>     plt.legend()
>     LossCurve.append(loss)
>     R2Curve.append(round(R22, 1))
>     plt.savefig(os.path.join('Prediction_IMAGE', 'Training prediction %d.png' % (epoch + 1)))
>     plt.close()

>

> plt.plot(LossCurve, label='Loss')
> plt.plot(R2Curve, label='R2 sample')
> plt.title('Training Progress')
> plt.legend()
> plt.savefig(os.path.join('Prediction_IMAGE', 'Loss and R2.png'))
> plt.close()
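
For comparison, here is a minimal sketch of a training step that resets gradients, clips them with a threshold like `Clip`, and advances a `MultiStepLR` scheduler once per epoch. The small linear model and the random data are hypothetical stand-ins so that the snippet runs on CPU; only the order of the calls is the point, and I am not claiming this alone fixes the zero predictions.

```python
import torch
from torch import nn

torch.manual_seed(0)
num_classes, clip = 10, 0.35                      # clip mirrors `Clip` in the script
model = nn.Linear(8, num_classes)                 # hypothetical stand-in for the TCN
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer, [5, 18, 45], gamma=0.3)
criterion = nn.CrossEntropyLoss()

x = torch.randn(64, 100, 8)                       # [batch, seq_len, features]
y = torch.randint(0, num_classes, (64, 100))      # [batch, seq_len] class indices

losses = []
for epoch in range(3):                            # one full-batch step per epoch
    model.train()
    optimizer.zero_grad()                         # clear gradients from the previous step
    logits = model(x)                             # [batch, seq_len, num_classes]
    loss = criterion(logits.reshape(-1, num_classes), y.reshape(-1))
    loss.backward()
    torch.nn.utils.clip_grad_norm_(model.parameters(), clip)  # cap the total gradient norm
    optimizer.step()
    scheduler.step()                              # once per epoch, after optimizer.step()
    losses.append(loss.item())

print(losses)
```

With the milestones `[5, 18, 45]`, the learning rate stays at its initial value for these three epochs and would first drop by the factor `gamma` after the fifth call to `scheduler.step()`.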