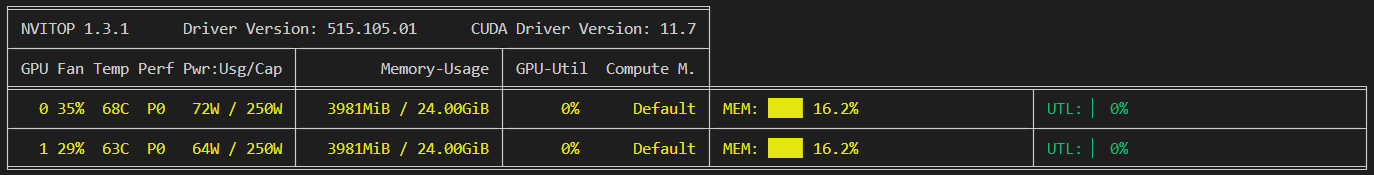

I am having a strange issue with PyTorch on a machine that has 2 GPUs. When I run the following code with the VRAM of both GPUs empty:

    Python 3.11.6 | packaged by conda-forge | (main, Oct 3 2023, 10:40:35) [GCC 12.3.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import torch
    >>> dev = torch.device('cuda:0')
    >>> a = torch.rand(1000,1000,1000, device=dev)

The ‘a’ tensor gets copied over to both GPUs even though I explicitly placed it on GPU #0 (`cuda:0`).

However, the same code doesn’t do this on another system with similar hardware.
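One way to narrow this down is to ask PyTorch itself how much tensor memory it holds on each device before and after the allocation. The snippet below is only a rough sketch: the helper name `allocated_bytes_per_device` is made up for illustration, and the tensor is deliberately smaller than the 1000x1000x1000 one above (which needs about 4 GB in float32). Note that `torch.cuda.memory_allocated` only counts memory held by tensors through PyTorch's caching allocator, so its numbers can differ from the totals that a tool like `nvidia-smi` reports.

```python
import torch


def allocated_bytes_per_device():
    """Map each visible CUDA device index to the bytes PyTorch has allocated on it.

    Hypothetical helper, not part of PyTorch.
    """
    if not torch.cuda.is_available():
        return {}  # no CUDA devices visible to this process
    return {
        i: torch.cuda.memory_allocated(i)
        for i in range(torch.cuda.device_count())
    }


before = allocated_bytes_per_device()

if torch.cuda.is_available():
    # Assumption: a 1000x1000x100 float32 tensor (about 400 MB) is enough
    # to make the difference visible while fitting on smaller cards.
    a = torch.rand(1000, 1000, 100, device=torch.device("cuda:0"))

after = allocated_bytes_per_device()

for idx in after:
    delta = after[idx] - before[idx]
    print(f"cuda:{idx}: {delta} additional bytes allocated by PyTorch")
```

If only `cuda:0` shows an increase here, the tensor data itself lives on one GPU, and whatever shows up on the second GPU is probably something other than a copy of the tensor.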

Specs of the faulty setup:

GPU: Quadro P6000 (2x)

    $ conda list torch*
    # packages in environment at ....:
    #
    # Name          Version   Build                           Channel
    pytorch         2.1.0     py3.11_cuda11.8_cudnn8.7.0_0    pytorch
    pytorch-cuda    11.8      h7e8668a_5                      pytorch
    pytorch-mutex   1.0       cuda                            pytorch
    torchaudio      2.1.0     py311_cu118                     pytorch
    torchtriton     2.1.0     py311                           pytorch
    torchvision     0.16.0    py311_cu118                     pytorch