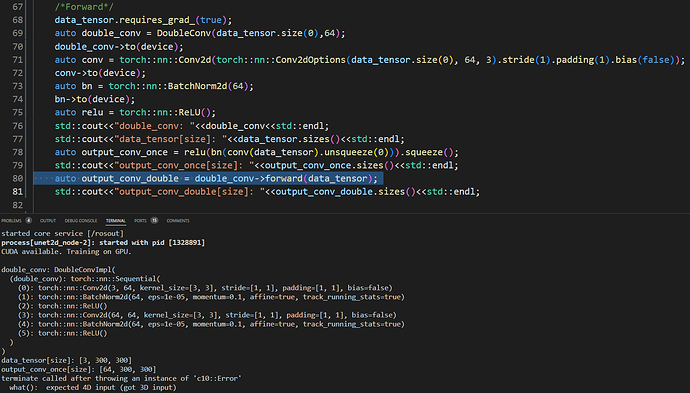

Hello, I’ve encountered a tensor dimension issue when calling forward() on line 80.

Line 78 performs conv2d once. It succeeds when I manually unsqueeze() the tensor before BatchNorm2d.

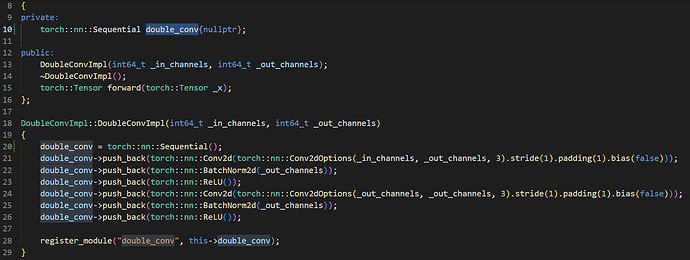

This is the part that shows how the Sequential is initialized in DoubleConv’s constructor.

Any idea how to implement line 78 (the unsqueeze) as part of the Sequential’s initialization?

cc: @ptrblck @Fitanium

Why do you squeeze() the activation tensor after the relu layer?

By default PyTorch expects 4D inputs for 2D layers in the shape [batch_size, channels, height, width]. Newer PyTorch releases also accept 3D inputs and assume a batch size of 1. However, I don’t know whether the C++ frontend supports that for all layers too.

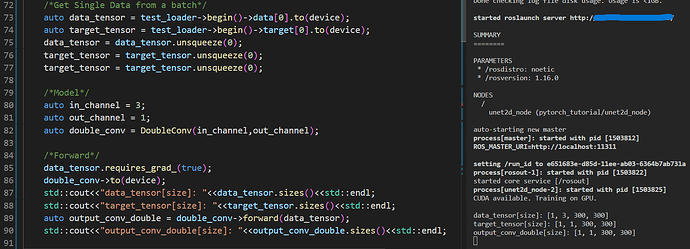

@ptrblck Thanks for your quick reply!!

I’ve changed data_tensor to 4D (a single batch) on line 75, followed by a forward() call on line 89.

I got output_conv_double with expected dimension.

I appreciate your valuable advice!!
