Hi, I usually index tensors with lists of indices, like this:

import torch

x = torch.as_tensor([[1,2,3,4,5], [6,7,8,9,0]])

index = [[0, 1, 1], [1, 1, 2]]

x[index]  # tensor([2, 7, 8])

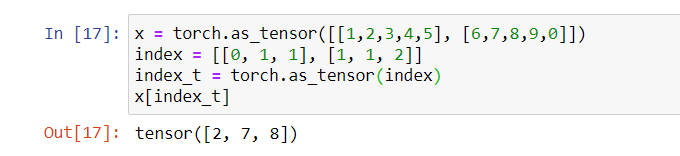

Now I need the index to be a tensor, but when I index with a tensor instead of a list, I get an error:

x = torch.as_tensor([[1,2,3,4,5], [6,7,8,9,0]])

index = torch.as_tensor( [[0, 1, 1], [1, 1, 2]])

x[index]  # IndexError: index 2 is out of bounds for dimension 0 with size 2

I don’t know whether tensor indices are expected to behave differently, but a NumPy array behaves like the list:

import numpy

x = torch.as_tensor([[1,2,3,4,5], [6,7,8,9,0]])

index = [[0, 1, 1], [1, 1, 2]]

index_n = numpy.asarray(index)

index_t = torch.as_tensor(index)

x[index]  # tensor([2, 7, 8])

x[index_n]  # tensor([2, 7, 8])

x[index_t]  # IndexError: index 2 is out of bounds for dimension 0 with size 2

How can I get the same output using a tensor instead of a list?
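
One direction that seems to work, as a minimal sketch: unpack the index tensor so that each of its rows is used as the index for one dimension of x. This assumes the first dimension of the index tensor enumerates the dimensions of x (row 0 holds the row indices, row 1 the column indices). The names `result` and `result_explicit` are only for illustration.

```python
import torch

x = torch.as_tensor([[1, 2, 3, 4, 5], [6, 7, 8, 9, 0]])
index_t = torch.as_tensor([[0, 1, 1], [1, 1, 2]])

# Iterating a tensor yields its slices along dim 0, so tuple(index_t)
# is a pair of 1-D tensors: one index tensor per dimension of x.
result = x[tuple(index_t)]

# The same selection written out explicitly, one index tensor per dimension.
result_explicit = x[index_t[0], index_t[1]]

print(result)           # tensor([2, 7, 8])
print(result_explicit)  # tensor([2, 7, 8])
```

With a single tensor as the index, every value is apparently taken as an index into dimension 0 only, which would explain the out-of-bounds error for the value 2. Passing a tuple gives one index tensor per dimension.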