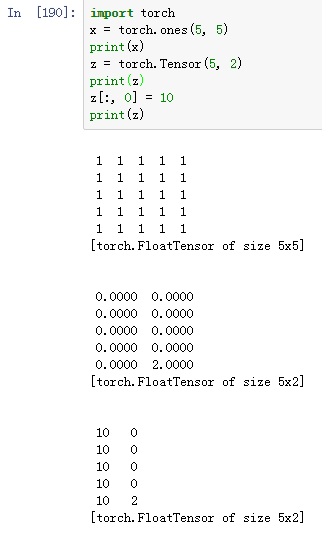

When I create a tensor of a specific size in Python with torch, the values are usually very small, almost equal to zero. However, today I got a tensor initialized with the value 2. Is that normal? The code I ran is shown in the screenshot.

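torch.Tensor, when called with a size (like torch.empty), uses uninitialized memory to create the tensor. Whatever happens to be stored in that memory is interpreted as the values, so they are often close to zero but can be anything, including 2. If you want a random tensor, use torch.rand, torch.randn, or a similar function.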
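As a minimal sketch of the difference, the snippet below creates an uninitialized tensor next to explicitly initialized ones. The shape (2, 3), the seed, and the variable names are only illustrative assumptions, not taken from the original code.

```python
import torch

# Uninitialized: the contents are whatever was already in that memory,
# so the printed values are arbitrary and can differ from run to run.
uninit = torch.empty(2, 3)

# Explicitly initialized alternatives.
torch.manual_seed(0)          # optional, only to make the random draws repeatable
uniform = torch.rand(2, 3)    # uniform samples in [0, 1)
normal = torch.randn(2, 3)    # samples from a standard normal distribution
zeros = torch.zeros(2, 3)     # all zeros, if that is what you expected

print(uninit)
print(uniform)
print(normal)
print(zeros)
```

If you need defined values, pick one of the initialized constructors rather than relying on what the uninitialized one happens to contain.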