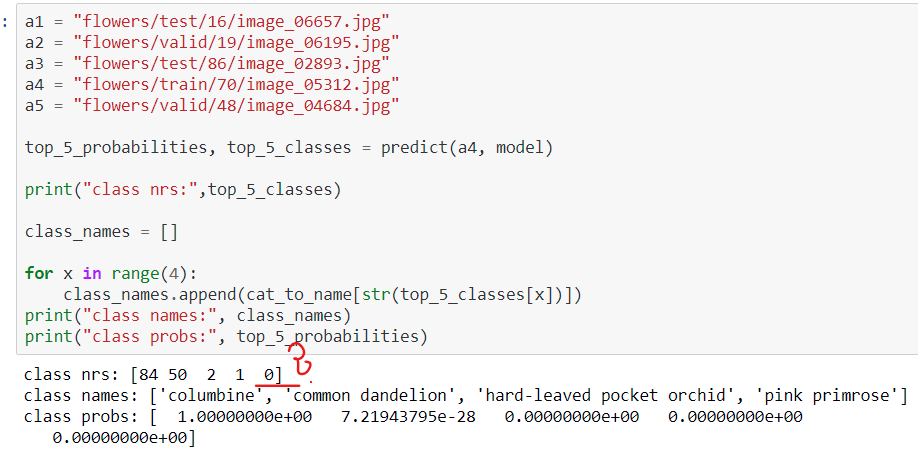

Calling `tensor.topk` should return the top probabilities and the top classes.

This call is returning class 0, which shouldn't exist according to my category mapping. What am I doing wrong?

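```python
import torch

def predict(image_path, model):
    # preproc() is my own helper; it returns the preprocessed image as an array
    tens_im = preproc(image_path)
    model.eval()  # disable dropout / batch-norm updates during inference
    with torch.no_grad():
        tens_im = torch.as_tensor(tens_im, dtype=torch.float32)
        tens_im = tens_im.unsqueeze(0)  # add a batch dimension
        # `device` is defined elsewhere; the model outputs log-probabilities
        # (final LogSoftmax), so torch.exp turns them into probabilities
        probabilities = torch.exp(model(tens_im.to(device)))
        top_p, top_class = probabilities.topk(5, dim=1)
    top_p = top_p.cpu().numpy()[0]
    top_class = top_class.cpu().numpy()[0]
    return top_p, top_class
```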
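A likely explanation, offered as a hedged sketch: `topk` returns the *positions* of the largest outputs along `dim=1`, which are always 0-based output indices, not the labels from your category mapping. If the model was trained with torchvision's `ImageFolder` (an assumption here), its `class_to_idx` dict maps each folder name to one of those indices, with folder names sorted as strings. Index 0 is therefore valid and has to be translated back through the inverted mapping. The helper names below (`invert_class_to_idx`, `indices_to_labels`) and the example folders `"1"` to `"102"` are hypothetical.

```python
from typing import Dict, List, Sequence


def invert_class_to_idx(class_to_idx: Dict[str, int]) -> Dict[int, str]:
    """Reverse the mapping: model output index -> dataset class label."""
    return {idx: label for label, idx in class_to_idx.items()}


def indices_to_labels(top_class: Sequence[int],
                      class_to_idx: Dict[str, int]) -> List[str]:
    """Translate topk indices (0-based output positions) into class labels."""
    idx_to_class = invert_class_to_idx(class_to_idx)
    # int() lets this accept numpy integers as returned by .numpy()
    return [idx_to_class[int(i)] for i in top_class]


# Hypothetical dataset: folders named "1".."102". ImageFolder-style indexing
# sorts the names as strings, so "10" and "100" come before "2".
folder_names = [str(n) for n in range(1, 103)]
class_to_idx = {name: i for i, name in enumerate(sorted(folder_names))}

print(indices_to_labels([0, 1, 2], class_to_idx))  # prints ['1', '10', '100']
```

In `predict`, this would be applied to the returned `top_class`, e.g. `indices_to_labels(top_class, model.class_to_idx)`, assuming `class_to_idx` was copied from the training dataset onto the model. The resulting labels can then be looked up in the category-name mapping.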