Hi Folks!

I am using the add_hparams function on the integrated TensorBoard SummaryWriter, which is great.

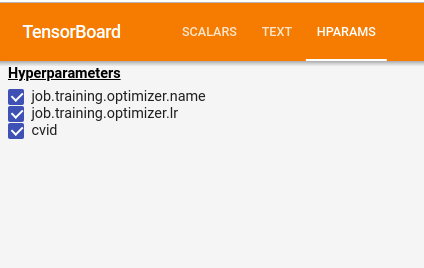

I notice, though, that we do not get any control over the hyperparameter values in TensorBoard itself. For example, compare this image

to the screenshot in the TensorBoard docs:

I am assuming that this is due to the simpler implementation here: add_hparams just receives a dict of params when metrics are logged, so there is no prior declaration of the hyperparameter ranges etc., as there is when using the hparams API directly in TF.
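
For reference, this is roughly the kind of call I mean. It is only a minimal sketch: the hyperparameter names, metric names, and values are made up for illustration, and the log directory is a throwaway temp folder.

```python
import tempfile

from torch.utils.tensorboard import SummaryWriter

# Hypothetical hyperparameters and final metrics, for illustration only.
hparams = {"lr": 0.01, "batch_size": 32, "optimizer": "adam"}
metrics = {"hparam/accuracy": 0.91, "hparam/loss": 0.27}

# Throwaway log directory; point this at your real run folder instead.
log_dir = tempfile.mkdtemp()

# The hyperparameters are passed as a plain dict together with the metrics,
# with no separate up-front declaration of their ranges.
with SummaryWriter(log_dir=log_dir) as writer:
    writer.add_hparams(hparams, metrics)
```

Running `tensorboard --logdir` on that folder should then show the run in the HPARAMS tab.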

My question is: is there any way currently to register those hparams in TensorBoard via the PyTorch API, or otherwise get the same behavior?

Or are there any plans to extend in that direction? (I see no open issues on GitHub.)

cheers!