I know TensorBoard (TB) isn't made by PyTorch, but I think the issue probably lies on the PyTorch SummaryWriter side of things.

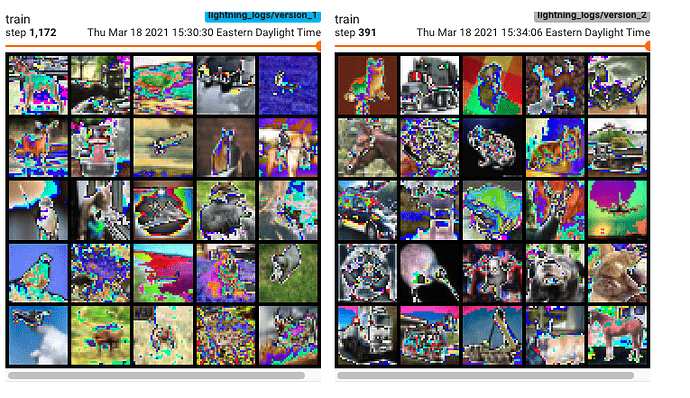

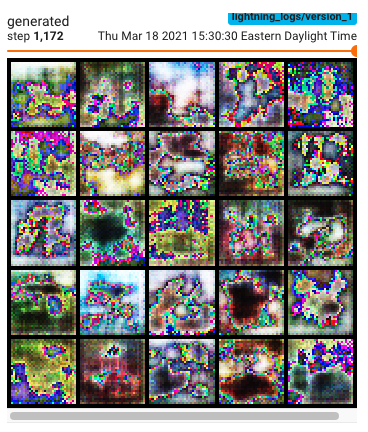

I’ve been trying to train a CIFAR-10 DCGAN and pulling my hair out for a week because the images always come out like this:

Observe the extreme clipping/saturation.

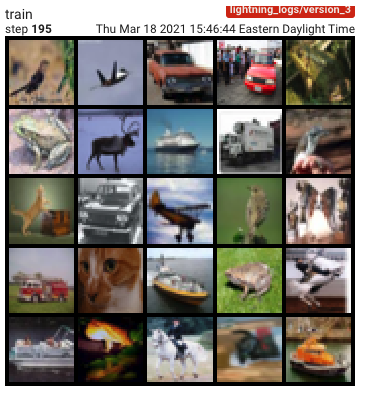

Today I decided to write some training data to TensorBoard as well, and I got this:

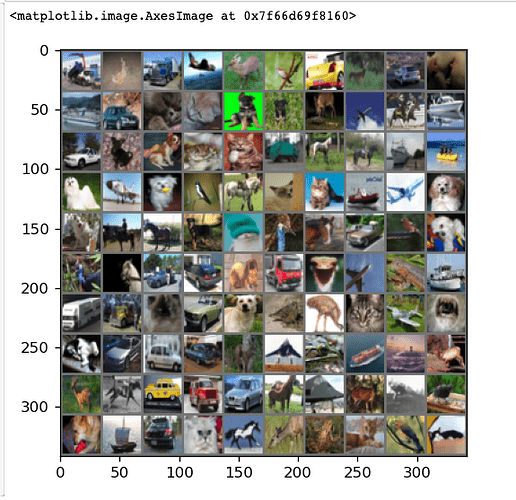

Here is the training data observed with plt.imshow in a Jupyter notebook, which confirms that my data is not loaded incorrectly.

I’m completely stumped. Grayscale images (MNIST) work fine in TB. Does anyone have any insight? This is my code to write the images to TensorBoard (I’m using PyTorch Lightning, but that shouldn’t matter):

    from torchvision.utils import make_grid

    img = pl_module.sample_G(self._n * self._n)
    train_batch = next(iter(pl_module.train_dataloader()))[:(self._n * self._n)]

    # Concatenate images
    grid = make_grid(img, self._n)
    t_grid = make_grid(train_batch, self._n)

    if pl_module.logger is not None:
        pl_module.logger.experiment.add_image('train', t_grid, pl_module.global_step, dataformats='CHW')
        pl_module.logger.experiment.add_image('generated', grid, pl_module.global_step, dataformats='CHW')
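
One sanity check that might narrow this down. This is only a guess: it assumes the CIFAR-10 images are normalized to [-1, 1] (common for a DCGAN with a tanh output), and that `add_image` treats float tensors as lying in [0, 1], which is my understanding but not something I've confirmed for every version. The helper name `to_unit_range` and the random stand-in batch are made up for illustration. The sketch prints the value range of a batch and rescales it before logging:

```python
import torch


def to_unit_range(images: torch.Tensor) -> torch.Tensor:
    """Map images from an ASSUMED [-1, 1] range to [0, 1] (hypothetical helper)."""
    return ((images + 1.0) / 2.0).clamp(0.0, 1.0)


# Stand-in for a batch of normalized RGB images shaped (N, C, H, W).
# In the real callback this would be `img` or `train_batch`.
fake_batch = torch.rand(16, 3, 32, 32) * 2.0 - 1.0

# If the minimum is negative, the data is not in the [0, 1] range.
print("before:", fake_batch.min().item(), fake_batch.max().item())

rescaled = to_unit_range(fake_batch)
print("after: ", rescaled.min().item(), rescaled.max().item())
```

If the printed minimum of the real batch is negative, passing the rescaled tensor to `make_grid` (or calling `make_grid(..., normalize=True)`) before `add_image` may remove the saturation. If the values are already in [0, 1], the cause is something else.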