I’m trying to understand why we need a tensor of size N.

Isn’t 1xN enough?

Some context might help us understand your question.

But I will say this. If you have a tensor of shape (1, N) you can do my_tensor.view(N) to get a tensor of shape (N,)
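A minimal sketch of that reshape, assuming a recent PyTorch release. The name `my_tensor` comes from the reply above; `N = 5` and the values are made up for illustration.

```python
import torch

N = 5  # hypothetical size, chosen only for illustration
my_tensor = torch.arange(N, dtype=torch.float32).view(1, N)  # shape (1, N)

flat = my_tensor.view(N)          # same data viewed with shape (N,)
also_flat = my_tensor.squeeze(0)  # alternative: drop the size-1 leading dimension

print(my_tensor.shape)  # torch.Size([1, 5])
print(flat.shape)       # torch.Size([5])
print(also_flat.shape)  # torch.Size([5])
```

Both `view` and `squeeze` return a tensor that shares storage with `my_tensor`, so no data is copied.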

Coming from MATLAB, I’m trying to get the concept.

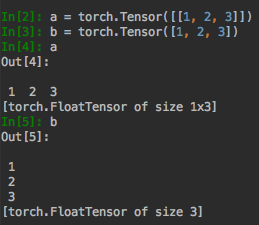

For example:

Now I can do the dot product of a with itself, but can’t do it with b.

In MATLAB you would only have 1x3 and not just size 3.

So what is it good for?
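The screenshot is not reproduced here, so the following is only a guess at what it showed. It assumes `a` and `b` hold the values used later in this thread and that "dot product" means `torch.dot`, which only accepts 1D tensors.

```python
import torch

# Assumed values: the same a and b that appear later in the thread.
a = torch.tensor([1.0, 2.0, 3.0])    # 1D, shape (3,)
b = torch.tensor([[1.0, 2.0, 3.0]])  # 2D, shape (1, 3)

dot_aa = torch.dot(a, a)  # works: both arguments are 1D
print(dot_aa.item())      # 14.0

try:
    torch.dot(b, b)       # torch.dot rejects 2D inputs
    dot_bb_failed = False
except RuntimeError as err:
    dot_bb_failed = True
    print("torch.dot(b, b) failed:", err)
```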

So your question really is: what use is a 1d tensor?

I dunno.

If you wanted to represent something 1 dimensional and didn’t want to deal with storing it in a 2d tensor where one dimension is 1, then you’d use a 1D tensor. 1D tensors are good to have for consistency purposes.
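One way to see the consistency argument, as a sketch on a recent PyTorch release: indexing and reductions each drop a dimension, so 1D tensors show up naturally when working with 2D ones. The matrix `m` is made up for illustration.

```python
import torch

m = torch.arange(6, dtype=torch.float32).view(2, 3)  # 2D, shape (2, 3)

row = m[0]               # indexing drops a dimension: shape (3,)
col_sums = m.sum(dim=0)  # reducing over dim 0 leaves shape (3,)
total = m.sum()          # reducing over everything leaves a 0-d tensor

print(m.dim(), row.dim(), col_sums.dim(), total.dim())  # 2 1 1 0
```

If only 2D and higher were allowed, each of these operations would need a rule for where to put the leftover size-1 dimension.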

Thanks.

Could you give an example for a case where 1xN tensors would not be good but a size N Tensor would?

Couldn’t one argue the same with 3D tensors though?

Where would a 1x1xN tensor not be good but a 1xN or N tensor would?

This is just a difference of how the language/library works.

In PyTorch you can have 1D, 2D, 3D, ..., nD tensors and you can use whatever you find most convenient.

I don’t really know MATLAB, but you seem to imply MATLAB only allows 2D, ..., nD tensors.

Well, you’ve got a point.

What confuses me still is this.

I think of the 1D tensor as a vector (is that right, or is this some other kind of animal?)

A 2D tensor (1xN) can be transposed, for example, but a 1D tensor can’t.

So it’s not a vector in that sense.

You’re right about MATLAB; I hadn’t thought of it that way, but it only allows 2D, ..., nD.

So now my question is: why doesn’t a 1D tensor behave like a vector?

In what ways does it not behave like a vector?

You can matrix multiply a 1D tensor with a 2D tensor:

In [2]: x = torch.randn(3, 3)

In [3]: y = torch.randn(3)

In [4]: x @ y

Out[4]:

0.6492

-2.3366

0.5286

[torch.FloatTensor of size 3]
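A short sketch of how `@` (`torch.matmul`) treats 1D operands, assuming a recent PyTorch release. A 1D tensor is treated as a row vector on the left and as a column vector on the right, and the temporary dimension is removed from the result.

```python
import torch

x = torch.randn(3, 3)
y = torch.randn(3)

print((x @ y).shape)  # torch.Size([3])  matrix @ vector -> vector
print((y @ x).shape)  # torch.Size([3])  vector @ matrix -> vector
print((y @ y).shape)  # torch.Size([])   vector @ vector -> 0-d scalar

# The same product written with an explicit column vector:
explicit = (x @ y.unsqueeze(1)).squeeze(1)  # (3, 3) @ (3, 1) -> (3, 1) -> (3,)
print(torch.allclose(x @ y, explicit))      # True
```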

For example:

a = torch.Tensor([1, 2, 3])

b = torch.Tensor([[1, 2, 3]])

b@b.t()

Out[4]:

14

[torch.FloatTensor of size 1x1]

a@a.t()

Traceback (most recent call last):

File "/Users/omz/anaconda3/lib/python3.6/site-packages/IPython/core/interactiveshell.py", line 2862, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "<ipython-input-5-e27dc62b681a>", line 1, in <module>

a@a.t()

RuntimeError: t() expects a 2D tensor, but self is 1D

This is because PyTorch will not automatically increase the number of dimensions a tensor has.

You would have to do one of the following:

a @ a.view(1,-1).t() # `-1` is inferred from the remaining number of elements (here: 3), giving shape (1, 3)

a @ a.expand(1,-1).t() # `-1` means not changing the size of that dimension (here: stay at 3)

a @ a.unsqueeze(1) # unsqueeze inserts a new dimension of size 1 at the given index (here: index 1, giving shape (3, 1))

EDIT: I think view() is the fastest solution, but you would need to benchmark to know for sure.
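A sketch that applies the three options to the 1D tensor `a` from the failing example and checks the shapes, assuming a recent PyTorch release. The variable names `col_view`, `col_expand`, and `col_unsqueeze` are made up for illustration.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])  # 1D, shape (3,)

col_view = a.view(1, -1).t()      # (1, 3) -> (3, 1)
col_expand = a.expand(1, -1).t()  # (1, 3) -> (3, 1)
col_unsqueeze = a.unsqueeze(1)    # (3, 1)

results = []
for col in (col_view, col_expand, col_unsqueeze):
    out = a @ col                 # (3,) @ (3, 1) -> shape (1,)
    results.append(out)
    print(tuple(col.shape), tuple(out.shape), out.item())  # (3, 1) (1,) 14.0

# With two 1D operands no reshaping is needed at all:
print((a @ a).item())  # 14.0
```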

Yeah, so the solution exists.

I’m trying to understand why a size-N tensor doesn’t behave naturally like a vector.