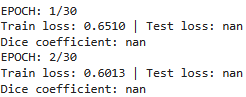

I built a U-Net model to segment diabetic retinopathy lesions, but when I trained it for 30 epochs, the test loss and the Dice coefficient came out as NaN.

```python
import os
import time

import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader

# ProcessDataset, Build_Unet, compute_meandice and train_x/train_y/test_x/test_y
# are defined elsewhere in my project.

startTime = time.time()

batch_size = 2
train_dataset = ProcessDataset(train_x, train_y)
test_dataset = ProcessDataset(test_x, test_y)
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True,
                          num_workers=os.cpu_count(), pin_memory=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False,
                         num_workers=os.cpu_count(), pin_memory=True)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = Build_Unet()
model.to(device)

trainSteps = len(train_dataset) // batch_size
testSteps = len(test_dataset) // batch_size
H = {"train_loss": [], "test_loss": [], "accuracy": [], "dice": []}

criterion = nn.BCEWithLogitsLoss()
criterion.to(device)
optimizer = optim.Adam(model.parameters(), lr=0.001)
num_epochs = 30

for epoch in range(num_epochs):
    model.train()
    totalTrainLoss = 0
    totalTestLoss = 0

    # training pass
    for i, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        target = target.unsqueeze(1)  # add channel dim: [N, H, W] -> [N, 1, H, W]
        output = model(data)
        loss = criterion(output, target)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        totalTrainLoss += loss

    # evaluation pass
    with torch.no_grad():
        model.eval()
        total_dice = 0
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            target = target.unsqueeze(1)
            output = model(data)
            loss = criterion(output, target)
            totalTestLoss += loss
            pred = torch.round(output)
            dice = compute_meandice(pred, target, include_background=False)
            total_dice += dice

    avg_dice = total_dice / len(test_loader)
    avgTrainLoss = totalTrainLoss / trainSteps
    avgTestLoss = totalTestLoss / testSteps
    H["train_loss"].append(avgTrainLoss.cpu().detach().numpy())
    H["test_loss"].append(avgTestLoss.cpu().detach().numpy())
    H["dice"].append(avg_dice)

    print("EPOCH: {}/{}".format(epoch + 1, num_epochs))
    print("Train loss: {:.4f} | Test loss: {:.4f}".format(
        avgTrainLoss, avgTestLoss))
    print("Dice coefficient: {:.4f}".format(avg_dice.mean().item()))

endTime = time.time()
print("[INFO] total time taken to train the model: {:.2f}s".format(
    endTime - startTime))
```
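One thing worth checking in the loop: it trains with `nn.BCEWithLogitsLoss`, so `output` holds raw logits, not probabilities, and `torch.round(output)` does not produce a 0/1 mask. A minimal sketch of the usual conversion, assuming a single-channel binary mask and a 0.5 threshold (`logits_to_mask` is a hypothetical helper name, not part of the original code):

```python
import torch


def logits_to_mask(logits: torch.Tensor, threshold: float = 0.5) -> torch.Tensor:
    """Turn raw logits (the input BCEWithLogitsLoss expects) into a 0/1 float mask."""
    probs = torch.sigmoid(logits)       # squash logits into probabilities in (0, 1)
    return (probs > threshold).float()  # hard threshold into a binary mask


if __name__ == "__main__":
    logits = torch.tensor([[-3.2, 0.4], [1.7, -0.1]])
    print(torch.round(logits))     # contains -3 and 2, so it is not a binary mask
    print(logits_to_mask(logits))  # contains only 0s and 1s
```

In the test loop this would replace `pred = torch.round(output)` with `pred = logits_to_mask(output)`.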

Here I want to print the training loss, test loss, and Dice coefficient to monitor the model's performance, but I'm stuck because the test loss and the Dice coefficient come out as NaN, as shown in the image.
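Whatever `compute_meandice` does internally, a single NaN from any batch makes `total_dice` NaN for the rest of the epoch, because `total_dice += dice` is a plain running sum. In lesion segmentation, a NaN Dice can come from empty masks (0/0 when neither the prediction nor the ground truth has foreground pixels). A minimal hand-written Dice for binary masks with a smoothing term avoids the 0/0; the name `dice_binary`, the `eps` value, and the `[N, 1, H, W]` 0/1 input layout are assumptions, not part of the original code:

```python
import torch


def dice_binary(pred: torch.Tensor, target: torch.Tensor, eps: float = 1e-6) -> torch.Tensor:
    """Per-sample Dice score for 0/1 masks shaped [N, 1, H, W].

    eps keeps the ratio finite when both masks are empty (0/0 would be NaN);
    in that case the score evaluates to 1.0.
    """
    dims = tuple(range(1, pred.dim()))  # reduce over channel and spatial dims
    intersection = (pred * target).sum(dim=dims)
    denominator = pred.sum(dim=dims) + target.sum(dim=dims)
    return (2.0 * intersection + eps) / (denominator + eps)


if __name__ == "__main__":
    pred = torch.zeros(2, 1, 4, 4)
    target = torch.zeros(2, 1, 4, 4)
    # Sample 0: prediction and target share the same 2x2 lesion.
    pred[0, 0, :2, :2] = 1
    target[0, 0, :2, :2] = 1
    # Sample 1 is left empty in both masks.
    print(dice_binary(pred, target))  # both scores are finite (1.0)

    # One NaN poisons a plain running sum; torch.nanmean skips NaN entries.
    scores = torch.tensor([0.8, float("nan"), 0.6])
    print(scores.sum(), torch.nanmean(scores))
```

Scoring an empty/empty pair as 1.0 is one convention; another is to leave such samples as NaN and exclude them from the average, which is what `torch.nanmean` does.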

Since I'm new to PyTorch, does anyone know how to fix this, or is there something wrong in my code? Thank you in advance!
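Since the test loss itself is NaN, it may help to rule out bad values in the test data before looking at the model. A small sketch, assuming each batch is a `(data, target)` pair of tensors as in the loops above; `find_nonfinite_batches` is a hypothetical helper, and the synthetic data is for illustration only. It also shows that `len(loader)` (the real number of batches) can differ from `len(dataset) // batch_size`, which is what `testSteps` uses; the latter is 0 when the test set has fewer samples than `batch_size`.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def find_nonfinite_batches(loader):
    """Return indices of batches whose inputs or targets contain NaN or Inf."""
    bad = []
    for i, (data, target) in enumerate(loader):
        if not (torch.isfinite(data).all() and torch.isfinite(target).all()):
            bad.append(i)
    return bad


if __name__ == "__main__":
    images = torch.rand(5, 3, 8, 8)
    masks = torch.randint(0, 2, (5, 8, 8)).float()
    images[3, 0, 0, 0] = float("nan")  # corrupt one sample on purpose
    loader = DataLoader(TensorDataset(images, masks), batch_size=2, shuffle=False)
    print(find_nonfinite_batches(loader))  # sample 3 falls in batch 1
    print(len(loader), len(images) // 2)   # 3 batches vs. 2 "steps"
```

Running the check on `test_loader` once before training separates data problems from model or metric problems.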