111480

August 4, 2021, 7:10am

1

GPU profiling shows that the CUDA kernels launched internally differ between Python and C++.

Python

models.densenet201(pretrained=True).cuda().eval()

torch.jit.load("densenet201_model.pt").cuda().eval()

C++

torch::jit::load("densenet201_model.pt");

All three setups above produce the same results and run the same layers, but the types and numbers of CUDA kernels launched internally differ in each case.

More kernel types ran in Python.

What’s the reason for this result?

Do you know which kernels were called additionally in Python, and were you using the same setup in both cases? For example, if you were using cuDNN's benchmark mode, different kernels would be profiled for each new input shape, and these would also show up in the profile.
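In case it helps with the comparison, here is a minimal sketch for checking the cuDNN flags of the current process and for collecting the names of the profiled events so that two runs can be diffed. The helper names `cudnn_settings` and `profiled_event_names` are hypothetical, and the small `Conv2d` model is only a stand-in for DenseNet201 so the snippet runs without downloading weights. The event names include operators as well as CUDA kernels when a GPU is profiled, so the output would need some filtering in practice.

```python
import torch
from torch.profiler import profile, ProfilerActivity


def cudnn_settings():
    """Return the cuDNN-related flags of the current process."""
    return {
        "available": torch.backends.cudnn.is_available(),
        "enabled": torch.backends.cudnn.enabled,
        "benchmark": torch.backends.cudnn.benchmark,
        "deterministic": torch.backends.cudnn.deterministic,
    }


def profiled_event_names(model, example_input, warmup=3):
    """Hypothetical helper: names of all events recorded for one forward pass."""
    activities = [ProfilerActivity.CPU]
    if torch.cuda.is_available():
        activities.append(ProfilerActivity.CUDA)
    with torch.no_grad():
        # Warm-up passes keep one-time work (algorithm search, JIT
        # optimization) out of the profiled iteration.
        for _ in range(warmup):
            model(example_input)
        with profile(activities=activities) as prof:
            model(example_input)
            if torch.cuda.is_available():
                torch.cuda.synchronize()
    return {evt.key for evt in prof.key_averages()}


if __name__ == "__main__":
    print(cudnn_settings())
    device = "cuda" if torch.cuda.is_available() else "cpu"
    # Stand-in model (assumption); replace with the DenseNet201 variants.
    model = torch.nn.Conv2d(3, 8, kernel_size=3).to(device).eval()
    x = torch.randn(1, 3, 32, 32, device=device)
    eager = profiled_event_names(model, x)
    scripted = profiled_event_names(torch.jit.script(model), x)
    # Event names that appear in only one of the two runs.
    print(sorted(eager ^ scripted))
```

Running the same kind of collection on the C++ side and comparing the name sets should show which kernels are specific to each setup.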

111480

August 6, 2021, 8:14am

3

I did not explicitly set the following in my code.

torch.backends.cudnn.enabled = True

Is cuDNN used by default?

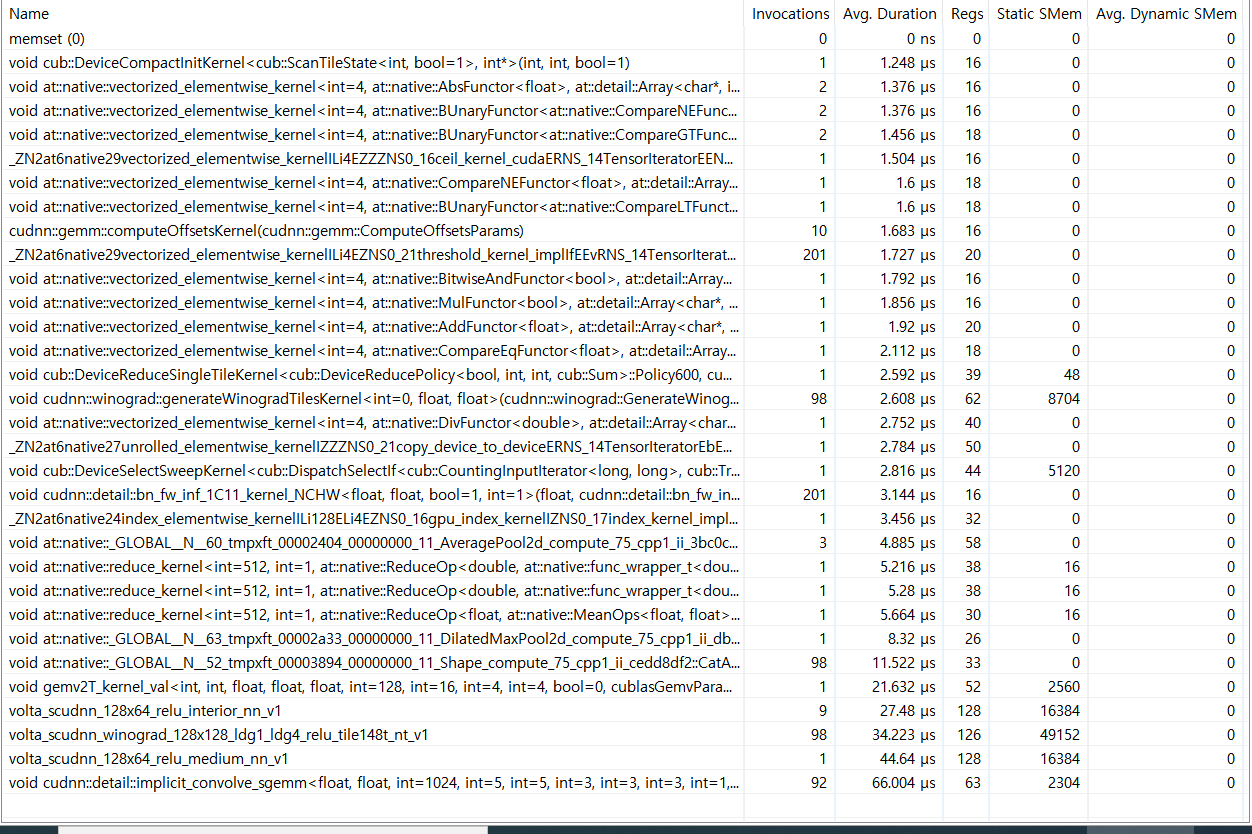

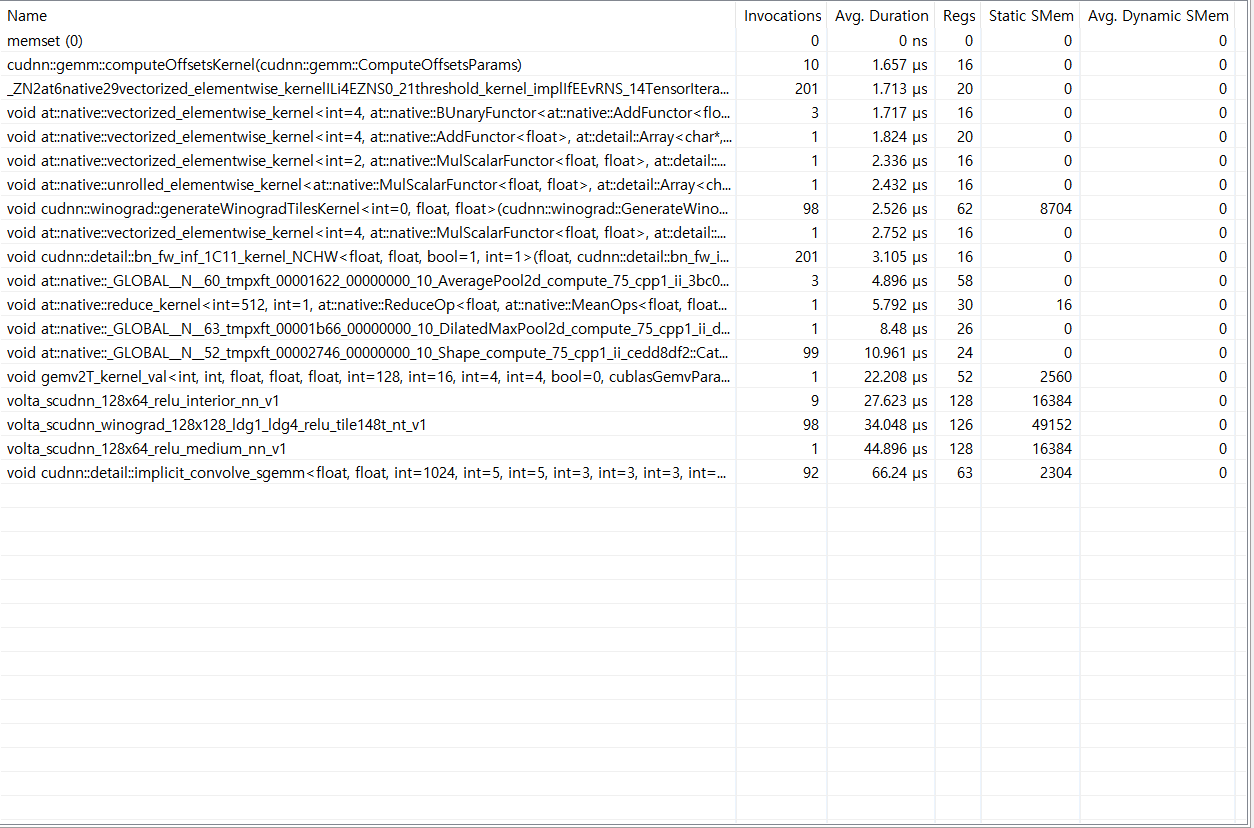

The kernels used by each setup are listed below.

Python

models.densenet201(pretrained=True).cuda().eval()

torch.jit.load("densenet201_model.pt").cuda().eval()

C++

torch::jit::load("densenet201_model.pt");

Why are all the results different even though it’s the same DenseNet201?