While testing matrix multiplication with PyTorch, I observed the following:

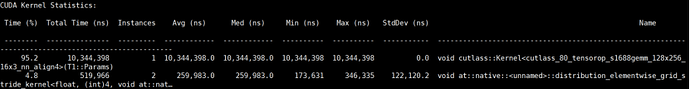

When the problem size is m=10240, n=5120, k=5120, the CUDA kernel that PyTorch uses for the matrix multiplication is:

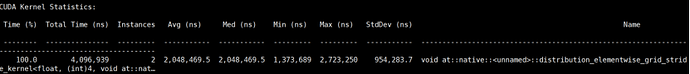

But when the problem size is m=40960, n=20480, k=10240, the result is:

Question:

When m=40960, n=20480, k=10240, is the CUDA kernel not being used?

The code is:

    import torch
    import time

    # Allow TF32 tensor-core math for float32 matrix multiplications
    torch.backends.cuda.matmul.allow_tf32 = True

    m = 40960
    n = 20480
    k = 5120

    input = torch.randn(m, k, dtype=torch.float32, device='cuda')
    weight = torch.randn(k, n, dtype=torch.float32, device='cuda')
    output = torch.matmul(input, weight)
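One way to cross-check which kernels actually run, independently of an external profiler, might be PyTorch's built-in `torch.profiler`. The following is only a minimal sketch and not a diagnosis of the behavior described above: the helper name `profile_matmul` is made up for illustration, and the sketch assumes a PyTorch version that ships `torch.profiler`. It synchronizes before leaving the profiling context because CUDA kernel launches are asynchronous.

```python
import torch
from torch.profiler import ProfilerActivity, profile


def profile_matmul(m, n, k, device="cuda"):
    """Run one (m x k) @ (k x n) float32 matmul under the PyTorch profiler.

    Hypothetical helper for illustration; returns the result tensor and the
    averaged profiler events.
    """
    a = torch.randn(m, k, dtype=torch.float32, device=device)
    b = torch.randn(k, n, dtype=torch.float32, device=device)

    activities = [ProfilerActivity.CPU]
    if device == "cuda":
        activities.append(ProfilerActivity.CUDA)

    with profile(activities=activities) as prof:
        out = torch.matmul(a, b)
        if device == "cuda":
            # Kernel launches are asynchronous; wait so the kernel is recorded.
            torch.cuda.synchronize()

    return out, prof.key_averages()


if __name__ == "__main__":
    if torch.cuda.is_available():
        torch.backends.cuda.matmul.allow_tf32 = True
        device, sizes = "cuda", (10240, 5120, 5120)  # smaller size from the post
    else:
        device, sizes = "cpu", (256, 128, 128)  # small fallback without a GPU

    _, events = profile_matmul(*sizes, device=device)
    # On a GPU, the table lists the launched CUDA kernels by name.
    print(events.table(row_limit=20))
```

Running it once per problem size and comparing the kernel names in the two tables would show whether a GEMM kernel is launched in both cases.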