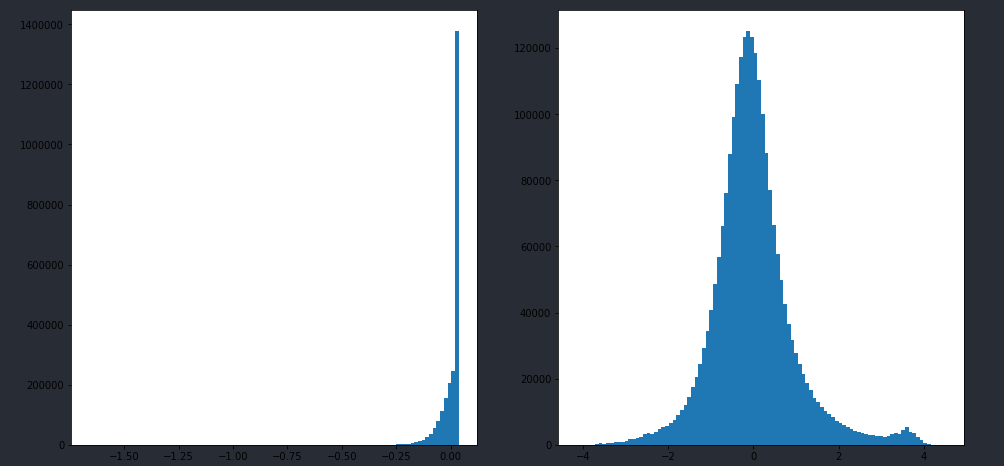

I am using some CNN layers for a regression task with an MSE loss function. The labels are roughly normally distributed, but after a few training iterations the distribution of the predictions becomes very strange.
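One thing worth checking with roughly normal labels: the dense head in the model structure further down ends in `Tanh`, which can only output values in (-1, 1). If the raw labels extend beyond that range, MSE keeps pushing the pre-activation outward and the predictions can saturate and pile up near ±1. Below is a minimal sketch, assuming the labels are available as a 1-D float tensor, of min-max scaling the targets into [-0.9, 0.9] and mapping predictions back. `TanhRangeScaler` and the 0.9 margin are hypothetical choices, not from the post; the other common option is to remove the final `Tanh` so the output is unbounded.

```python
import torch


class TanhRangeScaler:
    """Hypothetical helper: min-max scale regression targets into a Tanh-friendly range."""

    def __init__(self, margin: float = 0.9):
        # margin < 1 keeps the extreme labels reachable without fully saturating Tanh.
        self.margin = margin
        self.lo = None
        self.hi = None

    def fit(self, y: torch.Tensor) -> "TanhRangeScaler":
        # Record the label range seen in the (training) data.
        self.lo = y.min().item()
        self.hi = y.max().item()
        return self

    def transform(self, y: torch.Tensor) -> torch.Tensor:
        # Map [lo, hi] linearly onto [-margin, margin].
        unit = (y - self.lo) / (self.hi - self.lo)  # now in [0, 1]
        return (2.0 * unit - 1.0) * self.margin

    def inverse(self, y_scaled: torch.Tensor) -> torch.Tensor:
        # Undo transform() so predictions are reported in the original label units.
        unit = (y_scaled / self.margin + 1.0) / 2.0
        return unit * (self.hi - self.lo) + self.lo


# Usage with random stand-in labels (not the poster's data).
torch.manual_seed(0)
labels = torch.randn(500) * 5.0 + 20.0
scaler = TanhRangeScaler().fit(labels)
scaled = scaler.transform(labels)
# Train with nn.MSELoss() against `scaled`, then call scaler.inverse(model_output).
```

Fitting the range on the training labels only, and reusing it for validation data, keeps the mapping consistent between the two.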

In the image above, the left side shows the distribution of the predictions and the right side shows the distribution of the labels.
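To compare the two distributions numerically rather than only by eye, here is a minimal sketch that histograms predictions and labels over one shared range and reports a few summary statistics. It assumes both are available as tensors; `compare_distributions` is a hypothetical helper, and the 0.99 threshold for counting a prediction as saturated against the Tanh limits is an arbitrary choice. The data in the usage lines are random stand-ins.

```python
import torch


def compare_distributions(preds: torch.Tensor, labels: torch.Tensor, bins: int = 20):
    """Hypothetical helper: histogram predictions and labels over one shared range."""
    preds = preds.detach().flatten().float()
    labels = labels.detach().flatten().float()
    # A shared [lo, hi] range makes the bins of the two histograms line up.
    lo = min(preds.min().item(), labels.min().item())
    hi = max(preds.max().item(), labels.max().item())
    pred_hist = torch.histc(preds, bins=bins, min=lo, max=hi)
    label_hist = torch.histc(labels, bins=bins, min=lo, max=hi)
    summary = {
        "pred_mean": preds.mean().item(),
        "pred_std": preds.std().item(),
        "label_mean": labels.mean().item(),
        "label_std": labels.std().item(),
        # Fraction of predictions pinned near the Tanh limits of -1 and +1.
        "pred_saturated": (preds.abs() > 0.99).float().mean().item(),
    }
    return pred_hist, label_hist, summary


# Usage with random stand-in data.
torch.manual_seed(0)
labels = torch.randn(1000) * 2.0
preds = torch.tanh(torch.randn(1000) * 3.0)
pred_hist, label_hist, summary = compare_distributions(preds, labels)
print(summary)
```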

The model structure:

```
model4(
  (conv_channel1): Sequential(
    (0): Conv2d(3, 16, kernel_size=(2, 2), stride=(1, 1), padding=(2, 2))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(16, 32, kernel_size=(2, 2), stride=(1, 1), padding=(2, 2))
    (4): ReLU()
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv_channel2): Sequential(
    (0): Conv2d(3, 16, kernel_size=(2, 2), stride=(2, 2), padding=(3, 3))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(16, 32, kernel_size=(2, 2), stride=(2, 2), padding=(3, 3))
    (4): ReLU()
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (conv_channel3): Sequential(
    (0): Conv3d(1, 8, kernel_size=(3, 3, 3), stride=(1, 1, 1), padding=(2, 2, 2))
    (1): ReLU()
    (2): MaxPool3d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv3d(8, 16, kernel_size=(3, 2, 2), stride=(1, 1, 1), padding=(1, 1, 1))
    (4): ReLU()
    (5): MaxPool3d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  # The outputs of the three conv branches above are flattened and used as the input of the dense layers
  (dense): Sequential(
    (0): Linear(in_features=864, out_features=128, bias=True)
    (1): ReLU()
    (2): Linear(in_features=128, out_features=64, bias=True)
    (3): ReLU()
    (4): Linear(in_features=64, out_features=1, bias=True)
    (5): Tanh()
  )
)
```
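A runnable sketch of the printed structure can help with checking intermediate shapes. The printout does not include `forward()` or the input sizes, so several parts here are assumptions: each 2D branch receives its own 3-channel image (possibly the same tensor), the 3D branch receives a 1-channel volume, the flattened branch outputs are concatenated (following the comment in the printout), and the input shapes `(3, 16, 16)` and `(1, 8, 16, 16)` are hypothetical. Because of that, the first `Linear` layer's input size is measured with a dummy pass instead of being hard-coded to 864. `Model4Sketch` is a hypothetical name.

```python
import torch
import torch.nn as nn


class Model4Sketch(nn.Module):
    """Rough reconstruction of the printed model4; forward() and input shapes are assumed."""

    def __init__(self, img_shape=(3, 16, 16), vol_shape=(1, 8, 16, 16)):
        super().__init__()
        self.conv_channel1 = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=2, stride=1, padding=2), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=2, stride=1, padding=2), nn.ReLU(), nn.MaxPool2d(2),
        )
        self.conv_channel2 = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=2, stride=2, padding=3), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=2, stride=2, padding=3), nn.ReLU(), nn.MaxPool2d(2),
        )
        self.conv_channel3 = nn.Sequential(
            nn.Conv3d(1, 8, kernel_size=3, stride=1, padding=2), nn.ReLU(), nn.MaxPool3d(2),
            nn.Conv3d(8, 16, kernel_size=(3, 2, 2), stride=1, padding=1), nn.ReLU(), nn.MaxPool3d(2),
        )
        # in_features depends on the input sizes, so measure it with one dummy pass.
        with torch.no_grad():
            n_flat = self._features(
                torch.zeros(1, *img_shape), torch.zeros(1, *img_shape), torch.zeros(1, *vol_shape)
            ).shape[1]
        self.dense = nn.Sequential(
            nn.Linear(n_flat, 128), nn.ReLU(),
            nn.Linear(128, 64), nn.ReLU(),
            nn.Linear(64, 1), nn.Tanh(),  # output bounded to (-1, 1)
        )

    def _features(self, img_a, img_b, vol):
        # Flatten each branch and concatenate along the feature dimension (assumed).
        return torch.cat([
            self.conv_channel1(img_a).flatten(1),
            self.conv_channel2(img_b).flatten(1),
            self.conv_channel3(vol).flatten(1),
        ], dim=1)

    def forward(self, img_a, img_b, vol):
        return self.dense(self._features(img_a, img_b, vol))


model = Model4Sketch()
print(model.dense[0].in_features)  # 2080 for these assumed shapes
out = model(torch.randn(4, 3, 16, 16), torch.randn(4, 3, 16, 16), torch.randn(4, 1, 8, 16, 16))
print(out.shape)  # torch.Size([4, 1])
```

If the concatenation assumption matches the original forward pass, passing the real input shapes to the constructor should reproduce `in_features=864`.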

Any ideas would be appreciated!