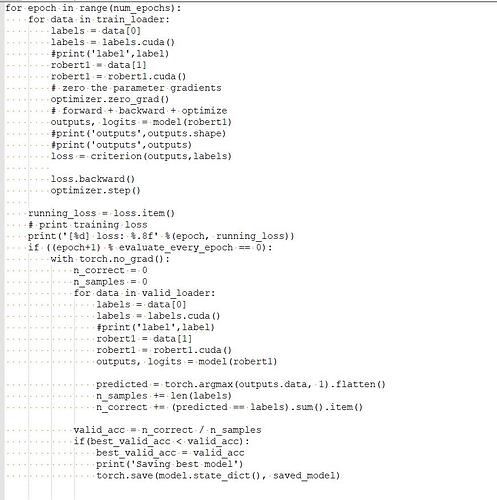

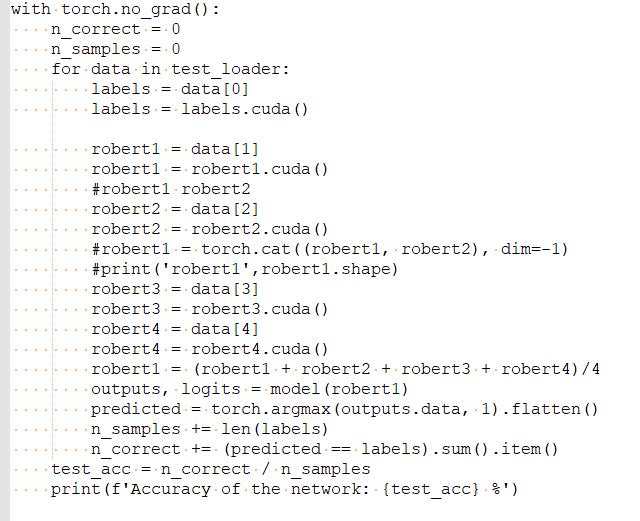

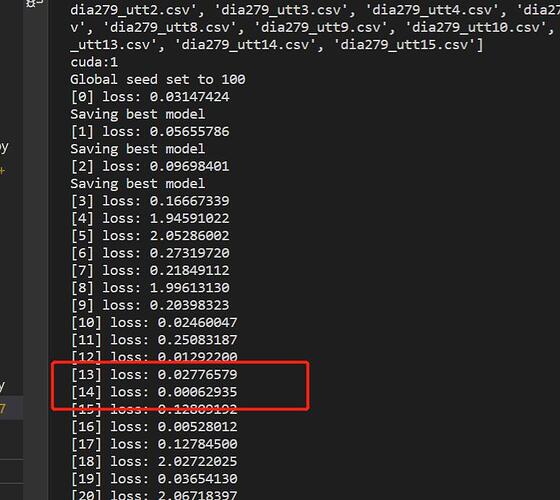

Hello everyone. Is there any problem with my code for the training, validation, and test sets? In particular, are the loss and accuracy calculations correct? I print the loss once per epoch, and on the training set it oscillates noticeably from one epoch to the next. Thank you all.

It looks like you’re printing only the loss of the last minibatch in each epoch. If you want a more accurate picture of the loss, I suggest collecting all the minibatch losses into a list, then taking the average (of, e.g., the last 100 losses) at the end of the epoch and printing that.
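A minimal sketch of that idea, using a fixed-size window (`collections.deque(maxlen=100)`) so only the 100 most recent losses are kept. The per-minibatch losses here are simulated (a made-up decreasing trend plus noise) and stand in for `loss.item()` from a real loop:

```python
import random
from collections import deque

# Hypothetical per-minibatch losses: a slowly decreasing trend plus noise,
# standing in for the values of loss.item() in a real training loop.
random.seed(0)
batch_losses = [1.0 - 0.0005 * i + random.gauss(0.0, 0.3) for i in range(1000)]

window = deque(maxlen=100)  # automatically drops the oldest loss once full
for loss_value in batch_losses:
    window.append(loss_value)

last_batch_loss = batch_losses[-1]        # what printing only the last minibatch shows
running_loss = sum(window) / len(window)  # mean of the last 100 minibatch losses
print(f"last minibatch: {last_batch_loss:.3f}, mean of last 100: {running_loss:.3f}")
```

The single last value can land anywhere within the noise band, while the windowed mean stays close to the underlying trend (about 0.52 at the end of this simulation).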

However, the loss does seem to fluctuate pretty badly… maybe your minibatch size is very small? Or maybe you’re actually just taking the first example from a minibatch in data[0]?
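Why a small minibatch makes the per-batch loss jumpy: if examples are independent, the standard deviation of a minibatch's mean loss shrinks roughly as 1/sqrt(batch size). The simulation below assumes made-up per-example losses (mean 0.5, std 0.4) from a model that is not changing at all, so all of the spread is sampling noise; `minibatch_loss_spread` is a hypothetical helper for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical per-example losses drawn from one fixed distribution
# (mean 0.5, std 0.4), i.e. a model whose true loss is not changing.
per_example = rng.normal(loc=0.5, scale=0.4, size=100_000)

def minibatch_loss_spread(batch_size):
    """Std. dev. of the mean loss across minibatches of the given size."""
    n_batches = len(per_example) // batch_size
    batches = per_example[: n_batches * batch_size].reshape(n_batches, batch_size)
    return batches.mean(axis=1).std()

for b in (4, 64):
    print(b, round(float(minibatch_loss_spread(b)), 3))
```

This should print values close to 0.4 / sqrt(4) = 0.2 and 0.4 / sqrt(64) = 0.05: a 16× larger batch gives about 4× less fluctuation in the printed loss.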

Going through the training loop line by line with a debugger always helps!

@harpone Thank you for your advice. To be honest, I am new to DL and PyTorch, and I don’t know how to print the average loss and accuracy for an epoch. Could you please share more detailed code or an example? Thank you very much.

Well, it’s kinda hard to modify your code because you shared a screenshot instead of code, but something like:

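```python
import numpy as np

running_loss_list = list()
for epoch in range(num_epochs):
    for data in train_loader:
        ...  # your forward pass, loss computation, backward pass and optimizer step
        running_loss_list.append(loss.item())
    # average of (up to) the last 100 minibatch losses of this epoch
    running_loss = np.mean(running_loss_list[-100:])
    print(f"epoch {epoch}: loss {running_loss:.4f}")
    running_loss_list = list()  # reset for the next epoch
```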
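For the accuracy part of the question, one option is to accumulate a sample-weighted loss and a count of correct predictions over the whole epoch. A minimal sketch, assuming a classifier whose loss is mean-reduced over the batch (the default for `nn.CrossEntropyLoss`); `EpochStats` is a hypothetical helper, and the numbers in the usage part are made up:

```python
import numpy as np

class EpochStats:
    """Accumulates sample-weighted mean loss and accuracy over one epoch."""

    def __init__(self):
        self.loss_sum = 0.0  # sum of (per-batch mean loss * batch size)
        self.correct = 0     # number of correctly classified examples
        self.count = 0       # number of examples seen so far

    def update(self, batch_loss, predictions, labels):
        # batch_loss: mean loss over the batch (e.g. loss.item())
        # predictions, labels: 1-D arrays of class indices for the batch
        predictions = np.asarray(predictions)
        labels = np.asarray(labels)
        n = labels.shape[0]
        self.loss_sum += batch_loss * n
        self.correct += int((predictions == labels).sum())
        self.count += n

    def result(self):
        # returns (average loss per example, accuracy) for the epoch
        return self.loss_sum / self.count, self.correct / self.count


# Usage with made-up numbers: two batches of sizes 4 and 2.
stats = EpochStats()
stats.update(0.9, predictions=[0, 1, 1, 2], labels=[0, 1, 2, 2])
stats.update(0.6, predictions=[1, 0], labels=[1, 1])
epoch_loss, epoch_acc = stats.result()
print(f"loss {epoch_loss:.3f}, accuracy {epoch_acc:.3f}")  # loss 0.800, accuracy 0.667
```

In a PyTorch loop you would create a fresh `EpochStats()` at the start of each epoch (and a separate one for the validation and test sets, evaluated under `torch.no_grad()`), then call something like `stats.update(loss.item(), outputs.argmax(dim=1).cpu().numpy(), labels.cpu().numpy())` per batch. Weighting by batch size keeps a smaller final batch from skewing the epoch average.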

@harpone I am grateful for your detailed help.

Thanks, best wishes