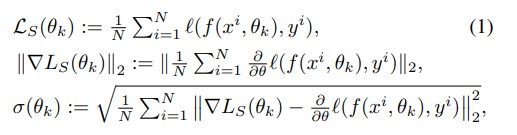

I am training ResNet101 on Tiny ImageNet. How do I compute the L2 norm of the gradient and the gradient noise (see the last two lines in the image above) per epoch? Here, L is a standard loss function.
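The formulas in the image are not available as text, so the following is an assumption: these are the definitions the two quantities most commonly take. Here $g_t$ is the minibatch gradient at iteration $t$ and $T$ is the number of iterations in the epoch. The image's exact definitions may differ.

$$
g_t = \nabla_\theta L(\theta_t;\, X_t, y_t), \qquad
\|g_t\|_2 = \sqrt{\textstyle\sum_j g_{t,j}^2}
$$

$$
\bar g = \frac{1}{T}\sum_{t=1}^{T} g_t, \qquad
\frac{1}{T}\sum_{t=1}^{T} \|g_t - \bar g\|_2^2
= \frac{1}{T}\sum_{t=1}^{T} \|g_t\|_2^2 - \|\bar g\|_2^2
$$

The right-hand form of the noise term means it can be tracked with two running sums (of $g_t$ and of $\|g_t\|_2^2$) instead of storing every gradient.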

My plan is to compute both quantities per iteration first and then average them over the epoch. Is this correct for the gradient norm?
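A minimal sketch of the per-iteration approach, assuming "gradient L2 norm" means the norm of all parameter gradients concatenated into one vector. The helper `grad_l2_norm` and the toy model and data are hypothetical stand-ins for the real ResNet101 setup.

```python
import torch
from torch import nn


def grad_l2_norm(model: nn.Module) -> float:
    """L2 norm of all parameter gradients, treated as one flat vector."""
    # Parameters without a gradient (e.g. frozen layers) are skipped.
    per_param = [p.grad.detach().norm(2) for p in model.parameters() if p.grad is not None]
    if not per_param:
        return 0.0
    # sqrt(sum_k ||g_k||^2) equals the norm of the concatenated gradient vector.
    return torch.stack(per_param).norm(2).item()


# Toy stand-ins for ResNet101 / Tiny ImageNet (hypothetical, for illustration).
torch.manual_seed(0)
model = nn.Linear(4, 3)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
batches = [(torch.randn(8, 4), torch.randint(0, 3, (8,))) for _ in range(5)]

batch_norms = []
for X, y in batches:
    optimizer.zero_grad()
    loss_fn(model(X), y).backward()
    batch_norms.append(grad_l2_norm(model))  # one value per iteration
    optimizer.step()  # step() updates the weights but leaves .grad untouched

epoch_grad_norm = sum(batch_norms) / len(batch_norms)  # average over the epoch
print(f"mean per-batch gradient L2 norm: {epoch_grad_norm:.4f}")
```

Note that this averages the norms of the minibatch gradients. By the triangle inequality that value is always at least the norm of the epoch-averaged gradient, so which one counts as "the gradient norm" depends on the formula in the image. As an alternative to the helper, `torch.nn.utils.clip_grad_norm_(model.parameters(), float("inf"))` should also return this total norm without rescaling the gradients.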

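```python
import torch

# model, train_loader, loss_fn, optimizer, device and loss_value are defined earlier
for batch_num, (X, y) in enumerate(train_loader):
    X = X.to(device)
    y = y.to(device)

    optimizer.zero_grad()
    y_pred = model(X)
    loss = loss_fn(y_pred, y)
    loss_value += loss.item()
    loss.backward()
    optimizer.step()  # updates the weights; param.grad still holds this batch's gradients

    # Collect this batch's gradients into one flat vector
    all_batch_gradients = []
    for param in model.parameters():
        if param.grad is None:  # e.g. frozen parameters have no gradient
            continue
        all_batch_gradients.append(param.grad.detach().reshape(-1).to("cpu"))
    all_batch_gradients = torch.cat(all_batch_gradients)
```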
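One possible way to turn the per-batch gradients collected in the loop above into a per-epoch noise value, sketched under an assumption: "gradient noise" is taken to mean the mean squared distance of the minibatch gradients from their epoch average, which may not match the image's exact formula. The class `EpochGradStats` and the toy model and data are hypothetical. Instead of keeping every flattened gradient, it keeps a running sum of the gradient vectors and a running sum of their squared norms.

```python
import torch
from torch import nn


class EpochGradStats:
    """Hypothetical helper: running gradient statistics over one epoch.

    Assumes "gradient noise" means the mean squared distance of the minibatch
    gradients g_t from their epoch average g_bar:
        noise = mean_t ||g_t - g_bar||^2 = mean_t ||g_t||^2 - ||g_bar||^2
    """

    def __init__(self) -> None:
        self.count = 0
        self.sum_grad = None    # running sum of flattened gradients (float64)
        self.sum_sq_norm = 0.0  # running sum of ||g_t||_2^2

    @torch.no_grad()
    def update(self, model: nn.Module) -> None:
        # One flat vector per batch; parameters without a gradient contribute
        # zeros so the vector length is the same on every iteration.
        g = torch.cat([
            (p.grad if p.grad is not None else torch.zeros_like(p)).reshape(-1)
            for p in model.parameters()
        ]).double()  # float64 limits round-off in the subtraction in compute()
        if self.sum_grad is None:
            self.sum_grad = torch.zeros_like(g)
        self.sum_grad += g
        self.sum_sq_norm += g.dot(g).item()
        self.count += 1

    def compute(self) -> dict:
        mean_grad = self.sum_grad / self.count
        mean_sq_norm = self.sum_sq_norm / self.count
        noise = mean_sq_norm - mean_grad.dot(mean_grad).item()
        return {
            "mean_sq_grad_norm": mean_sq_norm,
            "norm_of_mean_grad": mean_grad.norm().item(),
            "grad_noise": max(noise, 0.0),  # clamp tiny negative round-off
        }


# Toy stand-ins for ResNet101 / Tiny ImageNet (hypothetical, for illustration).
torch.manual_seed(0)
model = nn.Linear(4, 3)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
batches = [(torch.randn(8, 4), torch.randint(0, 3, (8,))) for _ in range(5)]

stats = EpochGradStats()
batch_grads = []  # kept only to cross-check the running sums on this toy problem
for X, y in batches:
    optimizer.zero_grad()
    loss_fn(model(X), y).backward()
    stats.update(model)
    batch_grads.append(torch.cat([p.grad.reshape(-1) for p in model.parameters()]).double())
    optimizer.step()

result = stats.compute()
print(result)
```

In the real loop, one would create a fresh `EpochGradStats` at the start of each epoch, call `stats.update(model)` after `loss.backward()`, and call `stats.compute()` after the last batch. Two caveats: the float64 running sum has one entry per parameter, which for ResNet101 is a few hundred MB; and because the weights change during the epoch, the gradients are taken at different points $\theta_t$, so the result only approximates the noise at a single point.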