Hi everyone,

I am doing a task about depth estimation. At the beginning, my network is ended with a ReLU layer. But every time I trained it with a single sample, I found the parameters became zero after the first optimizer.step(). After I removed the last ReLU layer, result became good. I am pretty sure this has nothing to do with inplace operation. Both my inputs and targets are normalized, and all the parameters are well pretrained(resnet50) or initiated(Xavier_normal_).

What could be the cause of the problem?

Can you provide some code for the model? If you are saying the parameters become zero after the optimizer step, it means the parameter update equation i.e w := w - alphagrad_w, alphagrad_w is becoming = w if the implementation is correct.

import torch

import math

import torch.nn as nn

import torch.nn.functional as func

import resnet as myResnet

class upConv(nn.Module):

def __init__(self,ins,outs):

super(upConv,self).__init__()

self.conv55 = nn.Conv2d(ins,outs,kernel_size = 5,padding = 2)

n = self.conv55.kernel_size[0]*self.conv55.kernel_size[1]*self.conv55.out_channels

self.conv55.weight.data.normal_(0,math.sqrt(2./n))

def forward(self,x):

assert len(x.size()) == 4

size = x.size()

out = torch.zeros(size[0],size[1],size[2]*2,size[3]*2,requires_grad = True).cuda()

col = [i*2 for i in range(size[3])]

for row in range(size[2]):

out[:,:,2*row,col] = x[:,:,row,:]

out = self.conv55(out)

out = func.relu(out,inplace = False)

return out

class fcrn(nn.Module):

def __init__(self):

super(fcrn,self).__init__()

self.firstHalf = myResnet.resnet50()

self.upConv_1024_512 = upConv(1024,512)

self.upConv_512_256 = upConv(512,256)

self.upConv_256_128 = upConv(256,128)

self.upConv_128_64 = upConv(128,64)

self.bn_64 = nn.BatchNorm2d(64)

self.bn_1024 = nn.BatchNorm2d(1024)

self.conv11 = nn.Conv2d(2048,1024,kernel_size = 1)

self.conv33 = nn.Conv2d(64,1,kernel_size = 3,padding = 1)

self.maxPool = nn.MaxPool2d(3,stride = 2,padding = 1)

for m in self.modules():

if isinstance(m,nn.Conv2d):

n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

m.weight.data.normal_(0, math.sqrt(2./n))

elif isinstance(m,nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

def forward(self,x):

out = self.firstHalf(x)

out = self.conv11(out)

out = self.bn_1024(out)

out = self.upConv_1024_512(out)

out = self.upConv_512_256(out)

out = self.upConv_256_128(out)

out = self.upConv_128_64(out)

out = self.conv33(out)

# out = func.relu(out,inplace = False)

return out

Thank you for your reply.

This is my model. myResnet is the official resnet without fc layer. I had initiated the upConv layer with the same method in resnet, but I got the same bad result.

And I’d also like to know how to vectorize the following code in forward function of class upConv.

out = torch.zeros(size[0],size[1],size[2]*2,size[3]*2,requires_grad = True).cuda()

col = [i*2 for i in range(size[3])]

for row in range(size[2]):

out[:,:,2*row,col] = x[:,:,row,:]

Can you try printing out output of some layers of your model and checking everything is working fine.

For the second part, you can try to use ‘fold’ function provided by pytorch to concatenate blocks.

Thanks, but I am confused about the second part. I can’t find ‘fold’ function in pytorch. 'fold' in dir(torch) or 'fold' in dir(torch.Tensor())) is False. I guess you mean unfold function?

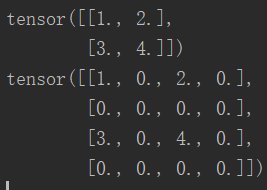

For example, I want to get the second tensor using the first one as the following figure shows. What should I do without for loop(extremely slow)?