Hi.

I am running a NAS (neural network search). The capacity of these models are too large even with batch_size =1 accounting for 18GB memory for a single GPU.

I have 4GPUs with with 12GB memory (48 GB totaly), how to run these code?

Depending on the model architecture, you could try to apply model sharding as shown in this exmaple.

This approach would store submodules on different devices and transfer the output to the corresponding device in the forward method.

Would this be possible or is a single layer already creating the OOM issue?

Thank you for your reply. In network perimeter training , the gpu0 were fully used, but gpu1,2,3 half but it works. when network architecture searching, it also create oom. In another words:

epoch < 5 :Data (4,3,224,224) GPU0:12GB GPU1~3 5GB

epoch >=5: Data(4,3,224,224) and Architect[(20,8),(12,4,3)]

How to do that or relax the gpu0 burden into others?

How did you split the model for the first runs?

Did you manually “chopped” the model in different parts and pushed each to a different device?

If so, try to move more layers/submodules to other devices.

I’m not sure, how the Architect[(20, 8), (12, 4, 3)] class works exactly, but I assume it’s creating different submodules?

actually, the code is a implementation of autodeeplab. I follow your instruction to modify my code:

class Architect_search(nn.Module):

def __init__(self,archi_search,data_model):

super(Architect_search, self).__init__()

self.archi_model = nn.DataParallel(archi_search,device_ids=[0,1]).to('cuda:0')

self.data_model = nn.DataParallel(data_model,device_ids=[2,3]).to('cuda:2')

def forward(self, data_image,search_image ):

search_result = self.archi_search(data_image)

train_result = self.data_model(search_image)

return search_result,train_result

bug got errors:

RuntimeError: module must have its parameters and buffers on device cuda:0 (device_ids[0]) but found one of them on device: cuda:2

specifically, the architect_search governs part of parameters of data_model.

class Architect_search (nn.Module) :

def __init__(self, data_model, args):

super(Architect_search, self).__init__()

self.network_momentum = args.momentum

self.network_weight_decay = args.weight_decay

self.optimizer = torch.optim.Adam(model.arch_parameters(),

lr=args.arch_lr, betas=(0.5, 0.999), weight_decay=args.arch_weight_decay)

self.data_model = data_model

def forward(self, input_valid):

logits = self.model(input_valid)

return logits

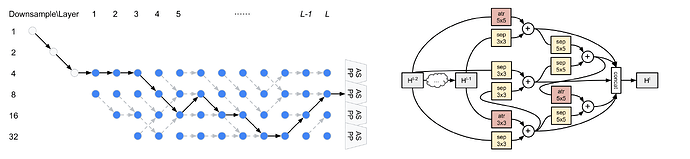

data_model is a common nn.Module class. arch_parameters will return [(20,8),(12,4,3)]

20 potential connection x 8 opperation types every block. 12 layers x4blockx3concatnation

If you use different modules on different GPUs, you would also have to transfer the tensors in the forward to the device as given in the example.

In your case search_result should be transferred to 'cuda:2'.

Thank you . It works.