I want to frozen the weight of pretrained vgg19, and just update the last fc layer when backpropagation

The model is shown in following block. I have already set with torch.no_grad(): when I input the images into vgg19.

class ImgEncoder(nn.Module):

def __init__(self, embed_dim):

super(ImgEncoder, self).__init__()

self.model = models.vgg19(pretrained=True)

in_features = self.model.classifier[-1].in_features

self.model.classifier = nn.Sequential(*list(self.model.classifier.children())[:-1]) # remove vgg19 last layer

self.fc = nn.Linear(in_features, embed_dim)

def forward(self, image):

with torch.no_grad():

img_feature = self.model(image) # (batch, channel, height, width)

img_feature = self.fc(img_feature)

l2_norm = F.normalize(img_feature, p=2, dim=1).detach()

return l2_norm

model = ImgEncoder(embed_dim=300)

the question is when I using the optimizer, how should I feed the parameter

optimizer = optim.Adam(model.parameters(), lr=0.001)

or

optimizer = optim.Adam(model.fc.parameters(), lr=0.001)

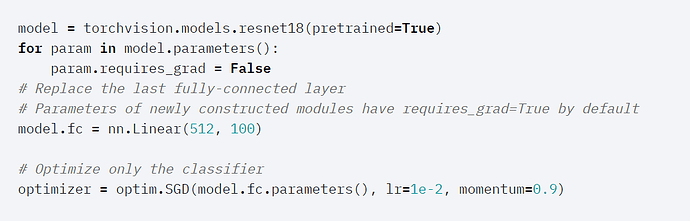

This question is similar to the tutorial in official website like following:

Why we can’t simply put the

model.parameters() but model.fc.parameters() instead ?